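Abstract: Computational imaging is a new generation of imaging technology that integrates optical hardware, image sensors, and algorithmic software. It breaks through the limits of conventional imaging in both the depth of information acquired (high dynamic range, low light) and its breadth (spectrum, light field, three dimensions). Organized around new design methods, new algorithms, and application scenarios, this paper surveys the main progress in the field by synthesizing the domestic and international literature and related reports. It covers end-to-end joint design of optics and algorithms, high dynamic range (HDR) imaging, light field imaging, spectral imaging, lensless imaging, low-light imaging, 3D imaging, and computational photography, with emphasis on the current state, frontier developments, open problems, and trends. End-to-end joint design of optics and algorithms includes differentiable diffractive optics models, refractive optics models, and models of complex lenses based on differentiable ray tracing. For HDR imaging, the paper discusses the strengths and weaknesses of the different approaches and their industrial applications, from principles to optical modulation, multiple exposures, multi-sensor fusion, and algorithms. For light field imaging, it reviews domestic and international progress and industrial applications of light-field-based 3D reconstruction in super-resolution, depth estimation, and 3D dimensional measurement, as well as progress on light fields in particle velocimetry and 3D flame reconstruction. For spectral imaging, it describes current approaches to hyperspectral acquisition: multi-channel filters, solving the inverse problem with deep learning and wavelength response curves, and optimized diffraction gratings, multiplexing, and metasurfaces. Lensless imaging covers the design and optimization of flat optical elements and high-quality image reconstruction algorithms. Low-light imaging covers image denoising under low light based on single frames, multiple frames, flash, and new sensors. 3D imaging mainly covers the latest solutions to the difficulties of active depth acquisition, including strong ambient light interference (such as sunlight) and strong indirect light interference (such as interreflections on concave surfaces and scattering in fog). Computational photography is a branch of computational imaging that grew out of traditional photography and places more emphasis on capturing images by means of digital computation. Its main research and application direction is how to render the image that best satisfies the user with reasonable computing resources when the physical size of the optics and the image quality are constrained.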

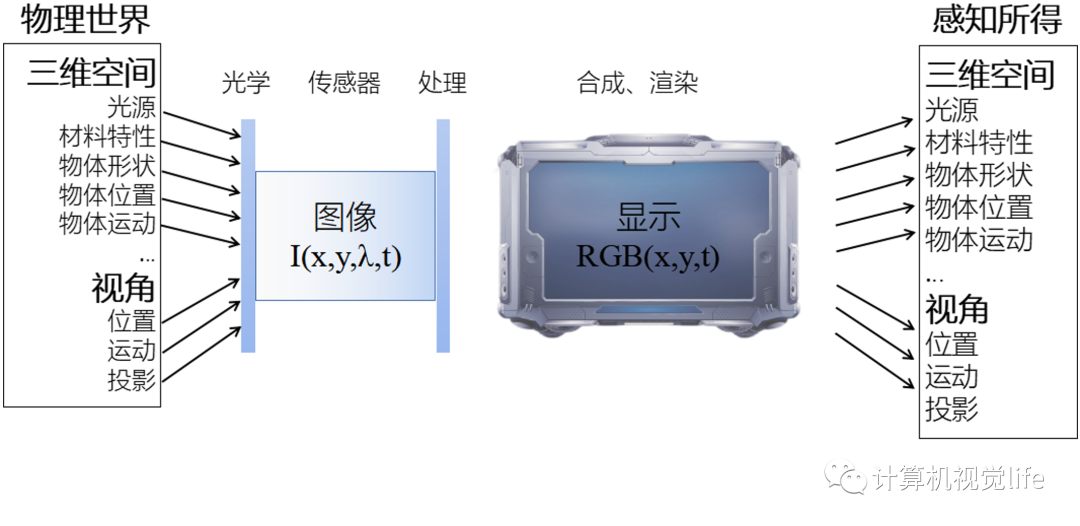

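Physical space carries information along many dimensions, such as the illumination spectrum, reflectance spectrum, polarization state, 3D shape, ray angle, and material properties. The image an imaging system finally forms is determined by the illumination spectrum, the light source position, the optical properties of the object's surface material (such as the bidirectional transmittance/scattering/reflectance distribution functions), the object's 3D shape, and so on. Conventional optical imaging, however, relies on experience-driven optical design that optimizes metrics such as the point spread function (PSF) and the modulation transfer function (MTF), with the goal of obtaining a sharper image and more faithful color on the detector. It is usually "what you see is what you get", with limited ability to sense multidimensional information. With advances in optics, new optoelectronic devices, algorithms, and computing resources, computational imaging, which integrates all of them, has gradually unlocked the ability to sense multidimensional information in physical space. Meanwhile, advances in display technology, especially 3D and even 6D cinema and virtual/augmented reality (VR/AR), have given multidimensional information a platform for presentation. Take mobile phones, which currently face strict physical size limits, as an example: judging from current trends, phone makers are working closely with academia. On the algorithm side, HDR imaging, low-light enhancement, color optimization, demosaicing, denoising, and even relighting are gradually being deployed on phones; beyond the traditional image processing pipeline, neural-network edge computing on phones is maturing. On the optics side, aberrations are optimized with aspheric and even freeform lenses, and the Bayer filter is optimized to balance light throughput against color.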

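This paper gives a comprehensive account of the current state, frontier developments, open problems, trends, and application guidance of computational imaging through specific examples: end-to-end joint design of optics and algorithms, HDR imaging, light field imaging, spectral imaging, lensless imaging, polarization imaging, low-light imaging, active 3D imaging, and computational photography. The overall framework is shown in Figure 1.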

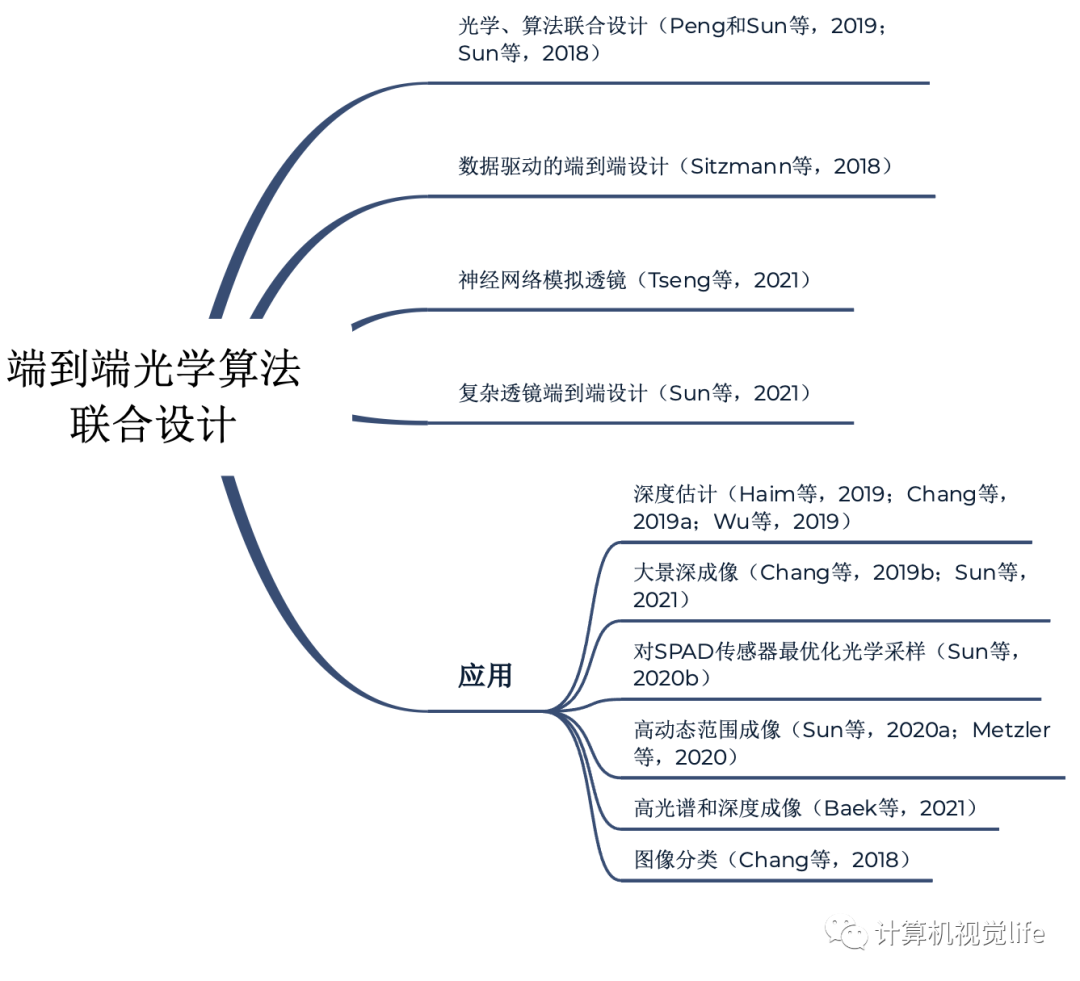

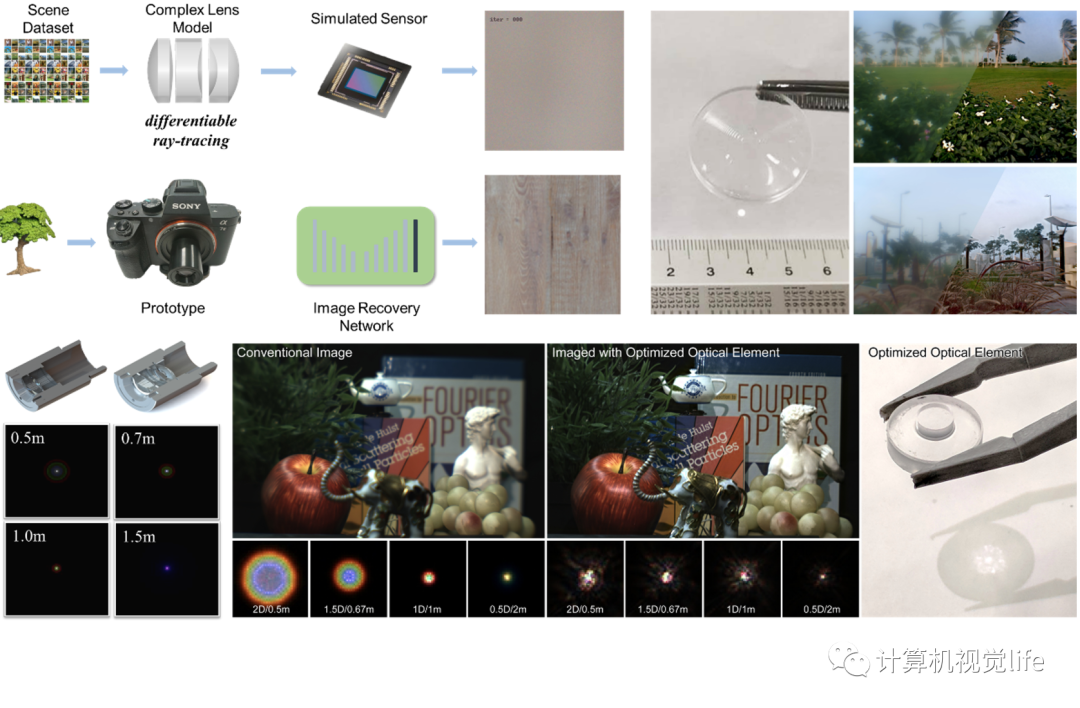

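End-to-end joint design of optics and algorithms (end-to-end camera design) is a research branch that has risen in recent years. For an imaging system, it breaks down the barrier between optical design and image post-processing and seeks the best trade-off between the optical and algorithmic parts in terms of hardware cost, manufacturability, size and weight, image quality, algorithm complexity, and special functions, thereby reaching the optimal solution under the design requirements. Breakthroughs in end-to-end joint design offer phone makers, industry, automotive, aerospace sensing, defense, and other fields a new and simpler solution. While reducing the dependence of optical design on designer experience, it optimizes image post-processing automatically at the same time, gives camera design more degrees of freedom, and provides a new approach to computational photography problems such as lightweight design and special functions. Its technical roadmap is shown in Figure 2.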

Figure 2. Technical roadmap of end-to-end joint design of optics and algorithms

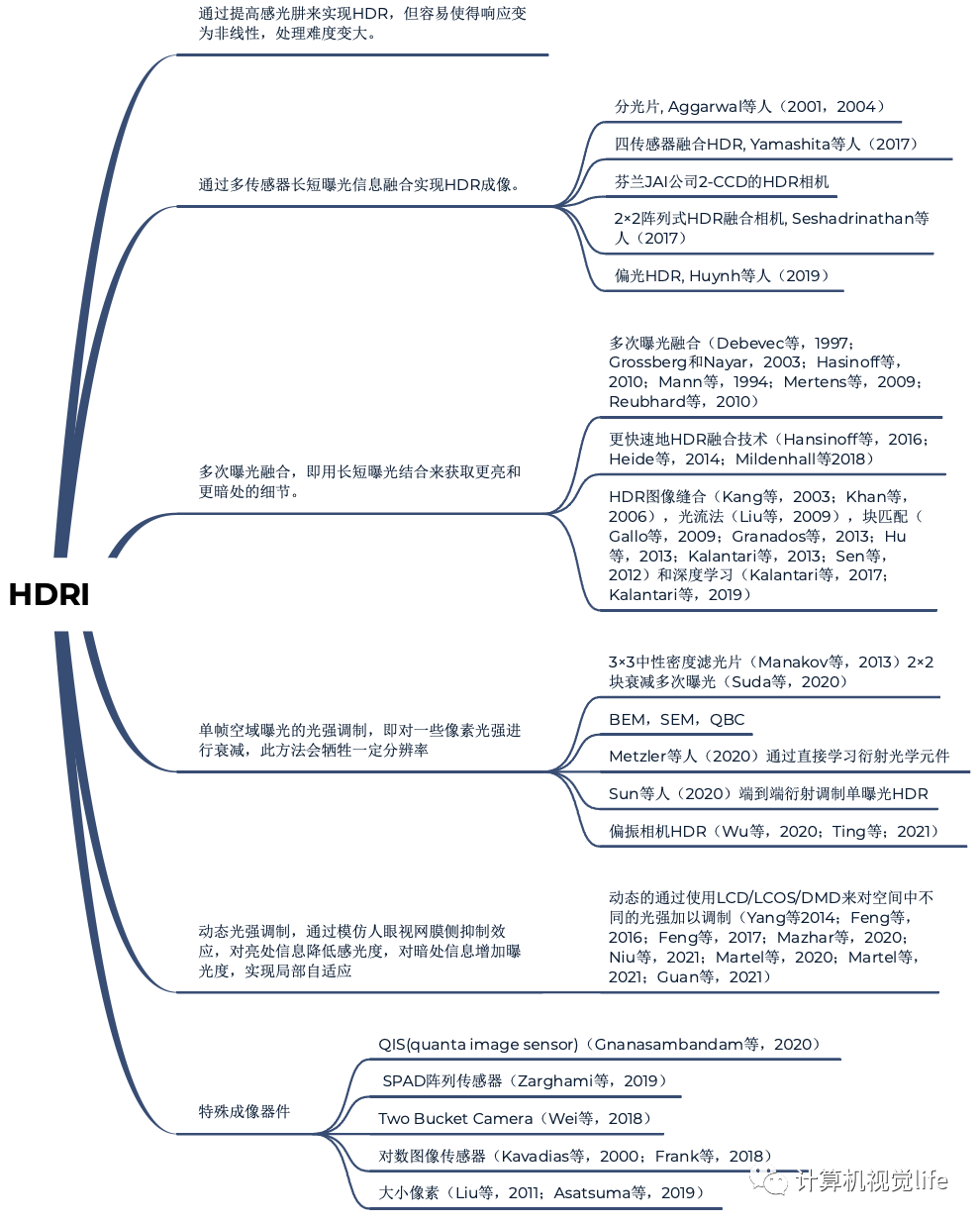

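High dynamic range (HDR) imaging, in computer graphics and photography, refers to techniques that achieve a larger exposure dynamic range (the ratio between the brightest and darkest details) than ordinary digital imaging. In photography, dynamic range is usually described as a difference in exposure value (EV): 1 EV corresponds to a factor of two in exposure and is commonly called one stop. The maximum dynamic range of natural scenes is about 22 stops, urban night scenes can reach about 40 stops, and the human eye can capture about 10 to 14 stops. HDR imaging generally means a dynamic range greater than 13 stops, or about 8000:1 (78 dB), and mainly involves acquisition, processing, storage, and display. HDR imaging aims to capture detail in both brighter and darker regions, yielding richer information and stronger visual impact. It is not only one of the core competitive features of today's phone cameras but also a basic requirement for industrial and automotive cameras. Its technical roadmap is shown in Figure 3.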
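The relationship between stops, contrast ratio, and decibels quoted above can be checked with a few lines of arithmetic. The sketch below is illustrative only; the function names are hypothetical, and it assumes the 20·log10 decibel convention commonly used for image sensors.

```python
import math

def stops_to_contrast_ratio(stops: float) -> float:
    """Each stop (1 EV) doubles the exposure, so n stops span a 2**n : 1 ratio."""
    return 2.0 ** stops

def contrast_ratio_to_db(ratio: float) -> float:
    """Dynamic range in decibels (assumed convention: 20 * log10 of the ratio)."""
    return 20.0 * math.log10(ratio)

def db_to_stops(db: float) -> float:
    """Inverse conversion: one stop is 20 * log10(2) decibels under this convention."""
    return db / (20.0 * math.log10(2.0))

# Print the conversions for the stop counts mentioned in the text.
for stops in (10, 13, 14, 22):
    ratio = stops_to_contrast_ratio(stops)
    print(f"{stops} stops -> {ratio:,.0f}:1 -> {contrast_ratio_to_db(ratio):.1f} dB")
```

Under this convention, 13 stops corresponds to 8192:1, which is where the rounded figures of 8000:1 and 78 dB come from.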

Figure 3. Technical roadmap of high dynamic range imaging

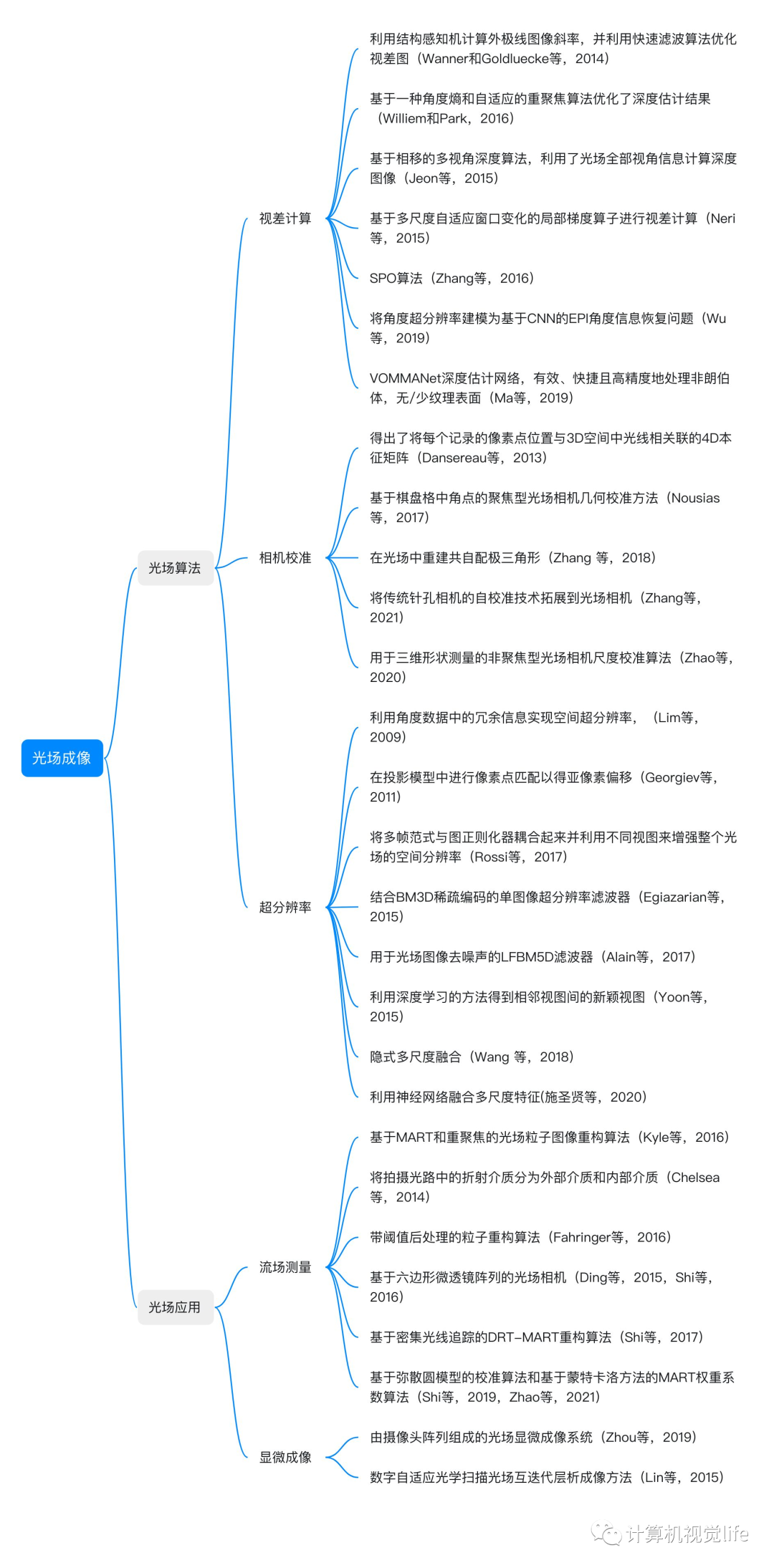

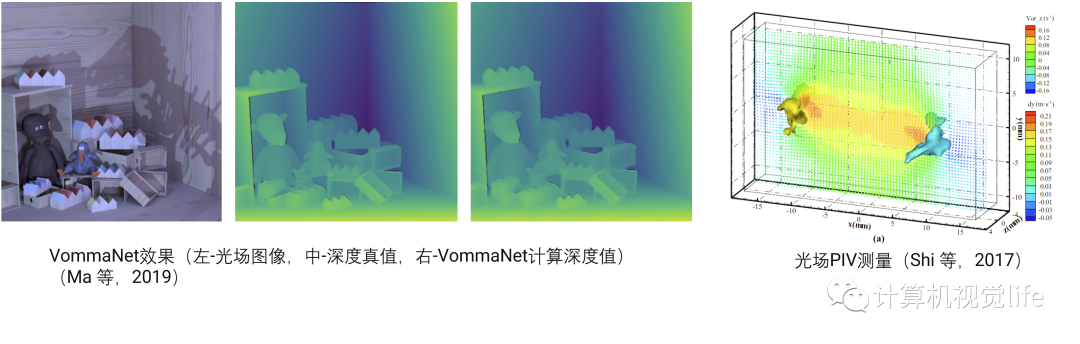

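Light field imaging (LFI) records both the spatial position and the angle of light rays, and is a new approach to 3D measurement. After years of development it has become an emerging non-contact measurement technique. Since the invention of photography, image capture has meant acquiring information in a 2D projection of the scene. A light field provides not only the 2D projection but also one more dimension: the angle of the rays arriving at that projection. Because a light field holds both the ray directions and the 2D projection of the scene, it enables additional functions. For example, the projection can be moved to a different focal distance, which lets the user freely refocus the image after capture. The viewpoint of the captured scene can also be changed. Light field imaging is now being applied in industry, virtual reality, life sciences, and 3D flow measurement, helping to quickly obtain real light field information and complex 3D spatial information. Its technical roadmap is shown in Figure 4.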
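Post-capture refocusing is often illustrated with the classic shift-and-add scheme: each sub-aperture view is shifted in proportion to its angular offset and the views are averaged. The following is a minimal sketch under stated assumptions, not the method of any particular system; the `(U, V, H, W)` array layout, the `alpha` parameter, and the synthetic point scene are all hypothetical choices made for illustration.

```python
import numpy as np

def refocus(light_field: np.ndarray, alpha: float) -> np.ndarray:
    """Shift-and-add refocusing of a 4D light field.

    light_field: sub-aperture views, shape (U, V, H, W).
    alpha: pixels of shift per unit angular offset from the central view
           (hypothetical parameter; each value selects one focal plane).
    """
    n_u, n_v, height, width = light_field.shape
    u_c, v_c = (n_u - 1) / 2.0, (n_v - 1) / 2.0
    out = np.zeros((height, width), dtype=np.float64)
    for u in range(n_u):
        for v in range(n_v):
            dy = int(round(alpha * (u - u_c)))
            dx = int(round(alpha * (v - v_c)))
            # np.roll wraps around at the borders; acceptable for a toy example.
            out += np.roll(light_field[u, v], shift=(dy, dx), axis=(0, 1))
    return out / (n_u * n_v)

def point_light_field(n_views: int = 3, size: int = 16, disparity: int = 2) -> np.ndarray:
    """Synthetic scene: one bright point that moves `disparity` pixels per view."""
    lf = np.zeros((n_views, n_views, size, size))
    c = (n_views - 1) // 2
    for u in range(n_views):
        for v in range(n_views):
            lf[u, v, size // 2 + disparity * (u - c), size // 2 + disparity * (v - c)] = 1.0
    return lf

lf = point_light_field()
in_focus = refocus(lf, alpha=-2.0)     # shifts cancel the disparity: the point is sharp
out_of_focus = refocus(lf, alpha=0.0)  # plain average: the point is spread over 9 positions
print(in_focus.max(), out_of_focus.max())
```

Sweeping `alpha` produces a focal stack, and the value of `alpha` that makes a region sharpest is related to its depth, which is one intuition behind light-field depth estimation.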

Figure 4. Technical roadmap of light field imaging

References listed in the figure

· Light field algorithms

[1]Levoy M, Zhang Z, McDowall I. Recording and controlling the 4D light field in a microscope using microlens arrays[J]. Journal of Microscopy, 2009, 235(2): 144-162.

[2]Cheng Z, Xiong Z, Chen C, et al. Light Field Super-Resolution: A Benchmark[C]//Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshops. 2019.

[3]Lim J G, Ok H W, Park B K, et al. Improving the spatial resolution based on 4D light field data[C]//2009 16th IEEE International Conference on Image Processing (ICIP). IEEE, 2009: 1173-1176.

[4]Georgiev T, Chunev G, Lumsdaine A. Superresolution with the focused plenoptic camera[C]//Computational Imaging IX. International Society for Optics and Photonics, 2011, 7873: 78730X.

[5]Alain M, Smolic A. Light field super-resolution via LFBM5D sparse coding[C]//2018 25th IEEE International Conference on Image Processing (ICIP). IEEE, 2018: 2501-2505.

[6]Rossi M, Frossard P. Graph-based light field super-resolution[C]//2017 IEEE 19th International Workshop on Multimedia Signal Processing (MMSP). IEEE, 2017: 1-6.

[7]Yoon Y, Jeon H G, Yoo D, et al. Learning a deep convolutional network for light-field image super-resolution[C]//Proceedings of the IEEE International Conference on Computer Vision Workshops. 2015: 24-32.

[8]Goldluecke B. Globally consistent depth labeling of 4D light fields[C]//Computer Vision and Pattern Recognition. IEEE, 2012: 41-48.

[9]Wanner S, Goldluecke B. Variational Light Field Analysis for Disparity Estimation and Super-Resolution[J]. IEEE Transactions on Pattern Analysis & Machine Intelligence, 2014, 36(3): 606-619.

[10]Tao M W, Hadap S, Malik J, et al. Depth from Combining Defocus and Correspondence Using Light-Field Cameras[C]//IEEE International Conference on Computer Vision. IEEE, 2013: 673-680.

[11]Jeon H G, Park J, Choe G, et al. Accurate depth map estimation from a lenslet light field camera[C]//Computer Vision and Pattern Recognition. IEEE, 2015: 1547-1555.

[12]Neri A, Carli M, Battisti F. A multi-resolution approach to depth field estimation in dense image arrays[C]//IEEE International Conference on Image Processing. IEEE, 2015: 3358-3362.

[13]Strecke M, Alperovich A, Goldluecke B. Accurate Depth and Normal Maps from Occlusion-Aware Focal Stack Symmetry[C]//Computer Vision and Pattern Recognition. IEEE, 2017: 2529-2537.

[14]Dansereau D G, Pizarro O, Williams S B. Decoding, calibration and rectification for lenselet-based plenoptic cameras[C]//Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. 2013: 1027-1034.

[15]Nousias S, Chadebecq F, Pichat J, et al. Corner-based geometric calibration of multi-focus plenoptic cameras[C]//Proceedings of the IEEE International Conference on Computer Vision. 2017: 957-965.

[16]Zhu H, Wang Q. Accurate disparity estimation in light field using ground control points[J]. Computational Visual Media, 2016, 2(2): 1-9.

[17]Zhang, S., Sheng, H., Li, C., Zhang, J. and Xiong, Z., 2016. Robust depth estimation for light field via spinning parallelogram operator. Computer Vision and Image Understanding, 145, pp.148-159.

[18]Zhang Y, Lv H, Liu Y, Wang H, Wang X, Huang Q, Xiang X, Dai Q. Light-field depth estimation via epipolar plane image analysis and locally linear embedding[J]. IEEE Transactions on Circuits and Systems for Video Technology, 2016, 27(4): 739-47.

[19]Ma H, Qian Z, Mu T, et al. Fast and Accurate 3D Measurement Based on Light-Field Camera and Deep Learning[J]. Sensors, 2019, 19(20): 4399.

· Light field applications

[1]Lin X, Wu J, Zheng G, Dai Q. 2015. Camera array based light field microscopy. Biomedical Optics Express, 6(9): 3179-89.

[2]Shi, S., Ding, J., New, T.H. and Soria, J., 2017. Light-field camera-based 3D volumetric particle image velocimetry with dense ray tracing reconstruction technique. Experiments in Fluids, 58(7), pp.1-16.

[3]Shi, S., Wang, J., Ding, J., Zhao, Z. and New, T.H., 2016. Parametric study on light field volumetric particle image velocimetry. Flow Measurement and Instrumentation, 49, pp.70-88.

[4]Shi, S., Ding, J., Atkinson, C., Soria, J. and New, T.H., 2018. A detailed comparison of single-camera light-field PIV and tomographic PIV. Experiments in Fluids, 59(3), pp.1-13.

[5]Shi, S., Ding, J., New, T.H., Liu, Y. and Zhang, H., 2019. Volumetric calibration enhancements for single-camera light-field PIV. Experiments in Fluids, 60(1), p.21.

Spectral imaging grew out of conventional color imaging and captures the spectral information of a target. Every object has its own distinctive spectral signature, much as every person has different fingerprints, so the spectrum is regarded as "fingerprint" information for target identification. Acquiring spectral images of the target in contiguous narrow bands yields a data cube with spatial and spectral dimensions, which greatly enhances the ability to identify and analyze targets. Spectral imaging serves as a powerful tool for scientific research and engineering applications and is already widely used in military, industrial, and civilian fields, playing an important role in promoting socioeconomic development and safeguarding national security. For example, spectral imaging identifies ground features such as rivers, sand and soil, vegetation, and rocks and minerals well, and therefore has important applications in precision agriculture, environmental monitoring, resource exploration, food safety, and many other areas. In particular, spectral imaging is also expected to be used in end devices such as mobile phones and self-driving cars. Spectral imaging is currently one of the hot research directions in computer vision and graphics.
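To make the "spectral fingerprint" idea concrete, the sketch below compares every pixel of a data cube against a reference spectrum using the spectral angle, a common similarity measure in hyperspectral analysis. It is an illustrative assumption rather than a method named in this article; the `(H, W, B)` cube layout and the toy spectra are hypothetical.

```python
import numpy as np

def spectral_angle(cube: np.ndarray, reference: np.ndarray) -> np.ndarray:
    """Angle (radians) between each pixel spectrum and a reference spectrum.

    cube: data cube of shape (H, W, B), with B spectral bands per pixel.
    reference: reference spectrum of shape (B,).
    A small angle means the pixel's spectral shape matches the reference,
    independent of overall brightness.
    """
    dot = cube @ reference
    norms = np.linalg.norm(cube, axis=-1) * np.linalg.norm(reference)
    cos = np.clip(dot / np.maximum(norms, 1e-12), -1.0, 1.0)
    return np.arccos(cos)

# Toy cube with two pixels and three bands (values are made up for illustration).
reference = np.array([1.0, 0.0, 0.0])
cube = np.array([[[2.0, 0.0, 0.0],    # same spectral shape as the reference, brighter
                  [0.0, 5.0, 0.0]]])  # a completely different spectral shape
angles = spectral_angle(cube, reference)
print(angles)
```

Thresholding the angle map gives a simple per-pixel material classifier, which hints at why adding the spectral dimension helps target identification.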

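Lensless imaging offers a new way to further shrink the size of imaging systems (Boominathan et al., 2022). Conventional imaging systems rely on point-to-point imaging, and their minimum size is still bounded by core parameters such as the focal length, aperture, and field of view of the lens. Lensless imaging abandons the point-to-point mapping of conventional lenses: each point in object space is projected to a specific pattern in image space, and the patterns from different object points superimpose on the image plane, producing an encoded measurement that the human eye cannot interpret but from which a computational algorithm can decode and recover the image. It is highly competitive in compactness, and with the development of decoding algorithms its resolution has improved greatly. It therefore has great potential in wearable cameras, portable microscopes, endoscopes, the Internet of Things, and similar applications. In addition, its inherent optical encryption can effectively protect sensitive biometric features of the subject, which is also significant for privacy-preserving AI imaging.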
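The encode-then-decode idea can be sketched with a toy model. The example below assumes a shift-invariant system, so the measurement is the scene convolved with a single point spread function, and decodes it with Tikhonov-regularized inverse filtering. Both the random binary mask pattern and the decoder are illustrative assumptions; practical lensless cameras use calibrated PSFs and more elaborate reconstruction algorithms.

```python
import numpy as np

def simulate_measurement(scene: np.ndarray, psf: np.ndarray) -> np.ndarray:
    """Encode: every scene point casts a copy of the PSF; copies add up on the sensor.

    Modeled as a circular convolution via the FFT (shift-invariance is assumed).
    """
    return np.real(np.fft.ifft2(np.fft.fft2(scene) * np.fft.fft2(psf)))

def tikhonov_reconstruct(measurement: np.ndarray, psf: np.ndarray, reg: float = 1e-3) -> np.ndarray:
    """Decode: regularized inverse filter in the Fourier domain.

    `reg` keeps frequencies where the PSF response is weak from blowing up.
    """
    h = np.fft.fft2(psf)
    m = np.fft.fft2(measurement)
    x = np.conj(h) * m / (np.abs(h) ** 2 + reg)
    return np.real(np.fft.ifft2(x))

rng = np.random.default_rng(0)
# Hypothetical pseudo-random binary pattern standing in for the mask's PSF.
psf = (rng.random((64, 64)) < 0.05).astype(float)
psf /= psf.sum()

scene = np.zeros((64, 64))
scene[20:30, 25:40] = 1.0  # a bright rectangle as the test object

meas = simulate_measurement(scene, psf)                # looks like noise to the eye
recon = tikhonov_reconstruct(meas, psf, reg=1e-6)      # noise-free case, small reg
rel_err = np.linalg.norm(recon - scene) / np.linalg.norm(scene)
print(f"relative reconstruction error: {rel_err:.4f}")
```

With real sensor noise, `reg` would need to be larger, trading resolution for noise suppression; that trade-off is one reason reconstruction quality depends so strongly on the decoding algorithm.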

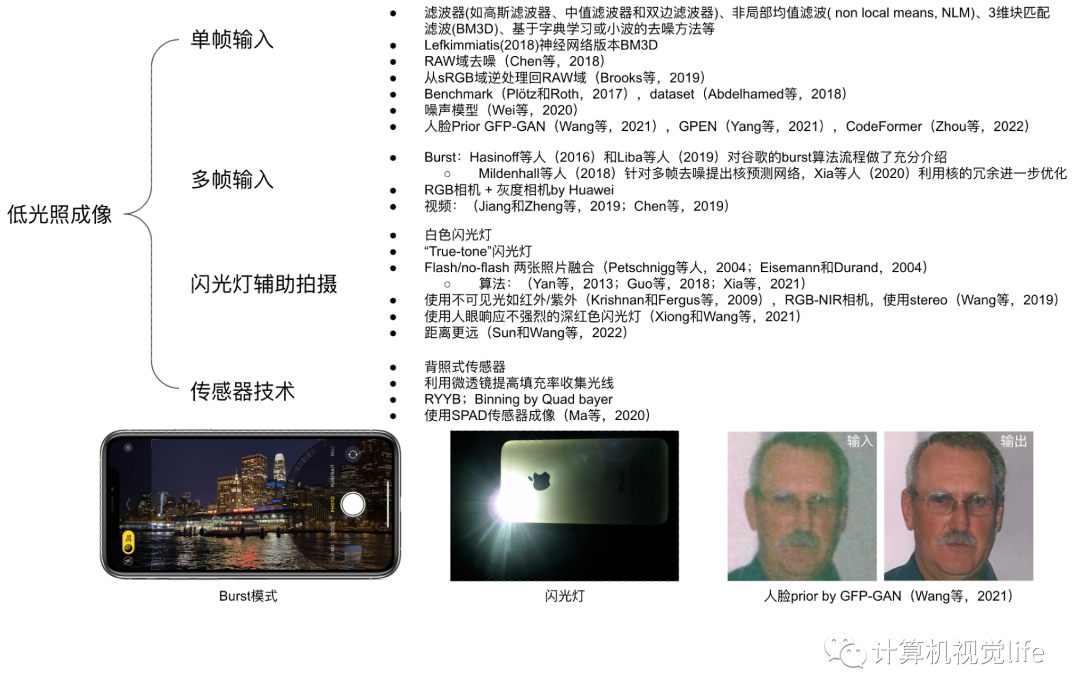

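Low-light imaging is also one of the research hotspots in computational photography. Phone photography has become one of the most common ways people record their lives, the camera is a highlight of every product launch, and night mode has become a technical high ground that phone makers compete for. Cameras on different phones differ little in bright daylight, but the gap is obvious in weak light at night. The reason is that imaging depends on the lens collecting photons from the scene, and the sensor's chain of photoelectric conversion, gain, and analog-to-digital conversion inevitably adds noise. In daytime, light is abundant, the signal-to-noise ratio (SNR) is high, and image quality is high; at night, light is faint, the SNR drops by several orders of magnitude, and image quality is low. Some phones ship a night mode built on computational photography algorithms, such as denoising based on single frames, multiple frames, or RYYB arrays, which effectively improves photo quality, but there is still much room for improvement. By input, low-light imaging can be divided into single-frame input, multi-frame input (burst imaging), flash-assisted capture, and sensor technology. The technical roadmap is shown in Figure 5.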
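The benefit of multi-frame input can be illustrated with a simple noise model. The sketch below assumes Poisson photon shot noise plus Gaussian read noise per frame and perfectly aligned frames of a static scene; the function names and the numbers are hypothetical, and real burst pipelines must also handle alignment and motion.

```python
import numpy as np

def single_frame_snr(signal_e: float, read_noise_e: float) -> float:
    """SNR of one frame: signal over the combined shot and read noise (in electrons)."""
    return signal_e / np.sqrt(signal_e + read_noise_e ** 2)

def burst_average_snr(signal_e: float, read_noise_e: float, n_frames: int) -> float:
    """Averaging N independent frames improves the SNR by a factor of sqrt(N)."""
    return np.sqrt(n_frames) * single_frame_snr(signal_e, read_noise_e)

def simulate_burst_snr(signal_e: float, read_noise_e: float, n_frames: int,
                       n_pixels: int = 100_000, seed: int = 0) -> float:
    """Monte Carlo check: simulate noisy frames, average them, and measure the SNR."""
    rng = np.random.default_rng(seed)
    shot = rng.poisson(signal_e, size=(n_frames, n_pixels))
    read = rng.normal(0.0, read_noise_e, size=(n_frames, n_pixels))
    merged = (shot + read).mean(axis=0)
    return merged.mean() / merged.std()

# A dim pixel: 4 photoelectrons per frame with 2 electrons of read noise.
print("1 frame  :", single_frame_snr(4.0, 2.0))
print("16 frames:", burst_average_snr(4.0, 2.0, 16))
print("simulated:", simulate_burst_snr(4.0, 2.0, 16))
```

The square-root scaling also shows the limit of the approach: each further doubling of the SNR requires four times as many frames.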

Figure 5. Technical roadmap of low-light imaging

References listed in the figure

· Single-frame input

[1]Lefkimmiatis, S., 2018. Universal denoising networks: a novel CNN architecture for image denoising. In Proceedings of the IEEE conference on computer vision and pattern recognition (pp. 3204-3213).

[2]Chen, C., Chen, Q., Xu, J. and Koltun, V., 2018. Learning to see in the dark. In Proceedings of the IEEE conference on computer vision and pattern recognition (pp. 3291-3300).

[3]Brooks, T., Mildenhall, B., Xue, T., Chen, J., Sharlet, D. and Barron, J.T., 2019. Unprocessing images for learned raw denoising. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (pp. 11036-11045).

[4]Plotz, T. and Roth, S., 2017. Benchmarking denoising algorithms with real photographs. In Proceedings of the IEEE conference on computer vision and pattern recognition (pp. 1586-1595).

[5]Abdelhamed, A., Lin, S. and Brown, M.S., 2018. A high-quality denoising dataset for smartphone cameras. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (pp. 1692-1700).

[6]Wei, K., Fu, Y., Yang, J. and Huang, H., 2020. A physics-based noise formation model for extreme low-light raw denoising. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (pp. 2758-2767).

[7]Wang, X., Li, Y., Zhang, H. and Shan, Y., 2021. Towards real-world blind face restoration with generative facial prior. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (pp. 9168-9178).

[8]Yang, T., Ren, P., Xie, X. and Zhang, L., 2021. Gan prior embedded network for blind face restoration in the wild. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (pp. 672-681).

[9]Zhou, S., Chan, K.C., Li, C. and Loy, C.C., 2022. Towards Robust Blind Face Restoration with Codebook Lookup Transformer. arXiv preprint arXiv:2206.11253.

· Multi-frame input

[1]Hasinoff, S.W., Sharlet, D., Geiss, R., Adams, A., Barron, J.T., Kainz, F., Chen, J. and Levoy, M., 2016. Burst photography for high dynamic range and low-light imaging on mobile cameras. ACM Transactions on Graphics (ToG), 35(6), pp.1-12.

[2]Liba, O., Murthy, K., Tsai, Y.T., Brooks, T., Xue, T., Karnad, N., He, Q., Barron, J.T., Sharlet, D., Geiss, R. and Hasinoff, S.W., 2019. Handheld mobile photography in very low light. ACM Trans. Graph., 38(6), pp.164-1.

[3]Mildenhall, B., Barron, J.T., Chen, J., Sharlet, D., Ng, R. and Carroll, R., 2018. Burst denoising with kernel prediction networks. In Proceedings of the IEEE conference on computer vision and pattern recognition (pp. 2502-2510).

[4]Xia, Z., Perazzi, F., Gharbi, M., Sunkavalli, K. and Chakrabarti, A., 2020. Basis prediction networks for effective burst denoising with large kernels. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (pp. 11844-11853).

[5]Jiang, H. and Zheng, Y., 2019. Learning to see moving objects in the dark. In Proceedings of the IEEE/CVF International Conference on Computer Vision (pp. 7324-7333).

[6]Chen, C., Chen, Q., Do, M.N. and Koltun, V., 2019. Seeing motion in the dark. In Proceedings of the IEEE/CVF International Conference on Computer Vision (pp. 3185-3194).

· Flash

[1]Eisemann, E. and Durand, F., 2004. Flash photography enhancement via intrinsic relighting. ACM transactions on graphics (TOG), 23(3), pp.673-678.

[2]Petschnigg, G., Szeliski, R., Agrawala, M., Cohen, M., Hoppe, H. and Toyama, K., 2004. Digital photography with flash and no-flash image pairs. ACM transactions on graphics (TOG), 23(3), pp.664-672.

[3]Yan, Q., Shen, X., Xu, L., Zhuo, S., Zhang, X., Shen, L. and Jia, J., 2013. Cross-field joint image restoration via scale map. In Proceedings of the IEEE International Conference on Computer Vision (pp. 1537-1544).

[4]Guo, X., Li, Y., Ma, J. and Ling, H., 2018. Mutually guided image filtering. IEEE transactions on pattern analysis and machine intelligence, 42(3), pp.694-707.

[5]Xia, Z., Gharbi, M., Perazzi, F., Sunkavalli, K. and Chakrabarti, A., 2021. Deep Denoising of Flash and No-Flash Pairs for Photography in Low-Light Environments. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (pp. 2063-2072).

[6]Krishnan, D. and Fergus, R., 2009. Dark flash photography. ACM Trans. Graph., 28(3), p.96.

[7]Wang, J., Xue, T., Barron, J.T. and Chen, J., 2019, May. Stereoscopic dark flash for low-light photography. In 2019 IEEE International Conference on Computational Photography (ICCP) (pp. 1-10). IEEE.

[8]Xiong, J., Wang, J., Heidrich, W. and Nayar, S., 2021. Seeing in extra darkness using a deep-red flash. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (pp. 10000-10009).

[9]Sun, Z., Wang, J., Wu, Y. and Nayar, S., 2022. Seeing Far in the Dark with Patterned Flash. In European Conference on Computer Vision. Springer.

· Sensors

[1]Ma, S., Gupta, S., Ulku, A.C., Bruschini, C., Charbon, E. and Gupta, M., 2020. Quanta burst photography. ACM Transactions on Graphics (TOG), 39(4), pp.79-1.

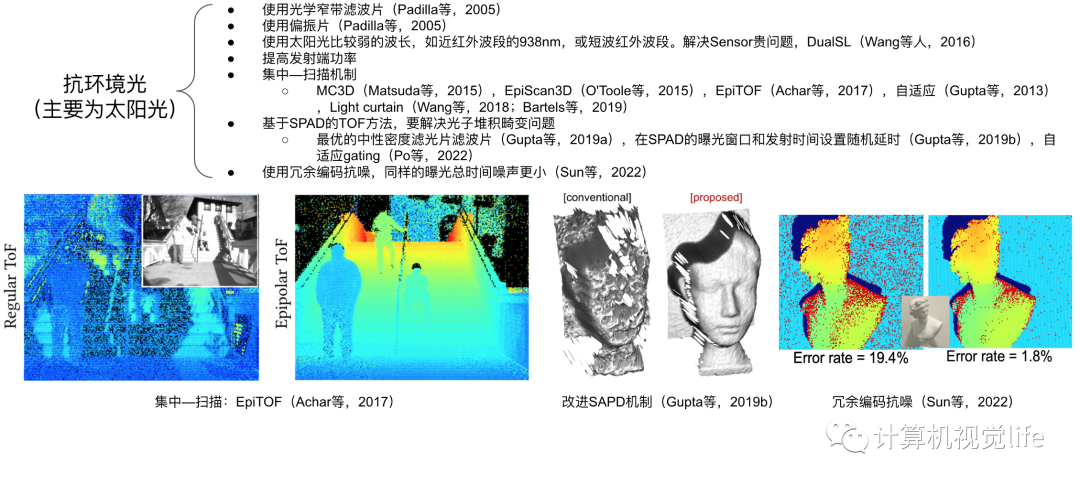

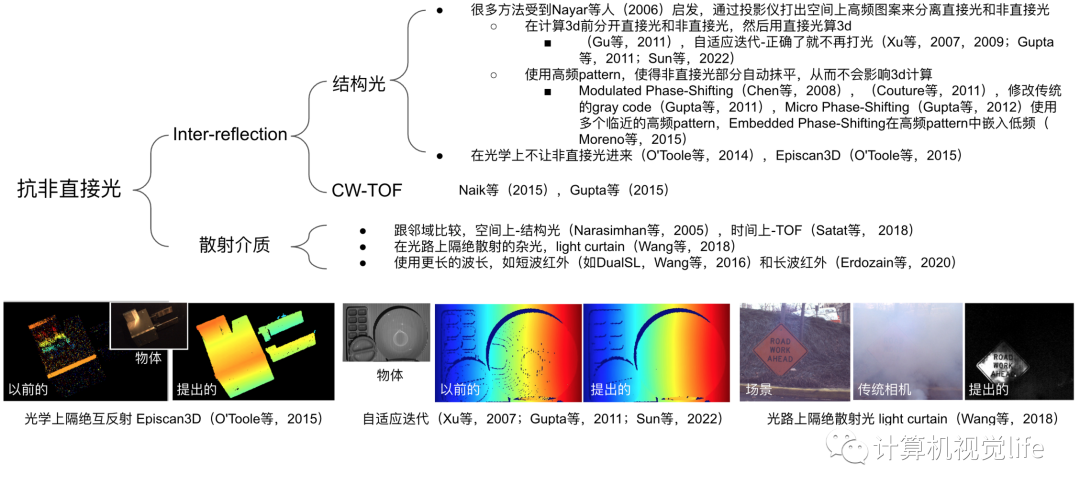

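Active 3D imaging aims to acquire a point cloud of an object or scene. Passive methods, represented by binocular stereo matching, struggle to recover depth in textureless regions and regions with repetitive texture. Active illumination methods are generally more robust, work in the dark, and yield dense, accurate point clouds. According to the property of light they exploit, active methods fall into three groups: those based on the rectilinear propagation of light, such as structured light; those based on the speed of light, such as time-of-flight (ToF), including continuous-wave (indirect) ToF (iToF) and direct ToF (dToF); and those based on the wave nature of light, such as interferometry. Active 3D imaging based on the first two is already widely used in everyday life. Although active methods improve accuracy by projecting light, they also suffer from problems caused by ambient light (mainly sunlight) and multipath interference (also called indirect light interference). Research in recent years has made substantial progress on both, as shown in Figures 6 and 7.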
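The first two families reduce to short textbook formulas, sketched below under idealized assumptions: no ambient light, no multipath interference, and a rectified projector-camera pair for triangulation. The function and parameter names are hypothetical.

```python
import math

SPEED_OF_LIGHT_M_S = 299_792_458.0

def dtof_depth(round_trip_time_s: float) -> float:
    """Direct ToF: light travels to the object and back, so depth is c * t / 2."""
    return SPEED_OF_LIGHT_M_S * round_trip_time_s / 2.0

def itof_depth(phase_rad: float, mod_freq_hz: float) -> float:
    """Continuous-wave (indirect) ToF: depth from the phase delay of the modulated signal."""
    return SPEED_OF_LIGHT_M_S * phase_rad / (4.0 * math.pi * mod_freq_hz)

def itof_unambiguous_range(mod_freq_hz: float) -> float:
    """Beyond this depth the phase wraps past 2*pi and the measurement becomes ambiguous."""
    return SPEED_OF_LIGHT_M_S / (2.0 * mod_freq_hz)

def triangulation_depth(focal_px: float, baseline_m: float, disparity_px: float) -> float:
    """Structured light: depth from the pixel offset between projected and observed pattern."""
    return focal_px * baseline_m / disparity_px

print("dToF, 10 ns round trip   :", dtof_depth(10e-9), "m")
print("iToF, pi rad at 20 MHz   :", itof_depth(math.pi, 20e6), "m")
print("iToF range at 20 MHz     :", itof_unambiguous_range(20e6), "m")
print("triangulation, 50 px     :", triangulation_depth(1000.0, 0.1, 50.0), "m")
```

These formulas assume the sensor sees only the direct return. Sunlight adds photon noise to the measured time or phase, and indirect paths mix in longer travel distances, which is why ambient light and multipath interference degrade active depth sensing.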

Figure 6. Technical roadmap for ambient light rejection

References listed in the figure

[1]Padilla, D.D. and Davidson, P., 2005. Advancements in sensing and perception using structured lighting techniques: An ldrd final report.

[2]Wang, J., Sankaranarayanan, A.C., Gupta, M. and Narasimhan, S.G., 2016, October. Dual structured light 3d using a 1d sensor. In European Conference on Computer Vision (pp. 383-398). Springer.

[3]Matsuda, N., Cossairt, O. and Gupta, M., 2015, April. Mc3d: Motion contrast 3d scanning. In 2015 IEEE International Conference on Computational Photography (ICCP) (pp. 1-10). IEEE.

[4]O'Toole, M., Achar, S., Narasimhan, S.G. and Kutulakos, K.N., 2015. Homogeneous codes for energy-efficient illumination and imaging. ACM Transactions on Graphics (ToG), 34(4), pp.1-13.

[5]Supreeth Achar, Joseph R. Bartels, William L. ‘Red’ Whittaker, Kiriakos N. Kutulakos, Srinivasa G. Narasimhan. 2017, "Epipolar Time-of-Flight Imaging", ACM SIGGRAPH

[6]Gupta, M., Yin, Q. and Nayar, S.K., 2013. Structured light in sunlight. In Proceedings of the IEEE International Conference on Computer Vision (pp. 545-552).

[7]Wang, J., Bartels, J., Whittaker, W., Sankaranarayanan, A.C. and Narasimhan, S.G., 2018. Programmable triangulation light curtains. In Proceedings of the European Conference on Computer Vision (ECCV) (pp. 19-34).

[8]Bartels, J.R., Wang, J., Whittaker, W. and Narasimhan, S.G., 2019. Agile depth sensing using triangulation light curtains. In Proceedings of the IEEE/CVF International Conference on Computer Vision (pp. 7900-7908).

[9]Gupta, A., Ingle, A., Velten, A. and Gupta, M., 2019. Photon-flooded single-photon 3D cameras. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (pp. 6770-6779).

[10]Gupta, A., Ingle, A. and Gupta, M., 2019. Asynchronous single-photon 3D imaging. In Proceedings of the IEEE/CVF International Conference on Computer Vision (pp. 7909-7918).

[11]Po, R., Pediredla, A. and Gkioulekas, I., 2022. Adaptive Gating for Single-Photon 3D Imaging. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (pp. 16354-16363).

[12]Sun, Z., Zhang, Y., Wu, Y., Huo, D., Qian, Y. and Wang, J., 2022. Structured Light with Redundancy Codes. arXiv preprint arXiv:2206.09243.

Figure 7. Technical roadmap for indirect light rejection

References listed in the figure

[1]Nayar, S.K., Krishnan, G., Grossberg, M.D. and Raskar, R., 2006. Fast separation of direct and global components of a scene using high frequency illumination. In ACM SIGGRAPH 2006 Papers (pp. 935-944).

[2]Gu, J., Kobayashi, T., Gupta, M. and Nayar, S.K., 2011, November. Multiplexed illumination for scene recovery in the presence of global illumination. In 2011 International Conference on Computer Vision (pp. 691-698). IEEE.

[3]Xu, Y. and Aliaga, D.G., 2007, May. Robust pixel classification for 3d modeling with structured light. In Proceedings of Graphics Interface 2007 (pp. 233-240).

[4]Xu, Y. and Aliaga, D.G., 2009. An adaptive correspondence algorithm for modeling scenes with strong interreflections. IEEE Transactions on Visualization and Computer Graphics, 15(3), pp.465-480.

[5]Gupta, M., Agrawal, A., Veeraraghavan, A. and Narasimhan, S.G., 2011, June. Structured light 3D scanning in the presence of global illumination. In CVPR 2011 (pp. 713-720). IEEE.

[6]Sun, Z., Zhang, Y., Wu, Y., Huo, D., Qian, Y. and Wang, J., 2022. Structured Light with Redundancy Codes. arXiv preprint arXiv:2206.09243.

[7]Chen, T., Seidel, H.P. and Lensch, H.P., 2008, June. Modulated phase-shifting for 3D scanning. In 2008 IEEE Conference on Computer Vision and Pattern Recognition (pp. 1-8). IEEE.

[8]Couture, V., Martin, N. and Roy, S., 2011, November. Unstructured light scanning to overcome interreflections. In 2011 International Conference on Computer Vision (pp. 1895-1902). IEEE.

[9]Gupta, M. and Nayar, S.K., 2012, June. Micro phase shifting. In 2012 IEEE Conference on Computer Vision and Pattern Recognition (pp. 813-820). IEEE.

[10]Moreno, D., Son, K. and Taubin, G., 2015. Embedded phase shifting: Robust phase shifting with embedded signals. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (pp. 2301-2309).

[11]O'Toole, M., Mather, J. and Kutulakos, K.N., 2014. 3d shape and indirect appearance by structured light transport. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (pp. 3246-3253).

[12]O'Toole, M., Achar, S., Narasimhan, S.G. and Kutulakos, K.N., 2015. Homogeneous codes for energy-efficient illumination and imaging. ACM Transactions on Graphics (ToG), 34(4), pp.1-13.

[13]Wang, J., Bartels, J., Whittaker, W., Sankaranarayanan, A.C. and Narasimhan, S.G., 2018. Programmable triangulation light curtains. In Proceedings of the European Conference on Computer Vision (ECCV) (pp. 19-34).

[14]Naik, N., Kadambi, A., Rhemann, C., Izadi, S., Raskar, R. and Bing Kang, S., 2015. A light transport model for mitigating multipath interference in time-of-flight sensors. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (pp. 73-81).

[15]Gupta, M., Nayar, S.K., Hullin, M.B. and Martin, J., 2015. Phasor imaging: A generalization of correlation-based time-of-flight imaging. ACM Transactions on Graphics (ToG), 34(5), pp.1-18.

[16]Narasimhan, S.G., Nayar, S.K., Sun, B. and Koppal, S.J., 2005, October. Structured light in scattering media. In Tenth IEEE International Conference on Computer Vision (ICCV'05) Volume 1 (Vol. 1, pp. 420-427). IEEE.

[17]Satat, G., Tancik, M. and Raskar, R., 2018, May. Towards photography through realistic fog. In 2018 IEEE International Conference on Computational Photography (ICCP) (pp. 1-10). IEEE.

[18]Wang, J., Sankaranarayanan, A.C., Gupta, M. and Narasimhan, S.G., 2016, October. Dual structured light 3d using a 1d sensor. In European Conference on Computer Vision (pp. 383-398). Springer.

[19]Erdozain, J., Ichimaru, K., Maeda, T., Kawasaki, H., Raskar, R. and Kadambi, A., 2020, October. 3d Imaging For Thermal Cameras Using Structured Light. In 2020 IEEE International Conference on Image Processing (ICIP) (pp. 2795-2799). IEEE.

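Computational photography is a branch of computational imaging that grew out of traditional photography. Traditional photography focuses on imaging better with optical devices, as in the lens research of camera makers such as Canon and Sony; computational photography, by contrast, places more emphasis on capturing images by means of digital computation. Over the past 10 years, with the rapid growth of computing power on mobile devices, phone photography has become the main direction of computational photography research: how to render the image that best satisfies the user with reasonable computing resources when the physical size of the optics and the imaging quality are constrained. Computational photography has advanced considerably in recent years, and the range of problems it studies has also expanded, for example to night-sky photography, portrait relighting, and automatic photo enhancement. Among the relevant algorithms, the report focuses on auto white balance, autofocus, synthetic depth-of-field simulation, and burst photography. Owing to space constraints, the report covers only research whose goal is to faithfully recover the real information of the captured scene.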

Reviewing editor: Li Qian (李倩)
