I. Overview


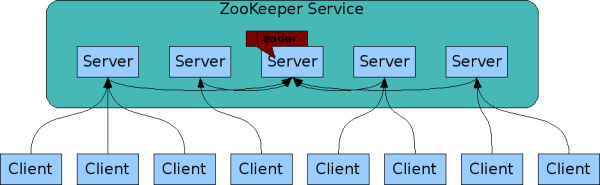

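Apache ZooKeeper is a centralized service for maintaining configuration information, naming, providing distributed synchronization, and providing group services. The ZooKeeper project develops and maintains an open-source server that enables highly reliable distributed coordination. It can also be thought of as a distributed database with an unusual structure: its data is organized as a tree. Official documentation: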

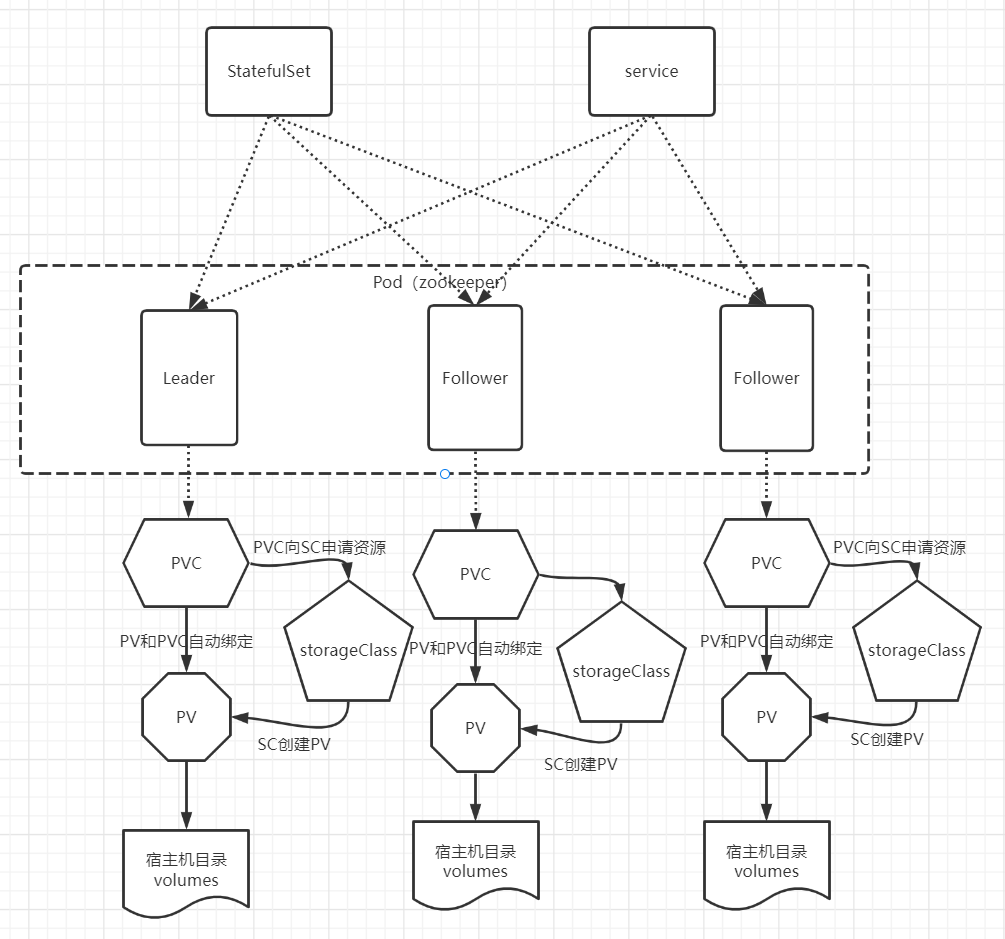

II. Deploying ZooKeeper on k8s

1) Add the repository

Chart package location:

    helm repo add bitnami https://charts.bitnami.com/bitnami
    helm pull bitnami/zookeeper --version 10.2.1
    tar -xf zookeeper-10.2.1.tgz

2) Modify the configuration

- Modify zookeeper/values.yaml

    image:
      registry: myharbor.com
      repository: bigdata/zookeeper
      tag: 3.8.0-debian-11-r36
    ...
    replicaCount: 3
    ...
    service:
      type: NodePort
      nodePorts:
        # The default NodePort range is 30000-32767
        client: "32181"
        tls: "32182"
    ...
    persistence:
      storageClass: "zookeeper-local-storage"
      size: "10Gi"
      # These directories must be created on the hosts in advance
      local:
        - name: zookeeper-0
          host: "local-168-182-110"
          path: "/opt/bigdata/servers/zookeeper/data/data1"
        - name: zookeeper-1
          host: "local-168-182-111"
          path: "/opt/bigdata/servers/zookeeper/data/data1"
        - name: zookeeper-2
          host: "local-168-182-112"
          path: "/opt/bigdata/servers/zookeeper/data/data1"
    ...
    # Enable Prometheus to access ZooKeeper metrics endpoint
    metrics:
      enabled: true
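Because the Kubernetes default NodePort range is 30000-32767, it can help to sanity-check the chosen ports before installing. The following is a minimal sketch; the function name `check_node_port` and its messages are illustrative and not part of the chart.

```shell
# Sketch: check that a NodePort lies inside the default 30000-32767 range.
# check_node_port is a hypothetical helper, not something the chart provides.
check_node_port() {
  port="$1"
  # Reject empty or non-numeric input first.
  case "$port" in
    ''|*[!0-9]*) echo "invalid: $port"; return 1 ;;
  esac
  if [ "$port" -ge 30000 ] && [ "$port" -le 32767 ]; then
    echo "ok: $port"
  else
    echo "out of range: $port"
    return 1
  fi
}

# The client and tls ports from values.yaml.
for p in 32181 32182; do
  check_node_port "$p"
done
```

If the cluster was started with a custom `--service-node-port-range`, the bounds in the sketch would need to change accordingly.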

- Add zookeeper/templates/pv.yaml

    {{- range .Values.persistence.local }}
    ---
    apiVersion: v1
    kind: PersistentVolume
    metadata:
      name: {{ .name }}
      labels:
        name: {{ .name }}
    spec:
      storageClassName: {{ $.Values.persistence.storageClass }}
      capacity:
        storage: {{ $.Values.persistence.size }}
      accessModes:
        - ReadWriteOnce
      local:
        path: {{ .path }}
      nodeAffinity:
        required:
          nodeSelectorTerms:
            - matchExpressions:
                - key: kubernetes.io/hostname
                  operator: In
                  values:
                    - {{ .host }}
    ---
    {{- end }}
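Each PersistentVolume above is a `local` volume, so the path it names has to exist on the matching host before the pod can start. The sketch below only prints the commands that would create those directories; `print_mkdir_cmds` is a hypothetical helper, and running the printed commands assumes ssh access to each host.

```shell
# Sketch: print (not run) the commands that create the local PV directories.
# print_mkdir_cmds is a hypothetical helper; ssh access to the hosts is assumed.
print_mkdir_cmds() {
  path="$1"; shift
  for host in "$@"; do
    echo "ssh $host mkdir -p $path"
  done
}

# Hosts and path taken from persistence.local in values.yaml.
print_mkdir_cmds /opt/bigdata/servers/zookeeper/data/data1 \
  local-168-182-110 local-168-182-111 local-168-182-112
```

Printing first makes it easy to review the list; piping the output to `sh` would then execute it.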

- Add zookeeper/templates/storage-class.yaml

    kind: StorageClass
    apiVersion: storage.k8s.io/v1
    metadata:
      name: {{ .Values.persistence.storageClass }}
    provisioner: kubernetes.io/no-provisioner

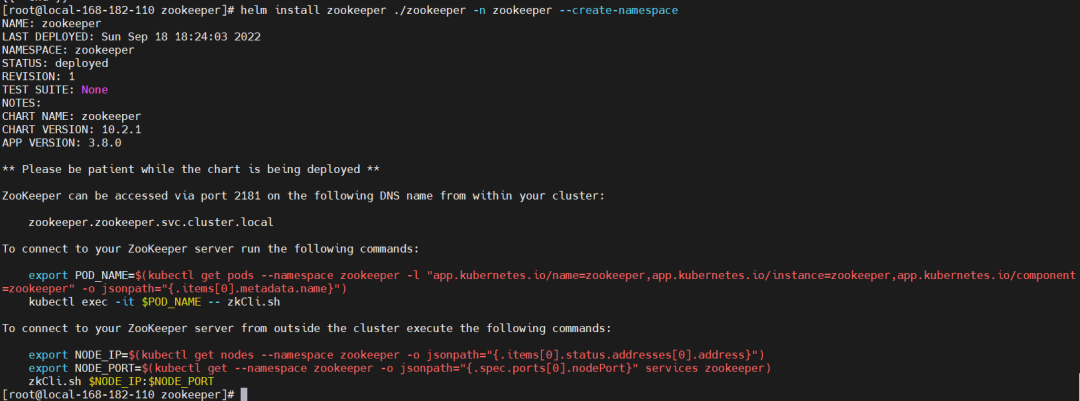

3) Install

    # Prepare the image first
    docker pull docker.io/bitnami/zookeeper:3.8.0-debian-11-r36
    docker tag docker.io/bitnami/zookeeper:3.8.0-debian-11-r36 myharbor.com/bigdata/zookeeper:3.8.0-debian-11-r36
    docker push myharbor.com/bigdata/zookeeper:3.8.0-debian-11-r36

    # Install
    helm install zookeeper ./zookeeper -n zookeeper --create-namespace

NOTES

    NAME: zookeeper
    LAST DEPLOYED: Sun Sep 18 18:03 2022
    NAMESPACE: zookeeper
    STATUS: deployed
    REVISION: 1
    TEST SUITE: None
    NOTES:
    CHART NAME: zookeeper
    CHART VERSION: 10.2.1
    APP VERSION: 3.8.0

    ** Please be patient while the chart is being deployed **

    ZooKeeper can be accessed via port 2181 on the following DNS name from within your cluster:

        zookeeper.zookeeper.svc.cluster.local

    To connect to your ZooKeeper server run the following commands:

        export POD_NAME=$(kubectl get pods --namespace zookeeper -l "app.kubernetes.io/name=zookeeper,app.kubernetes.io/instance=zookeeper,app.kubernetes.io/component=zookeeper" -o jsonpath="{.items[0].metadata.name}")
        kubectl exec -it $POD_NAME -- zkCli.sh

    To connect to your ZooKeeper server from outside the cluster execute the following commands:

        export NODE_IP=$(kubectl get nodes --namespace zookeeper -o jsonpath="{.items[0].status.addresses[0].address}")
        export NODE_PORT=$(kubectl get --namespace zookeeper -o jsonpath="{.spec.ports[0].nodePort}" services zookeeper)
        zkCli.sh $NODE_IP:$NODE_PORT

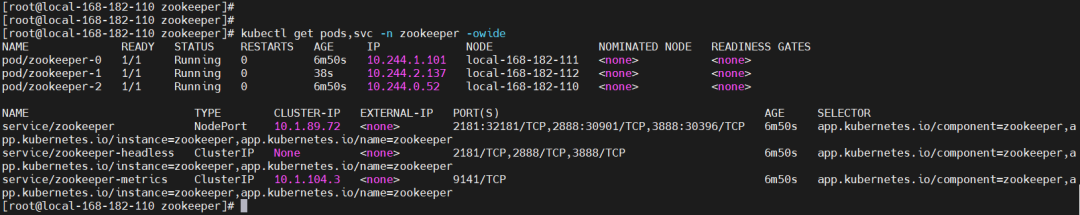

Check the pod status

    kubectl get pods,svc -n zookeeper -o wide

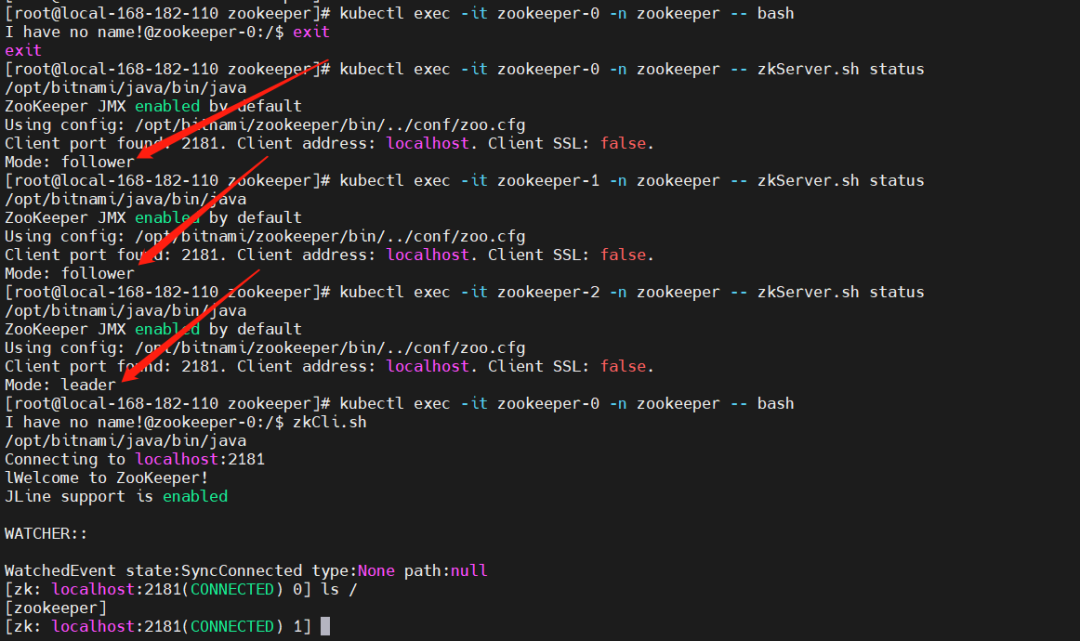

4) Test and verify

    # Check the role of each ZooKeeper node
    kubectl exec -it zookeeper-0 -n zookeeper -- zkServer.sh status
    kubectl exec -it zookeeper-1 -n zookeeper -- zkServer.sh status
    kubectl exec -it zookeeper-2 -n zookeeper -- zkServer.sh status

    # Log in to a ZooKeeper pod
    kubectl exec -it zookeeper-0 -n zookeeper -- bash
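`zkServer.sh status` reports each node's role on a line starting with `Mode:`, and a healthy three-node ensemble should show one leader and two followers. The sketch below extracts that field so the three results can be compared at a glance; the helper names `zk_mode` and `zk_cluster_modes` are illustrative, and the sample input is a shortened stand-in for real output.

```shell
# Sketch: pull the role out of `zkServer.sh status` output read from stdin.
# zk_mode and zk_cluster_modes are hypothetical helper names.
zk_mode() {
  awk -F': ' '/^Mode:/ {print $2}'
}

# Query all three pods; defined here but only useful against a live cluster.
zk_cluster_modes() {
  for i in 0 1 2; do
    printf 'zookeeper-%s: ' "$i"
    kubectl exec "zookeeper-$i" -n zookeeper -- zkServer.sh status 2>/dev/null | zk_mode
  done
}

# Demonstration on a shortened sample of the status output.
printf 'Client port found: 2181.\nMode: follower\n' | zk_mode
```

Against a running cluster, calling `zk_cluster_modes` would print one line per pod.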

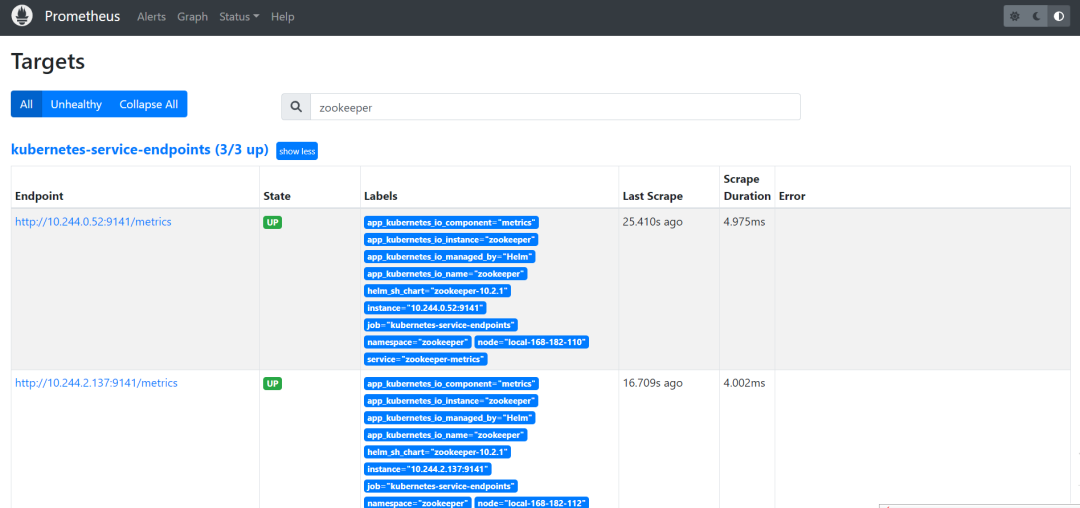

5) Prometheus monitoring

Prometheus: https://prometheus.k8s.local/targets?search=zookeeper

You can view the scraped metrics with the following commands:

    kubectl get --raw http://10.244.0.52:9141/metrics
    kubectl get --raw http://10.244.1.101:9141/metrics
    kubectl get --raw http://10.244.2.137:9141/metrics

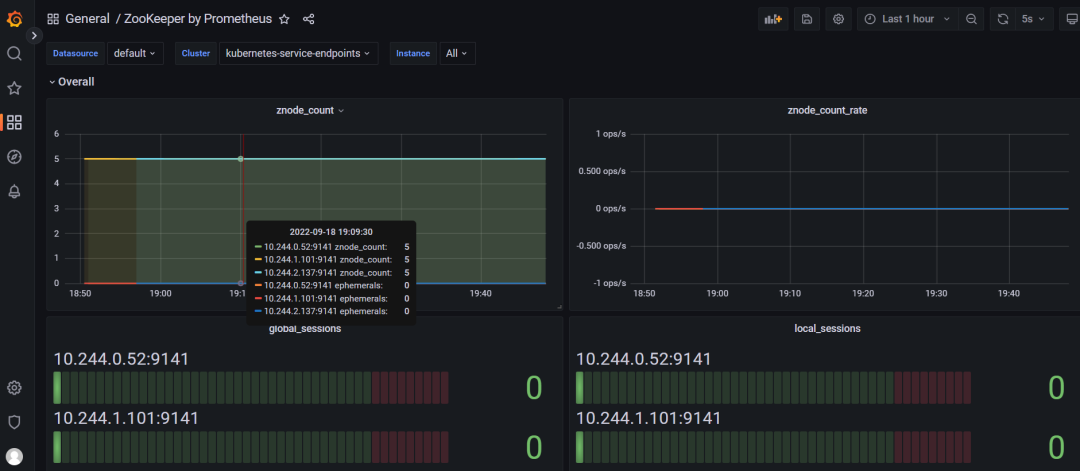

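Grafana: https://grafana.k8s.local/

Account: admin. Retrieve the password with the following command:

    kubectl get secret --namespace grafana grafana -o jsonpath="{.data.admin-password}" | base64 --decode ; echo

Import the Grafana dashboard template (cluster resource monitoring): 10465

Official dashboard download location: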

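6) Uninstall

    helm uninstall zookeeper -n zookeeper
    # Force-delete any pods that are still left in the namespace
    kubectl delete pod -n zookeeper `kubectl get pod -n zookeeper | awk 'NR>1{print $1}'` --force
    # Clear finalizers so the namespace can be removed
    kubectl patch ns zookeeper -p '{"metadata":{"finalizers":null}}'
    kubectl delete ns zookeeper --force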

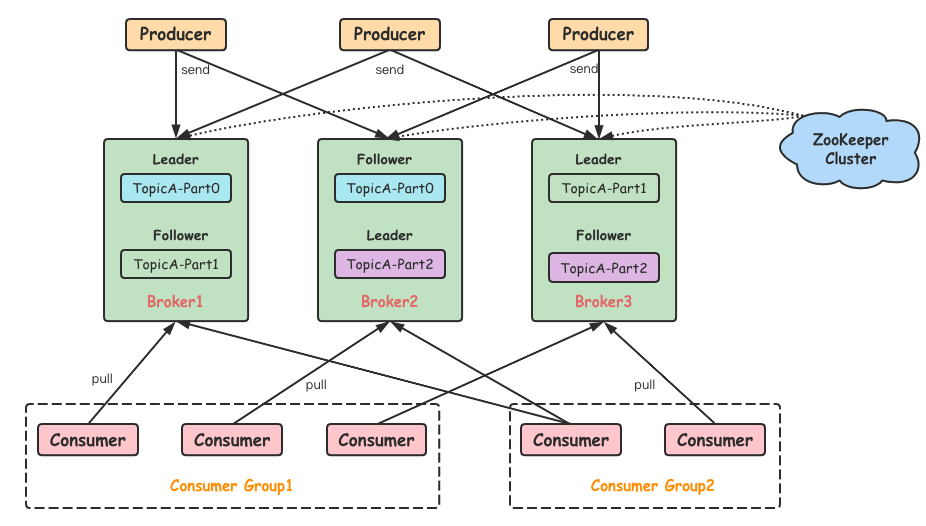

III. Deploying Kafka on k8s

1) Add the repository

Chart package location:

    helm repo add bitnami https://charts.bitnami.com/bitnami
    helm pull bitnami/kafka --version 18.4.2
    tar -xf kafka-18.4.2.tgz

2) Modify the configuration

- Modify kafka/values.yaml

    image:
      registry: myharbor.com
      repository: bigdata/kafka
      tag: 3.2.1-debian-11-r16
    ...
    replicaCount: 3
    ...
    service:
      type: NodePort
      nodePorts:
        client: "30092"
        external: "30094"
    ...
    externalAccess:
      enabled: true
      service:
        type: NodePort
        nodePorts:
          - 30001
          - 30002
          - 30003
        useHostIPs: true
    ...
    persistence:
      storageClass: "kafka-local-storage"
      size: "10Gi"
      # These directories must be created on the hosts in advance
      local:
        - name: kafka-0
          host: "local-168-182-110"
          path: "/opt/bigdata/servers/kafka/data/data1"
        - name: kafka-1
          host: "local-168-182-111"
          path: "/opt/bigdata/servers/kafka/data/data1"
        - name: kafka-2
          host: "local-168-182-112"
          path: "/opt/bigdata/servers/kafka/data/data1"
    ...
    metrics:
      kafka:
        enabled: true
        image:
          registry: myharbor.com
          repository: bigdata/kafka-exporter
          tag: 1.6.0-debian-11-r8
      jmx:
        enabled: true
        image:
          registry: myharbor.com
          repository: bigdata/jmx-exporter
          tag: 0.17.1-debian-11-r1
        annotations:
          prometheus.io/path: "/metrics"
    ...
    # Disable the bundled ZooKeeper and use the ensemble deployed above
    zookeeper:
      enabled: false
    ...
    externalZookeeper:
      servers:
        - zookeeper-0.zookeeper-headless.zookeeper
        - zookeeper-1.zookeeper-headless.zookeeper
        - zookeeper-2.zookeeper-headless.zookeeper
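The `externalZookeeper.servers` entries follow the StatefulSet DNS pattern `<pod>.<headless-service>.<namespace>`. As a rough sketch, the list can be generated from the replica count rather than typed by hand; `zk_servers` is a hypothetical helper, and it assumes the release and headless service are named `zookeeper` and `zookeeper-headless` as in this deployment.

```shell
# Sketch: generate the per-pod DNS names of the ZooKeeper StatefulSet.
# zk_servers is a hypothetical helper; the pod and service names match this article's release.
zk_servers() {
  replicas="$1"; namespace="$2"
  i=0
  while [ "$i" -lt "$replicas" ]; do
    echo "zookeeper-$i.zookeeper-headless.$namespace"
    i=$((i + 1))
  done
}

# Three replicas in the zookeeper namespace, as configured earlier.
zk_servers 3 zookeeper
```

The output matches the three entries under `servers`, so a change in `replicaCount` only needs a different first argument.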

- Add kafka/templates/pv.yaml

    {{- range .Values.persistence.local }}
    ---
    apiVersion: v1
    kind: PersistentVolume
    metadata:
      name: {{ .name }}
      labels:
        name: {{ .name }}
    spec:
      storageClassName: {{ $.Values.persistence.storageClass }}
      capacity:
        storage: {{ $.Values.persistence.size }}
      accessModes:
        - ReadWriteOnce
      local:
        path: {{ .path }}
      nodeAffinity:
        required:
          nodeSelectorTerms:
            - matchExpressions:
                - key: kubernetes.io/hostname
                  operator: In
                  values:
                    - {{ .host }}
    ---
    {{- end }}

- Add kafka/templates/storage-class.yaml

    kind: StorageClass
    apiVersion: storage.k8s.io/v1
    metadata:
      name: {{ .Values.persistence.storageClass }}
    provisioner: kubernetes.io/no-provisioner

3) Install

    # Prepare the images first
    docker pull docker.io/bitnami/kafka:3.2.1-debian-11-r16
    docker tag docker.io/bitnami/kafka:3.2.1-debian-11-r16 myharbor.com/bigdata/kafka:3.2.1-debian-11-r16
    docker push myharbor.com/bigdata/kafka:3.2.1-debian-11-r16

    # kafka-exporter
    docker pull docker.io/bitnami/kafka-exporter:1.6.0-debian-11-r8
    docker tag docker.io/bitnami/kafka-exporter:1.6.0-debian-11-r8 myharbor.com/bigdata/kafka-exporter:1.6.0-debian-11-r8
    docker push myharbor.com/bigdata/kafka-exporter:1.6.0-debian-11-r8

    # JMX exporter
    docker pull docker.io/bitnami/jmx-exporter:0.17.1-debian-11-r1
    docker tag docker.io/bitnami/jmx-exporter:0.17.1-debian-11-r1 myharbor.com/bigdata/jmx-exporter:0.17.1-debian-11-r1
    docker push myharbor.com/bigdata/jmx-exporter:0.17.1-debian-11-r1
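The three images go through the same pull, tag, and push sequence, so the commands can be generated from a list. This is only a sketch: `mirror_cmds` is a hypothetical helper that prints the commands instead of running them.

```shell
# Sketch: print the pull/tag/push commands for mirroring images to a private registry.
# mirror_cmds is a hypothetical helper; it prints commands and does not call docker.
mirror_cmds() {
  src_repo="$1"; dst_repo="$2"; shift 2
  for image in "$@"; do
    echo "docker pull $src_repo/$image"
    echo "docker tag $src_repo/$image $dst_repo/$image"
    echo "docker push $dst_repo/$image"
  done
}

# Source and target repositories and tags as used in this section.
mirror_cmds docker.io/bitnami myharbor.com/bigdata \
  kafka:3.2.1-debian-11-r16 \
  kafka-exporter:1.6.0-debian-11-r8 \
  jmx-exporter:0.17.1-debian-11-r1
```

Generating the list this way avoids the easy mistake of dropping one of the three steps for an image.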

    # Install
    helm install kafka ./kafka -n kafka --create-namespace

    NAME: kafka
    LAST DEPLOYED: Sun Sep 18 20:02 2022
    NAMESPACE: kafka
    STATUS: deployed
    REVISION: 1
    TEST SUITE: None
    NOTES:
    CHART NAME: kafka
    CHART VERSION: 18.4.2
    APP VERSION: 3.2.1
    ---------------------------------------------------------------------------------------------
     WARNING

        By specifying "serviceType=LoadBalancer" and not configuring the authentication
        you have most likely exposed the Kafka service externally without any
        authentication mechanism.

        For security reasons, we strongly suggest that you switch to "ClusterIP" or
        "NodePort". As alternative, you can also configure the Kafka authentication.

    ---------------------------------------------------------------------------------------------

    ** Please be patient while the chart is being deployed **

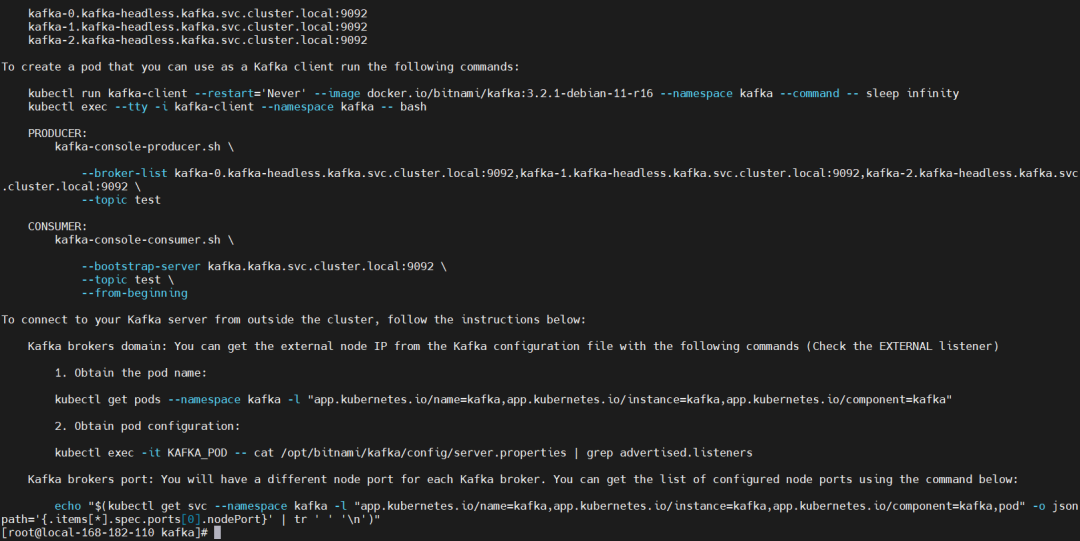

    Kafka can be accessed by consumers via port 9092 on the following DNS name from within your cluster:

        kafka.kafka.svc.cluster.local

    Each Kafka broker can be accessed by producers via port 9092 on the following DNS name(s) from within your cluster:

        kafka-0.kafka-headless.kafka.svc.cluster.local:9092
        kafka-1.kafka-headless.kafka.svc.cluster.local:9092
        kafka-2.kafka-headless.kafka.svc.cluster.local:9092

    To create a pod that you can use as a Kafka client run the following commands:

        kubectl run kafka-client --restart='Never' --image docker.io/bitnami/kafka:3.2.1-debian-11-r16 --namespace kafka --command -- sleep infinity
        kubectl exec --tty -i kafka-client --namespace kafka -- bash

        PRODUCER:
            kafka-console-producer.sh \
                --broker-list kafka-0.kafka-headless.kafka.svc.cluster.local:9092,kafka-1.kafka-headless.kafka.svc.cluster.local:9092,kafka-2.kafka-headless.kafka.svc.cluster.local:9092 \
                --topic test

        CONSUMER:
            kafka-console-consumer.sh \
                --bootstrap-server kafka.kafka.svc.cluster.local:9092 \
                --topic test \
                --from-beginning

    To connect to your Kafka server from outside the cluster, follow the instructions below:

        Kafka brokers domain: You can get the external node IP from the Kafka configuration file with the following commands (Check the EXTERNAL listener)

            1. Obtain the pod name:

            kubectl get pods --namespace kafka -l "app.kubernetes.io/name=kafka,app.kubernetes.io/instance=kafka,app.kubernetes.io/component=kafka"

            2. Obtain pod configuration:

            kubectl exec -it KAFKA_POD -- cat /opt/bitnami/kafka/config/server.properties | grep advertised.listeners

        Kafka brokers port: You will have a different node port for each Kafka broker. You can get the list of configured node ports using the command below:

            echo "$(kubectl get svc --namespace kafka -l "app.kubernetes.io/name=kafka,app.kubernetes.io/instance=kafka,app.kubernetes.io/component=kafka,pod" -o jsonpath='{.items[*].spec.ports[0].nodePort}' | tr ' ' '\n')"

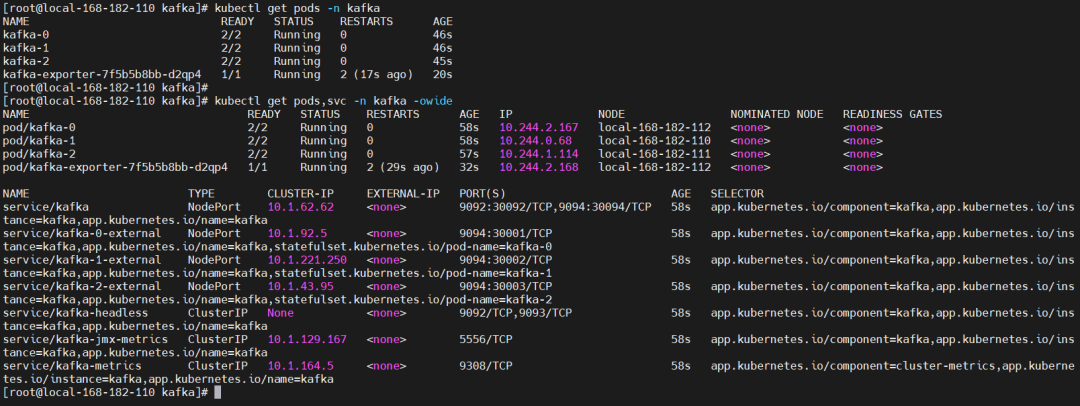

Check the pod status

    kubectl get pods,svc -n kafka -o wide

4)测试验证

#登录zookeeperpod

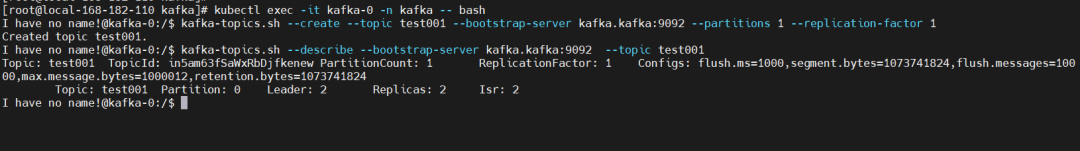

kubectlexec-itkafka-0-nkafka--bash

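1. Create a topic (one replica, one partition)

--create: create a topic

--topic: name of the new topic

--bootstrap-server: Kafka connection address

--config: topic-level settings that apply to this topic; for the list of parameters see "Topic-level configuration" in the Kafka documentation

--partitions: number of partitions for the new topic, 1 by default

--replication-factor: replication factor for each partition, 1 by default

    kafka-topics.sh --create --topic test001 --bootstrap-server kafka.kafka:9092 --partitions 1 --replication-factor 1

    # Inspect the topic
    kafka-topics.sh --describe --bootstrap-server kafka.kafka:9092 --topic test001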
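With three brokers, a topic can also be created with more partitions and replicas, but the replication factor cannot exceed the number of available brokers. The sketch below builds the `kafka-topics.sh` command and checks that constraint first; `topic_create_cmd` and the topic name `test002` are hypothetical and used only for illustration.

```shell
# Sketch: build a kafka-topics.sh create command after checking the replication factor.
# topic_create_cmd and the topic name test002 are hypothetical.
topic_create_cmd() {
  topic="$1"; partitions="$2"; replication="$3"; brokers="$4"
  # Kafka rejects a replication factor larger than the number of brokers.
  if [ "$replication" -gt "$brokers" ]; then
    echo "replication factor $replication exceeds broker count $brokers" >&2
    return 1
  fi
  echo "kafka-topics.sh --create --topic $topic --bootstrap-server kafka.kafka:9092 --partitions $partitions --replication-factor $replication"
}

# Three partitions and three replicas on the three-broker cluster deployed above.
topic_create_cmd test002 3 3 3
```

The printed command can be run inside the Kafka pod in the same way as the `test001` example.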

2. List topics

    kafka-topics.sh --list --bootstrap-server kafka.kafka:9092

3. Producer/consumer test

[Producer]

    kafka-console-producer.sh --broker-list kafka.kafka:9092 --topic test001

    {"id":"1","name":"n1","age":"20"}
    {"id":"2","name":"n2","age":"21"}
    {"id":"3","name":"n3","age":"22"}

[Consumer]

    # Consume from the beginning
    kafka-console-consumer.sh --bootstrap-server kafka.kafka:9092 --topic test001 --from-beginning

    # Start consuming from a given offset in a partition. Only one partition is specified here;
    # repeat the command or loop over all partitions of the topic as needed.
    kafka-console-consumer.sh --bootstrap-server kafka.kafka:9092 --topic test001 --partition 0 --offset 100 --group test001

4. Check the consumer backlog (lag)

    kafka-consumer-groups.sh --bootstrap-server kafka.kafka:9092 --describe --group test001
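The `--describe` output lists one row per partition with a LAG column, so the total backlog of a group is the sum of that column. The following sketch adds it up with awk; `total_lag` and `sample_output` are hypothetical helpers, and the sample rows are made-up values that only mimic the column layout.

```shell
# Sketch: sum the LAG column of `kafka-consumer-groups.sh --describe` output.
# total_lag and sample_output are hypothetical; the sample rows are invented.
total_lag() {
  awk '
    # Until the header is seen, look for the LAG column index.
    col == 0 { for (i = 1; i <= NF; i++) if ($i == "LAG") col = i; next }
    # Add numeric lag values; "-" means there is no committed offset.
    $col ~ /^[0-9]+$/ { sum += $col }
    END { print sum + 0 }
  '
}

sample_output() {
  cat <<'EOF'

GROUP    TOPIC    PARTITION  CURRENT-OFFSET  LOG-END-OFFSET  LAG  CONSUMER-ID  HOST  CLIENT-ID
test001  test001  0          100             103             3    -            -     -
test001  test001  1          40              45              5    -            -     -
EOF
}

sample_output | total_lag
```

Inside the Kafka pod, the real command output could be piped into `total_lag` in place of `sample_output`.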

5. Delete the topic

    kafka-topics.sh --delete --topic test001 --bootstrap-server kafka.kafka:9092

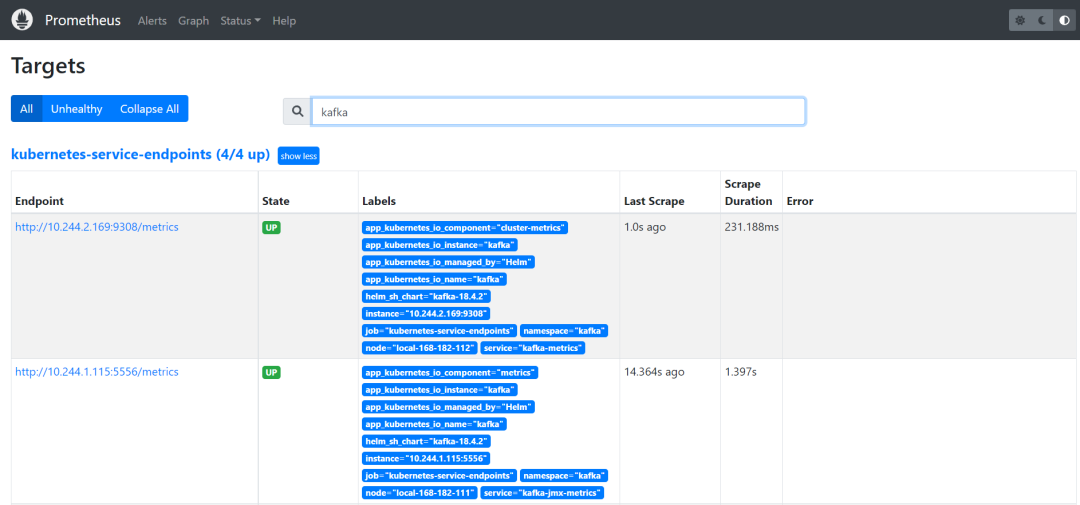

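5) Prometheus monitoring

Prometheus:

You can view the scraped metrics with the following command:

    kubectl get --raw http://10.244.2.165:9308/metrics

Grafana: https://grafana.k8s.local/

Account: admin. Retrieve the password with the following command:

    kubectl get secret --namespace grafana grafana -o jsonpath="{.data.admin-password}" | base64 --decode ; echo

Import the Grafana dashboard template (cluster resource monitoring): 11962

Official dashboard download location: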

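6) Uninstall

    helm uninstall kafka -n kafka
    # Force-delete any pods that are still left in the namespace
    kubectl delete pod -n kafka `kubectl get pod -n kafka | awk 'NR>1{print $1}'` --force
    # Clear finalizers so the namespace can be removed
    kubectl patch ns kafka -p '{"metadata":{"finalizers":null}}'
    kubectl delete ns kafka --force

That's it for deploying ZooKeeper + Kafka on k8s. If you have any questions, feel free to leave me a comment!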


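Original title: 14 张图详解 Zookeeper + Kafka on K8S 环境部署 (Zookeeper + Kafka on K8S deployment explained in 14 figures)

Source: WeChat ID magedu-Linux, WeChat official account 马哥Linux运维. Please credit the source when reposting.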
