Previous articles:

Kubernetes: Cluster Deployment

Kubernetes: Application Deployment and Access

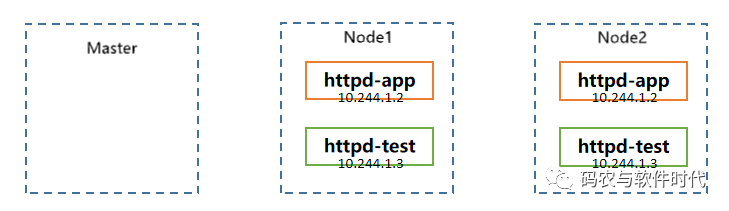

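The view after deployment is:

Building on that setup, this article studies how data flows through the flannel network.

I. How does data flow through the flannel network?

(1) node1:

1. Query the details of node1; the flannel Backend type is vxlan.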

root@master:~# kubectl describe node node1

Name: node1

Roles: node

Labels: beta.kubernetes.io/arch=amd64

beta.kubernetes.io/os=linux

kubernetes.io/arch=amd64

kubernetes.io/hostname=node1

kubernetes.io/os=linux

node-role.kubernetes.io/node=

Annotations: flannel.alpha.coreos.com/backend-data: {"VNI":1,"VtepMAC":"2a:95:ed:10:e4:56"}

flannel.alpha.coreos.com/backend-type: vxlan

flannel.alpha.coreos.com/kube-subnet-manager: true

flannel.alpha.coreos.com/public-ip: 30.0.1.160

kubeadm.alpha.kubernetes.io/cri-socket: /var/run/dockershim.sock

node.alpha.kubernetes.io/ttl: 0

volumes.kubernetes.io/controller-managed-attach-detach: true
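The flannel annotations above carry the values the rest of this walkthrough relies on. As a minimal sketch (the helper name `flannel_backend` is made up for illustration, and the annotation values are copied by hand from the output above rather than fetched from the API server), they can be decoded like this:

```python
import json

# Annotation values copied from the `kubectl describe node node1` output above.
annotations = {
    "flannel.alpha.coreos.com/backend-data": '{"VNI":1,"VtepMAC":"2a:95:ed:10:e4:56"}',
    "flannel.alpha.coreos.com/backend-type": "vxlan",
    "flannel.alpha.coreos.com/public-ip": "30.0.1.160",
}

def flannel_backend(node_annotations):
    """Return (backend type, VNI, VTEP MAC, public IP) from flannel node annotations."""
    prefix = "flannel.alpha.coreos.com/"
    # backend-data is itself a JSON document stored as a string.
    data = json.loads(node_annotations[prefix + "backend-data"])
    return (
        node_annotations[prefix + "backend-type"],
        data["VNI"],
        data["VtepMAC"],
        node_annotations[prefix + "public-ip"],
    )

backend = flannel_backend(annotations)
print(backend)
```

The VtepMAC value is the same MAC address that the flannel.1 device reports in the host's ip addr output later in this article, and the public IP is the address of ens3 on node1.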

2. List the pods and the nodes they run on: node1 runs one httpd-app pod and one httpd-test pod, and node2 runs the other httpd-app pod and the other httpd-test pod.

root@k8s:~# kubectl get pods -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

httpd-app-675b65488d-6kgk6 1/1 Running 0 2d5h 10.244.2.2 node2

httpd-app-675b65488d-9w69v 1/1 Running 0 2d5h 10.244.1.2 node1

httpd-test-fd769fcb7-nbqsn 1/1 Running 0 32h 10.244.2.3 node2

httpd-test-fd769fcb7-nnm99 1/1 Running 0 32h 10.244.1.3 node1
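The pod IPs already reveal the node: each node hands out pod addresses from its own /24 (10.244.1.0/24 on node1, 10.244.2.0/24 on node2, as the cni0 addresses later in this article show). A small sketch of that mapping, with the subnet table written by hand as an assumption rather than read from the cluster:

```python
import ipaddress

# Per-node pod subnets, taken from the cni0 addresses shown in this article.
NODE_SUBNETS = {
    "node1": ipaddress.ip_network("10.244.1.0/24"),
    "node2": ipaddress.ip_network("10.244.2.0/24"),
}

def node_for_pod_ip(pod_ip):
    """Return the node whose pod subnet contains pod_ip, or None if no subnet matches."""
    address = ipaddress.ip_address(pod_ip)
    for node, subnet in NODE_SUBNETS.items():
        if address in subnet:
            return node
    return None

# Pod names and IPs from the `kubectl get pods -o wide` output above.
pods = {
    "httpd-app-675b65488d-6kgk6": "10.244.2.2",
    "httpd-app-675b65488d-9w69v": "10.244.1.2",
    "httpd-test-fd769fcb7-nbqsn": "10.244.2.3",
    "httpd-test-fd769fcb7-nnm99": "10.244.1.3",
}

placement = {name: node_for_pod_ip(ip) for name, ip in pods.items()}
print(placement)
```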

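3. Enter the pod httpd-app-675b65488d-9w69v on node1 and run ip addr to inspect its network interfaces.

The first attempt to run ip addr fails with "ip: not found", so iproute2 has to be installed.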

root@k8s:~# kubectl exec -it httpd-app-675b65488d-9w69v /bin/sh

kubectl exec [POD] [COMMAND] is DEPRECATED and will be removed in a future version. Use kubectl exec [POD] -- [COMMAND] instead.

# ip addr

/bin/sh: 6: ip: not found

# apt-get install iproute2

Reading package lists... Done

Building dependency tree... Done

Reading state information... Done

The following additional packages will be installed:

libatm1 libbpf0 libbsd0 libcap2 libcap2-bin libelf1 libmd0 libmnl0 libpam-cap libxtables12

Suggested packages:

iproute2-doc

The following NEW packages will be installed:

iproute2 libatm1 libbpf0 libbsd0 libcap2 libcap2-bin libelf1 libmd0 libmnl0 libpam-cap libxtables12

0 upgraded, 11 newly installed, 0 to remove and 0 not upgraded.

Need to get 1530 kB of archives.

After this operation, 4960 kB of additional disk space will be used.

Do you want to continue? [Y/n] Y

Get:1 http://deb.debian.org/debian bullseye/main amd64 libelf1 amd64 0.183-1 [165 kB]

Get:2 http://deb.debian.org/debian bullseye/main amd64 libbpf0 amd64 1:0.3-2 [98.3 kB]

Get:3 http://deb.debian.org/debian bullseye/main amd64 libmd0 amd64 1.0.3-3 [28.0 kB]

Get:4 http://deb.debian.org/debian bullseye/main amd64 libbsd0 amd64 0.11.3-1 [108 kB]

Get:5 http://deb.debian.org/debian bullseye/main amd64 libcap2 amd64 1:2.44-1 [23.6 kB]

Get:6 http://deb.debian.org/debian bullseye/main amd64 libmnl0 amd64 1.0.4-3 [12.5 kB]

Get:7 http://deb.debian.org/debian bullseye/main amd64 libxtables12 amd64 1.8.7-1 [45.1 kB]

Get:8 http://deb.debian.org/debian bullseye/main amd64 libcap2-bin amd64 1:2.44-1 [32.6 kB]

Get:9 http://deb.debian.org/debian bullseye/main amd64 iproute2 amd64 5.10.0-4 [930 kB]

Get:10 http://deb.debian.org/debian bullseye/main amd64 libatm1 amd64 1:2.5.1-4 [71.3 kB]

Get:11 http://deb.debian.org/debian bullseye/main amd64 libpam-cap amd64 1:2.44-1 [15.4 kB]

Fetched 1530 kB in 1min 2s (24.8 kB/s)

debconf: delaying package configuration, since apt-utils is not installed

Selecting previously unselected package libelf1:amd64.

(Reading database ... 6834 files and directories currently installed.)

Preparing to unpack .../00-libelf1_0.183-1_amd64.deb ...

Unpacking libelf1:amd64 (0.183-1) ...

Selecting previously unselected package libbpf0:amd64.

Preparing to unpack .../01-libbpf0_1%3a0.3-2_amd64.deb ...

Unpacking libbpf0:amd64 (1:0.3-2) ...

Selecting previously unselected package libmd0:amd64.

Preparing to unpack .../02-libmd0_1.0.3-3_amd64.deb ...

Unpacking libmd0:amd64 (1.0.3-3) ...

Selecting previously unselected package libbsd0:amd64.

Preparing to unpack .../03-libbsd0_0.11.3-1_amd64.deb ...

Unpacking libbsd0:amd64 (0.11.3-1) ...

Selecting previously unselected package libcap2:amd64.

Preparing to unpack .../04-libcap2_1%3a2.44-1_amd64.deb ...

Unpacking libcap2:amd64 (1:2.44-1) ...

Selecting previously unselected package libmnl0:amd64.

Preparing to unpack .../05-libmnl0_1.0.4-3_amd64.deb ...

Unpacking libmnl0:amd64 (1.0.4-3) ...

Selecting previously unselected package libxtables12:amd64.

Preparing to unpack .../06-libxtables12_1.8.7-1_amd64.deb ...

Unpacking libxtables12:amd64 (1.8.7-1) ...

Selecting previously unselected package libcap2-bin.

Preparing to unpack .../07-libcap2-bin_1%3a2.44-1_amd64.deb ...

Unpacking libcap2-bin (1:2.44-1) ...

Selecting previously unselected package iproute2.

Preparing to unpack .../08-iproute2_5.10.0-4_amd64.deb ...

Unpacking iproute2 (5.10.0-4) ...

Selecting previously unselected package libatm1:amd64.

Preparing to unpack .../09-libatm1_1%3a2.5.1-4_amd64.deb ...

Unpacking libatm1:amd64 (1:2.5.1-4) ...

Selecting previously unselected package libpam-cap:amd64.

Preparing to unpack .../10-libpam-cap_1%3a2.44-1_amd64.deb ...

Unpacking libpam-cap:amd64 (1:2.44-1) ...

Setting up libatm1:amd64 (1:2.5.1-4) ...

Setting up libcap2:amd64 (1:2.44-1) ...

Setting up libcap2-bin (1:2.44-1) ...

Setting up libmnl0:amd64 (1.0.4-3) ...

Setting up libxtables12:amd64 (1.8.7-1) ...

Setting up libmd0:amd64 (1.0.3-3) ...

Setting up libbsd0:amd64 (0.11.3-1) ...

Setting up libelf1:amd64 (0.183-1) ...

Setting up libpam-cap:amd64 (1:2.44-1) ...

debconf: unable to initialize frontend: Dialog

debconf: (No usable dialog-like program is installed, so the dialog based frontend cannot be used. at /usr/share/perl5/Debconf/FrontEnd/Dialog.pm line 78.)

debconf: falling back to frontend: Readline

debconf: unable to initialize frontend: Readline

debconf: (Can't locate Term/ReadLine.pm in @INC (you may need to install the Term::ReadLine module) (@INC contains: /etc/perl /usr/local/lib/x86_64-linux-gnu/perl/5.32.1 /usr/local/share/perl/5.32.1 /usr/lib/x86_64-linux-gnu/perl5/5.32 /usr/share/perl5 /usr/lib/x86_64-linux-gnu/perl-base /usr/lib/x86_64-linux-gnu/perl/5.32 /usr/share/perl/5.32 /usr/local/lib/site_perl) at /usr/share/perl5/Debconf/FrontEnd/Readline.pm line 7.)

debconf: falling back to frontend: Teletype

Setting up libbpf0:amd64 (1:0.3-2) ...

Setting up iproute2 (5.10.0-4) ...

debconf: unable to initialize frontend: Dialog

debconf: (No usable dialog-like program is installed, so the dialog based frontend cannot be used. at /usr/share/perl5/Debconf/FrontEnd/Dialog.pm line 78.)

debconf: falling back to frontend: Readline

debconf: unable to initialize frontend: Readline

debconf: (Can't locate Term/ReadLine.pm in @INC (you may need to install the Term::ReadLine module) (@INC contains: /etc/perl /usr/local/lib/x86_64-linux-gnu/perl/5.32.1 /usr/local/share/perl/5.32.1 /usr/lib/x86_64-linux-gnu/perl5/5.32 /usr/share/perl5 /usr/lib/x86_64-linux-gnu/perl-base /usr/lib/x86_64-linux-gnu/perl/5.32 /usr/share/perl/5.32 /usr/local/lib/site_perl) at /usr/share/perl5/Debconf/FrontEnd/Readline.pm line 7.)

debconf: falling back to frontend: Teletype

Processing triggers for libc-bin (2.31-13+deb11u2) ...

# ip addr

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

3: eth0@if6: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1400 qdisc noqueue state UP group default

link/ether 4a:1f:b9:9d:30:75 brd ff:ff:ff:ff:ff:ff link-netnsid 0

inet 10.244.1.2/24 brd 10.244.1.255 scope global eth0

valid_lft forever preferred_lft forever

# ip route

default via 10.244.1.1 dev eth0

10.244.0.0/16 via 10.244.1.1 dev eth0

10.244.1.0/24 dev eth0 proto kernel scope link src 10.244.1.2

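Packets from 10.244.1.2 leave the pod through eth0. eth0 is a veth device, and the @if6 suffix means its peer is interface index 6 on the host, that is, 6: veth76d1fce3@if3. Together the two interfaces form a veth pair.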
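The matching rule described here (the number after @if in the pod is the interface index on the host) can be sketched in a few lines. The helper names are hypothetical, and the host lines are abbreviated from the node1 ip addr output in this article:

```python
import re

def peer_ifindex(ifname_with_peer):
    """Extract the peer interface index from a name such as 'eth0@if6'."""
    match = re.fullmatch(r"[^@]+@if(\d+)", ifname_with_peer)
    if match is None:
        raise ValueError(f"no @ifN suffix in {ifname_with_peer!r}")
    return int(match.group(1))

def find_host_interface(ip_addr_output, index):
    """Return the name of the interface with the given index in `ip addr` output."""
    for line in ip_addr_output.splitlines():
        # Interface lines look like "6: veth76d1fce3@if3: ..."; address lines do not match.
        match = re.match(r"(\d+):\s+([^:@\s]+)", line.strip())
        if match and int(match.group(1)) == index:
            return match.group(2)
    return None

# Abbreviated interface lines from node1 (assumed input for this sketch).
host_lines = """\
5: cni0: mtu 1400 qdisc noqueue state UP group default qlen 1000
6: veth76d1fce3@if3: mtu 1400 qdisc noqueue master cni0 state UP group default
7: veth16e5e638@if3: mtu 1400 qdisc noqueue master cni0 state UP group default
"""

peer = find_host_interface(host_lines, peer_ifindex("eth0@if6"))
print(peer)
```

Note that the match has to go through the index and not the @if3 suffix on the host side: both host veth devices show @if3, because each pod has its own network namespace in which eth0 is interface 3.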

4. On the host (node1), run ip addr to check:

root@k8s:# ip addr

1: lo: mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: ens3: mtu 1450 qdisc fq_codel state UP group default qlen 1000

link/ether fa:16:3e:69:98:f3 brd ff:ff:ff:ff:ff:ff

inet 30.0.1.160/24 brd 30.0.1.255 scope global dynamic ens3

valid_lft 19286sec preferred_lft 19286sec

inet6 fe80::f816:3eff:fe69:98f3/64 scope link

valid_lft forever preferred_lft forever

3: docker0: mtu 1500 qdisc noqueue state DOWN group default

link/ether 02:42:62:9b:ec:f4 brd ff:ff:ff:ff:ff:ff

inet 172.17.0.1/16 brd 172.17.255.255 scope global docker0

valid_lft forever preferred_lft forever

4: flannel.1: mtu 1400 qdisc noqueue state UNKNOWN group default

link/ether 2a:95:ed:10:e4:56 brd ff:ff:ff:ff:ff:ff

inet 10.244.1.0/32 brd 10.244.1.0 scope global flannel.1

valid_lft forever preferred_lft forever

inet6 fe80::2895:edff:fe10:e456/64 scope link

valid_lft forever preferred_lft forever

5: cni0: mtu 1400 qdisc noqueue state UP group default qlen 1000

link/ether 36:a5:21:90:7c:9a brd ff:ff:ff:ff:ff:ff

inet 10.244.1.1/24 brd 10.244.1.255 scope global cni0

valid_lft forever preferred_lft forever

inet6 fe80::34a5:21ff:fe90:7c9a/64 scope link

valid_lft forever preferred_lft forever

6: veth76d1fce3@if3: mtu 1400 qdisc noqueue master cni0 state UP group default

link/ether 16:ae:4d:3a:e9:d8 brd ff:ff:ff:ff:ff:ff link-netnsid 0

inet6 fe80::14ae:4dff:fe3a:e9d8/64 scope link

valid_lft forever preferred_lft forever

7: veth16e5e638@if3: mtu 1400 qdisc noqueue master cni0 state UP group default

link/ether f6:93:97:52:2e:5a brd ff:ff:ff:ff:ff:ff link-netnsid 1

inet6 fe80::f493:97ff:fe52:2e5a/64 scope link

valid_lft forever preferred_lft forever

Interface index 6 on the host is veth76d1fce3.

Alternatively, query the peer index from inside the pod with ethtool. If it is not installed, run apt-get install ethtool:

# ethtool -S eth0

/bin/sh: 27: ethtool: not found

# apt-get install ethtool

Reading package lists... Done

Building dependency tree... Done

Reading state information... Done

The following NEW packages will be installed:

ethtool

0 upgraded, 1 newly installed, 0 to remove and 0 not upgraded.

Need to get 182 kB of archives.

After this operation, 611 kB of additional disk space will be used.

Get:1 http://deb.debian.org/debian bullseye/main amd64 ethtool amd64 1:5.9-1 [182 kB]

Fetched 182 kB in 1s (142 kB/s)

debconf: delaying package configuration, since apt-utils is not installed

Selecting previously unselected package ethtool.

(Reading database ... 7124 files and directories currently installed.)

Preparing to unpack .../ethtool_1%3a5.9-1_amd64.deb ...

Unpacking ethtool (1:5.9-1) ...

Setting up ethtool (1:5.9-1) ...

# ethtool -S eth0

NIC statistics:

peer_ifindex: 6

5. The veth device veth76d1fce3 is attached to the bridge cni0, so the packet flows on to cni0.

root@k8s:~# brctl show

bridge name     bridge id               STP enabled     interfaces

cni0            8000.36a521907c9a       no              veth16e5e638

                                                        veth76d1fce3

docker0         8000.0242629becf4       no

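6. cni0 (10.244.1.1) is the gateway of the pods on node1. From cni0 the host routes the packet onward; the overlay device flannel.1 holds the address 10.244.1.0.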

root@k8s:~# ifconfig

cni0: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1400

inet 10.244.1.1 netmask 255.255.255.0 broadcast 10.244.1.255

inet6 fe80::34a5:21ff:fe90:7c9a prefixlen 64 scopeid 0x20<link>

ether 36:a5:21:90:7c:9a txqueuelen 1000 (Ethernet)

RX packets 5683 bytes 328382 (328.3 KB)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 6677 bytes 10746482 (10.7 MB)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

flannel.1: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1400

inet 10.244.1.0 netmask 255.255.255.255 broadcast 10.244.1.0

inet6 fe80::2895:edff:fe10:e456 prefixlen 64 scopeid 0x20<link>

ether 2a:95:ed:10:e4:56 txqueuelen 0 (Ethernet)

RX packets 116 bytes 11090 (11.0 KB)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 101 bytes 9963 (9.9 KB)

TX errors 0 dropped 91 overruns 0 carrier 0 collisions 0

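According to the route matching rules, a packet destined for 10.244.2.2 (assume a ping) matches the 10.244.2.0/24 route and is handed to the flannel.1 device.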

root@k8s:# route -n

Kernel IP routing table

Destination Gateway Genmask Flags Metric Ref Use Iface

0.0.0.0 30.0.1.1 0.0.0.0 UG 100 0 0 ens3

10.244.0.0 10.244.0.0 255.255.255.0 UG 0 0 0 flannel.1

10.244.1.0 0.0.0.0 255.255.255.0 U 0 0 0 cni0

10.244.2.0 10.244.2.0 255.255.255.0 UG 0 0 0 flannel.1

30.0.1.0 0.0.0.0 255.255.255.0 U 0 0 0 ens3

169.254.169.254 30.0.1.1 255.255.255.255 UGH 100 0 0 ens3

172.17.0.0 0.0.0.0 255.255.0.0 U 0 0 0 docker0
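To make the route matching concrete, here is a minimal longest-prefix-match sketch over the table above. It is a simplification under stated assumptions: the rows are typed in by hand, the function name `lookup` is invented for illustration, and metrics and policy routing, which the kernel also considers, are ignored.

```python
import ipaddress

# (destination network, gateway, interface) rows from node1's `route -n` output.
NODE1_ROUTES = [
    ("0.0.0.0/0", "30.0.1.1", "ens3"),
    ("10.244.0.0/24", "10.244.0.0", "flannel.1"),
    ("10.244.1.0/24", "0.0.0.0", "cni0"),
    ("10.244.2.0/24", "10.244.2.0", "flannel.1"),
    ("30.0.1.0/24", "0.0.0.0", "ens3"),
    ("169.254.169.254/32", "30.0.1.1", "ens3"),
    ("172.17.0.0/16", "0.0.0.0", "docker0"),
]

def lookup(routes, destination):
    """Return (network, gateway, interface) of the most specific matching route."""
    dest = ipaddress.ip_address(destination)
    candidates = []
    for net, gateway, dev in routes:
        network = ipaddress.ip_network(net)
        if dest in network:
            candidates.append((network, gateway, dev))
    # The route with the longest prefix wins.
    network, gateway, dev = max(candidates, key=lambda row: row[0].prefixlen)
    return str(network), gateway, dev

remote_pod = lookup(NODE1_ROUTES, "10.244.2.2")
local_pod = lookup(NODE1_ROUTES, "10.244.1.3")
print(remote_pod)
print(local_pod)
```

A pod on node2 resolves to flannel.1, while a pod on the same node resolves to cni0 and never touches the overlay device.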

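flannel.1 is the VTEP (VXLAN tunnel endpoint) device. Its details show VXLAN ID (VNI) 1, the local address 30.0.1.160 on ens3, and UDP destination port 8472: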

root@k8s:~# ip -d link show flannel.1

4: flannel.1: mtu 1400 qdisc noqueue state UNKNOWN mode DEFAULT group default

link/ether 2a:95:ed:10:e4:56 brd ff:ff:ff:ff:ff:ff promiscuity 0

vxlan id 1 local 30.0.1.160 dev ens3 srcport 0 0 dstport 8472 nolearning ttl inherit ageing 300 udpcsum noudp6zerocsumtx noudp6zerocsumrx addrgenmode eui64 numtxqueues 1 numrxqueues 1 gso_max_size 65536 gso_max_segs 65535
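The MTU values in these outputs fit the VXLAN overhead: ens3 has MTU 1450 and flannel.1 has MTU 1400. A short worked calculation, assuming an IPv4 underlay without IP options (the constant and function names are illustrative only):

```python
# Bytes added around each inner IP packet when it is sent as VXLAN over IPv4.
INNER_ETHERNET = 14  # inner Ethernet header carried inside the tunnel
VXLAN_HEADER = 8     # VXLAN header (flags + VNI)
OUTER_UDP = 8        # outer UDP header (destination port 8472 here)
OUTER_IPV4 = 20      # outer IPv4 header without options

VXLAN_OVERHEAD = INNER_ETHERNET + VXLAN_HEADER + OUTER_UDP + OUTER_IPV4

def overlay_mtu(underlay_mtu):
    """Largest inner IP packet that still fits in one underlay packet."""
    return underlay_mtu - VXLAN_OVERHEAD

flannel_mtu = overlay_mtu(1450)  # 1450 is the MTU of ens3 in this article
print(VXLAN_OVERHEAD, flannel_mtu)
```

The result equals the MTU of 1400 reported for flannel.1, cni0, and the pod's eth0.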

(2) node2:

1. Query the network devices on node2: ens3 is the host's Ethernet interface, cni0 is the bridge, and flannel.1 is the VTEP device.

root@k8s:/# ip addr

1: lo: mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: ens3: mtu 1450 qdisc fq_codel state UP group default qlen 1000

link/ether fa:16:3e:eb:3a:5e brd ff:ff:ff:ff:ff:ff

inet 30.0.1.47/24 brd 30.0.1.255 scope global dynamic ens3

valid_lft 61438sec preferred_lft 61438sec

inet6 fe80::f816:3eff:feeb:3a5e/64 scope link

valid_lft forever preferred_lft forever

3: docker0: mtu 1500 qdisc noqueue state DOWN group default

link/ether 02:42:9c:0c:bb:08 brd ff:ff:ff:ff:ff:ff

inet 172.17.0.1/16 brd 172.17.255.255 scope global docker0

valid_lft forever preferred_lft forever

4: flannel.1: mtu 1400 qdisc noqueue state UNKNOWN group default

link/ether 72:c8:ad:5f:87:07 brd ff:ff:ff:ff:ff:ff

inet 10.244.2.0/32 brd 10.244.2.0 scope global flannel.1

valid_lft forever preferred_lft forever

inet6 fe80::70c8:adff:fe5f:8707/64 scope link

valid_lft forever preferred_lft forever

5: cni0: mtu 1400 qdisc noqueue state UP group default qlen 1000

link/ether de:fb:ba:bd:46:44 brd ff:ff:ff:ff:ff:ff

inet 10.244.2.1/24 brd 10.244.2.255 scope global cni0

valid_lft forever preferred_lft forever

inet6 fe80::dcfb:baff:febd:4644/64 scope link

valid_lft forever preferred_lft forever

6: veth79a7513f@if3: mtu 1400 qdisc noqueue master cni0 state UP group default

link/ether de:88:ba:a5:77:cb brd ff:ff:ff:ff:ff:ff link-netnsid 0

inet6 fe80::dc88:baff:fea5:77cb/64 scope link

valid_lft forever preferred_lft forever

7: vethd0184a65@if3: mtu 1400 qdisc noqueue master cni0 state UP group default

link/ether 5a:6f:a9:bc:09:27 brd ff:ff:ff:ff:ff:ff link-netnsid 1

inet6 fe80::586f:a9ff:febc:927/64 scope link

valid_lft forever preferred_lft forever

root@k8s:/#

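2. Through the VXLAN tunnel, flannel.1 on node2 receives the packet from node1. Because the destination IP is 10.244.2.2, the packet matches the route 10.244.2.0/24 dev cni0 and is forwarded to cni0.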

root@k8s:/# ip route

default via 30.0.1.1 dev ens3 proto dhcp src 30.0.1.47 metric 100

10.244.0.0/24 via 10.244.0.0 dev flannel.1 onlink

10.244.1.0/24 via 10.244.1.0 dev flannel.1 onlink

10.244.2.0/24 dev cni0 proto kernel scope link src 10.244.2.1

30.0.1.0/24 dev ens3 proto kernel scope link src 30.0.1.47

169.254.169.254 via 30.0.1.1 dev ens3 proto dhcp src 30.0.1.47 metric 100

172.17.0.0/16 dev docker0 proto kernel scope link src 172.17.0.1 linkdown

3. The ports attached to cni0 are veth79a7513f and vethd0184a65.

root@k8s:/# brctl show cni0

bridge name     bridge id               STP enabled     interfaces

cni0            8000.defbbabd4644       no              veth79a7513f

                                                        vethd0184a65

4. Enter the pod httpd-app-675b65488d-6kgk6; its veth pair is veth79a7513f -- eth0@if6.

root@k8s:~# kubectl exec -it httpd-app-675b65488d-6kgk6 /bin/sh

kubectl exec [POD] [COMMAND] is DEPRECATED and will be removed in a future version. Use kubectl exec [POD] -- [COMMAND] instead.

# ip addr

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

3: eth0@if6: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1400 qdisc noqueue state UP group default

link/ether 0e:da:e9:4e:f0:bf brd ff:ff:ff:ff:ff:ff link-netnsid 0

inet 10.244.2.2/24 brd 10.244.2.255 scope global eth0

valid_lft forever preferred_lft forever

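From the analysis of node1 and node2, the data-flow diagram is:

II. Analyzing the data flow with packet captures

From the httpd-app pod on node1 (10.244.1.2), ping the httpd-app pod on node2 (10.244.2.2).

Running traceroute from the pod on node1 shows the following path: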

# traceroute 10.244.2.2

traceroute to 10.244.2.2 (10.244.2.2), 30 hops max, 60 byte packets

1 10.244.1.1 (10.244.1.1) 0.163 ms 0.025 ms 0.017 ms

2 10.244.2.0 (10.244.2.0) 1.269 ms 1.185 ms 1.097 ms

3 10-244-2-2.httpd-app.default.svc.cluster.local (10.244.2.2) 1.409 ms 1.357 ms 1.303 ms
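The three hops can be reproduced from the routing tables shown earlier. The sketch below is a simplified model, not how traceroute itself works: it follows the gateway of the most specific route from the pod to node1 to node2 until the destination is directly connected. The table contents are copied from this article (overlay routes only); `GATEWAY_OWNER` and the function names are assumptions made for the example.

```python
import ipaddress

# Routes as (destination, gateway); a gateway of None means directly connected.
ROUTES = {
    "pod": [
        ("0.0.0.0/0", "10.244.1.1"),
        ("10.244.0.0/16", "10.244.1.1"),
        ("10.244.1.0/24", None),
    ],
    "node1": [
        ("10.244.0.0/24", "10.244.0.0"),
        ("10.244.1.0/24", None),
        ("10.244.2.0/24", "10.244.2.0"),
    ],
    "node2": [
        ("10.244.0.0/24", "10.244.0.0"),
        ("10.244.1.0/24", "10.244.1.0"),
        ("10.244.2.0/24", None),
    ],
}

# Which routing table takes over once the packet reaches a gateway address.
GATEWAY_OWNER = {"10.244.1.1": "node1", "10.244.2.0": "node2"}

def next_gateway(routes, destination):
    """Gateway of the longest-prefix route that matches destination."""
    dest = ipaddress.ip_address(destination)
    matches = [
        (ipaddress.ip_network(net), gateway)
        for net, gateway in routes
        if dest in ipaddress.ip_network(net)
    ]
    return max(matches, key=lambda match: match[0].prefixlen)[1]

def trace(start, destination):
    """List the gateway addresses visited on the way to destination."""
    hops, current = [], start
    while True:
        gateway = next_gateway(ROUTES[current], destination)
        if gateway is None:
            # Directly connected: the destination itself is the last hop.
            hops.append(destination)
            return hops
        hops.append(gateway)
        current = GATEWAY_OWNER[gateway]

hops = trace("pod", "10.244.2.2")
print(hops)
```

The list matches the traceroute output above: cni0 on node1, flannel.1 on node2, then the target pod.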

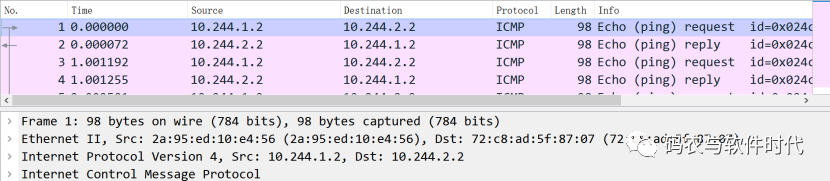

1. On node1, capture on flannel.1 and ens3:

root@k8s:/# tcpdump -n -vv -i flannel.1 -w /var/tmp/n1-flannel.cap

root@k8s:/# tcpdump -n -vv -i ens3 -w /var/tmp/n1-ens3.cap

Node1--flannel.1

Node1--ens3

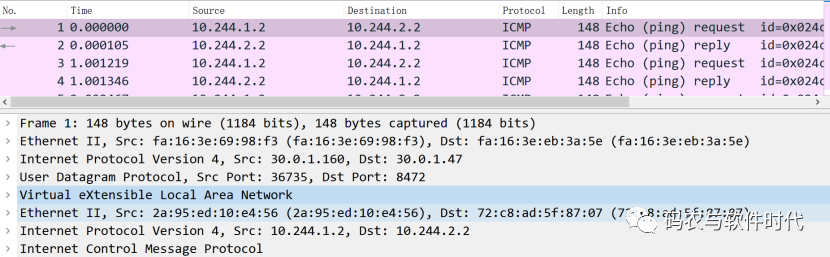

2. On node2, capture on flannel.1 and ens3:

root@k8s:/# tcpdump -n -vv -i flannel.1 -w /var/tmp/n2-flannel.cap

root@k8s:/# tcpdump -n -vv -i ens3 -w /var/tmp/n2-ens3.cap

Node2--flannel.1

Node2--ens3

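The captures show that no VXLAN packets appear on flannel.1, which only carries the inner, unencapsulated packets, while the packets on ens3 are VXLAN-encapsulated. VXLAN encapsulation and decapsulation therefore happen between these two capture points, in the kernel VXLAN device flannel.1. With the vxlan backend, flanneld is not in the data path; it only maintains the routes and the ARP and FDB entries that the device uses.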
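What distinguishes the ens3 capture is the 8-byte VXLAN header that follows the outer UDP header (destination port 8472 in this setup). As a hedged sketch of that header layout (one flags byte, three reserved bytes, a 24-bit VNI, one reserved byte), the following builds and parses it for VNI 1; the function names are illustrative and the bytes are constructed here, not taken from the captures:

```python
import struct

VXLAN_UDP_PORT = 8472   # dstport shown by `ip -d link show flannel.1`
VNI_VALID_FLAG = 0x08   # the "I" flag: the VNI field is valid

def build_vxlan_header(vni):
    """Pack flags and VNI into the 8-byte VXLAN header (network byte order)."""
    if not 0 <= vni < 2 ** 24:
        raise ValueError("VNI must fit in 24 bits")
    # First word: flags in the top byte. Second word: VNI in the top three bytes.
    return struct.pack("!II", VNI_VALID_FLAG << 24, vni << 8)

def parse_vxlan_header(header):
    """Return the VNI from an 8-byte VXLAN header, checking the valid flag."""
    first_word, second_word = struct.unpack("!II", header[:8])
    if not (first_word >> 24) & VNI_VALID_FLAG:
        raise ValueError("VNI flag not set")
    return second_word >> 8

header = build_vxlan_header(1)   # flannel uses VNI 1 in this cluster
vni = parse_vxlan_header(header)
print(header.hex(), vni)
```

Everything after these 8 bytes is the original inner Ethernet frame, which is what the capture on flannel.1 shows without any wrapping.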
