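With the proliferation of online social media and review platforms, a plethora of opinionated data has been logged, bearing great potential for supporting decision-making processes. Sentiment analysis studies people's sentiments in the text they produce, such as product reviews, blog comments, and forum discussions. It has wide applications in fields as diverse as politics (e.g., analyzing public sentiment toward policies), finance (e.g., analyzing market sentiment), and marketing (e.g., product research and brand management).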

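Since sentiments can be categorized as discrete polarities or scales (e.g., positive and negative), we can consider sentiment analysis as a text classification task, which transforms a varying-length text sequence into a fixed-length text category. In this chapter, we will use Stanford's large movie review dataset for sentiment analysis. It consists of a training set and a testing set, each containing 25000 movie reviews downloaded from IMDb. In both datasets, there are equal numbers of "positive" and "negative" labels, indicating the two sentiment polarities.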

import os
import torch
from torch import nn
from d2l import torch as d2l

In MXNet, the equivalent imports are:

import os
from mxnet import np, npx
from d2l import mxnet as d2l
npx.set_np()

16.1.1. Reading the Dataset

First, download and extract this IMDb review dataset into the path ../data/aclImdb.

#@save
d2l.DATA_HUB['aclImdb'] = (d2l.DATA_URL + 'aclImdb_v1.tar.gz',
                           '01ada507287d82875905620988597833ad4e0903')

data_dir = d2l.download_extract('aclImdb', 'aclImdb')

Downloading ../data/aclImdb_v1.tar.gz from http://d2l-data.s3-accelerate.amazonaws.com/aclImdb_v1.tar.gz...
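The second element stored in `d2l.DATA_HUB['aclImdb']` is a 40-character hexadecimal string, the length of a SHA-1 digest. Assuming it is used that way, a download helper can compare it with the hash of an already-downloaded archive to decide whether the cached file is intact and can be reused. The sketch below shows that check with Python's standard `hashlib` on a small throwaway file; `sha1_of_file` and `is_cached` are hypothetical names for illustration, not d2l functions.

```python
import hashlib
import os
import tempfile


def sha1_of_file(path, chunk_size=1 << 20):
    """Return the SHA-1 hex digest of a file, read in fixed-size chunks."""
    sha1 = hashlib.sha1()
    with open(path, 'rb') as f:
        while True:
            chunk = f.read(chunk_size)
            if not chunk:
                break
            sha1.update(chunk)
    return sha1.hexdigest()


def is_cached(path, expected_sha1):
    """Hypothetical helper: True if `path` exists and its hash matches."""
    return os.path.exists(path) and sha1_of_file(path) == expected_sha1


# Demo on a small throwaway file instead of the real archive.
with tempfile.TemporaryDirectory() as tmp:
    path = os.path.join(tmp, 'demo.bin')
    with open(path, 'wb') as f:
        f.write(b'hello')
    digest = sha1_of_file(path)
    cached = is_cached(path, digest)

print(digest, cached)
```

Reading in chunks keeps memory use flat even for an archive of tens of megabytes.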


Next, read the training and test datasets. Each example is a review and its label: 1 for "positive" and 0 for "negative".

#@save
def read_imdb(data_dir, is_train):
    """Read the IMDb review dataset text sequences and labels."""
    data, labels = [], []
    for label in ('pos', 'neg'):
        folder_name = os.path.join(data_dir, 'train' if is_train else 'test',
                                   label)
        for file in os.listdir(folder_name):
            with open(os.path.join(folder_name, file), 'rb') as f:
                # Strip newline characters from the review text
                review = f.read().decode('utf-8').replace('\n', '')
                data.append(review)
                labels.append(1 if label == 'pos' else 0)
    return data, labels

train_data = read_imdb(data_dir, is_train=True)
print('# trainings:', len(train_data[0]))
for x, y in zip(train_data[0][:3], train_data[1][:3]):
    print('label:', y, 'review:', x[:60])

# trainings: 25000
label: 1 review: Henry Hathaway was daring, as well as enthusiastic, for his
label: 1 review: An unassuming, subtle and lean film, "The Man in the White S
label: 1 review: Eddie Murphy really made me laugh my ass off on this HBO sta
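To see the folder layout that `read_imdb` expects (`<data_dir>/train/pos`, `<data_dir>/train/neg`, and likewise for `test`) without downloading anything, the sketch below builds a tiny fake dataset in a temporary directory and reads it back with a standard-library variant of the same loop. The reviews and file names are made up, and unlike `read_imdb` the sketch sorts file names so the order is deterministic. It also shows why the escape in `.replace('\n', '')` matters: it removes line breaks, whereas `.replace('n', '')` would delete every letter n.

```python
import os
import tempfile
from collections import Counter


def read_reviews(data_dir, is_train):
    """Stdlib sketch of read_imdb: files in pos/ get label 1, files in neg/ get 0."""
    data, labels = [], []
    split = 'train' if is_train else 'test'
    for label in ('pos', 'neg'):
        folder = os.path.join(data_dir, split, label)
        for name in sorted(os.listdir(folder)):  # sorted for a stable order
            with open(os.path.join(folder, name), 'rb') as f:
                # Remove newline characters, not the letter 'n'.
                review = f.read().decode('utf-8').replace('\n', '')
            data.append(review)
            labels.append(1 if label == 'pos' else 0)
    return data, labels


# Made-up reviews laid out like <data_dir>/train/{pos,neg}/*.txt.
toy = {'pos': ['A fine, funny film.\n', 'Loved it.\n'],
       'neg': ['Not worth it.\n', 'Boring.\n']}
with tempfile.TemporaryDirectory() as root:
    for label, reviews in toy.items():
        folder = os.path.join(root, 'train', label)
        os.makedirs(folder)
        for i, text in enumerate(reviews):
            with open(os.path.join(folder, f'{i}.txt'), 'wb') as f:
                f.write(text.encode('utf-8'))
    data, labels = read_reviews(root, is_train=True)

print(data)
print(Counter(labels))  # balanced toy set: two reviews per label
```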


16.1.2. Preprocessing the Dataset

Treating each word as a token and filtering out words that appear fewer than 5 times, we create a vocabulary from the training dataset.

train_tokens = d2l.tokenize(train_data[0], token='word')
vocab = d2l.Vocab(train_tokens, min_freq=5, reserved_tokens=['<pad>'])
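The code above uses `d2l.Vocab` as a black box. The hypothetical `SimpleVocab` below sketches the idea of a minimum-frequency vocabulary under stated assumptions: index 0 is the unknown token `<unk>`, reserved tokens such as `<pad>` come next, and only tokens occurring at least `min_freq` times are kept, most frequent first (ties broken alphabetically here). It is not d2l's implementation, and `min_freq=2` is used only because the toy corpus is tiny; the section uses 5.

```python
from collections import Counter


class SimpleVocab:
    """Hypothetical, simplified min-frequency vocabulary (not d2l.Vocab)."""

    def __init__(self, tokens, min_freq=0, reserved_tokens=()):
        # Flatten the list of token lists and count occurrences.
        counter = Counter(tok for line in tokens for tok in line)
        # Index 0 is the unknown token; reserved tokens follow it.
        self.idx_to_token = ['<unk>'] + list(reserved_tokens)
        # Keep frequent tokens, most frequent first, ties alphabetical.
        for tok, freq in sorted(counter.items(), key=lambda kv: (-kv[1], kv[0])):
            if freq >= min_freq and tok not in self.idx_to_token:
                self.idx_to_token.append(tok)
        self.token_to_idx = {tok: i for i, tok in enumerate(self.idx_to_token)}

    def __len__(self):
        return len(self.idx_to_token)

    def __getitem__(self, tokens):
        # A list maps to a list of indices; unseen tokens map to 0 (<unk>).
        if isinstance(tokens, (list, tuple)):
            return [self[t] for t in tokens]
        return self.token_to_idx.get(tokens, 0)


train_tokens = [['a', 'good', 'movie'], ['a', 'bad', 'movie'], ['a', 'good', 'plot']]
vocab = SimpleVocab(train_tokens, min_freq=2, reserved_tokens=['<pad>'])
print(vocab.idx_to_token)
print(vocab[['a', 'good', 'plot']])
```

Here 'bad' and 'plot' occur only once, so they fall below `min_freq` and are looked up as `<unk>`.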


After tokenization, let's plot the histogram of review lengths in tokens.

d2l.set_figsize()
d2l.plt.xlabel('# tokens per review')
d2l.plt.ylabel('count')
d2l.plt.hist([len(line) for line in train_tokens], bins=range(0, 1000, 50));
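The histogram above groups review lengths into bins of width 50. If you want the raw counts rather than a plot, a few lines of standard-library Python compute roughly the same bins. This is a sketch: its handling of the upper edge differs slightly from matplotlib's (which closes its last bin on the right), and the lengths below are made up.

```python
from collections import Counter


def length_histogram(token_lists, bin_width=50, max_len=1000):
    """Count sequences per half-open bin [k * bin_width, (k + 1) * bin_width)."""
    counts = Counter()
    for tokens in token_lists:
        n = len(tokens)
        if n < max_len:  # lengths outside the plotted range are skipped
            counts[n // bin_width * bin_width] += 1  # key = lower bin edge
    return dict(sorted(counts.items()))


# Toy "reviews" with 10, 49, 50, 120 and 1200 tokens.
toy_tokens = [['w'] * n for n in (10, 49, 50, 120, 1200)]
hist = length_histogram(toy_tokens)
print(hist)
```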


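As expected, the reviews vary in length. To process a minibatch of such reviews at a time, we set the length of each review to 500 by truncation and padding, which is similar to the preprocessing step for the machine translation dataset in Section 10.5.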

num_steps = 500  # sequence length
train_features = torch.tensor([d2l.truncate_pad(
    vocab[line], num_steps, vocab['<pad>']) for line in train_tokens])
print(train_features.shape)

torch.Size([25000, 500])

In MXNet:

num_steps = 500  # sequence length
train_features = np.array([d2l.truncate_pad(
    vocab[line], num_steps, vocab['<pad>']) for line in train_tokens])
print(train_features.shape)

(25000, 500)
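The truncation-and-padding step can be sketched in plain Python. The function below is a hypothetical re-implementation of the behavior the text describes, assuming truncation keeps the beginning of a review and padding is appended on the right; it is not the source of `d2l.truncate_pad`, and the padding index 1 is an assumption. Because every row ends up with exactly `num_steps` entries, the rows can be stacked into one 2-D array, which is why the result above has shape (25000, 500).

```python
def truncate_pad(line, num_steps, padding_token):
    """Cut a token-index list to num_steps, or right-pad it up to num_steps."""
    if len(line) > num_steps:
        return line[:num_steps]
    return line + [padding_token] * (num_steps - len(line))


PAD = 1  # hypothetical index of '<pad>' in the vocabulary
batch = [truncate_pad(seq, 4, PAD)
         for seq in ([5, 6], [7, 8, 9, 10, 11], [12, 13, 14, 15])]
print(batch)
```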

16.1.3. Creating Data Iterators

Now we can create data iterators. At each iteration, a minibatch of examples is returned.

train_iter = d2l.load_array((train_features, torch.tensor(train_data[1])), 64)

for X, y in train_iter:
    print('X:', X.shape, ', y:', y.shape)
    break
print('# batches:', len(train_iter))

X: torch.Size([64, 500]) , y: torch.Size([64])
# batches: 391

In MXNet:

train_iter = d2l.load_array((train_features, train_data[1]), 64)

for X, y in train_iter:
    print('X:', X.shape, ', y:', y.shape)
    break
print('# batches:', len(train_iter))

X: (64, 500) , y: (64,)
# batches: 391
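The reported 391 batches follow from ceil(25000 / 64): 390 full batches of 64 cover 24960 reviews, and the remaining 40 reviews form a smaller final batch. The standard-library sketch below, a hypothetical `minibatches` generator rather than `d2l.load_array`, reproduces that count with shuffling and a kept partial last batch, using dummy features and labels.

```python
import math
import random


def minibatches(features, labels, batch_size, shuffle=True, seed=0):
    """Yield (X, y) minibatches; the final batch may be smaller than batch_size."""
    indices = list(range(len(features)))
    if shuffle:
        random.Random(seed).shuffle(indices)
    for start in range(0, len(indices), batch_size):
        batch = indices[start:start + batch_size]
        yield [features[i] for i in batch], [labels[i] for i in batch]


num_examples, batch_size = 25000, 64
print(math.ceil(num_examples / batch_size))

# Dummy data with the same number of examples as the IMDb training set.
features = [[i] for i in range(num_examples)]
labels = [i % 2 for i in range(num_examples)]
sizes = [len(y) for _, y in minibatches(features, labels, batch_size)]
print(len(sizes), sizes[-1])
```

Dropping the partial batch instead would give 390 batches and discard 40 reviews per epoch.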

16.1.4. Putting It All Together

Finally, we wrap the above steps into the load_data_imdb function. It returns the training and test data iterators and the vocabulary of the IMDb review dataset.

#@save
def load_data_imdb(batch_size, num_steps=500):
    """Return data iterators and the vocabulary of the IMDb review dataset."""
    data_dir = d2l.download_extract('aclImdb', 'aclImdb')
    train_data = read_imdb(data_dir, True)
    test_data = read_imdb(data_dir, False)
    train_tokens = d2l.tokenize(train_data[0], token='word')
    test_tokens = d2l.tokenize(test_data[0], token='word')
    vocab = d2l.Vocab(train_tokens, min_freq=5)
    train_features = torch.tensor([d2l.truncate_pad(
        vocab[line], num_steps, vocab['<pad>']) for line in train_tokens])
    test_features = torch.tensor([d2l.truncate_pad(
        vocab[line], num_steps, vocab['<pad>']) for line in test_tokens])
    train_iter = d2l.load_array((train_features, torch.tensor(train_data[1])),
                                batch_size)
    test_iter = d2l.load_array((test_features, torch.tensor(test_data[1])),
                               batch_size,
                               is_train=False)
    return train_iter, test_iter, vocab

In MXNet, the same function reads:

#@save
def load_data_imdb(batch_size, num_steps=500):
    """Return data iterators and the vocabulary of the IMDb review dataset."""
    data_dir = d2l.download_extract('aclImdb', 'aclImdb')
    train_data = read_imdb(data_dir, True)
    test_data = read_imdb(data_dir, False)
    train_tokens = d2l.tokenize(train_data[0], token='word')
    test_tokens = d2l.tokenize(test_data[0], token='word')
    vocab = d2l.Vocab(train_tokens, min_freq=5)
    train_features = np.array([d2l.truncate_pad(
        vocab[line], num_steps, vocab['<pad>']) for line in train_tokens])
    test_features = np.array([d2l.truncate_pad(
        vocab[line], num_steps, vocab['<pad>']) for line in test_tokens])
    train_iter = d2l.load_array((train_features, train_data[1]), batch_size)
    test_iter = d2l.load_array((test_features, test_data[1]), batch_size,
                               is_train=False)
    return train_iter, test_iter, vocab
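As a compact recap of what `load_data_imdb` composes, the hypothetical `toy_pipeline` below runs the same steps on a few made-up sentences using only the standard library: whitespace tokenization (an assumption standing in for `d2l.tokenize`), a vocabulary built from the training split alone (sorted alphabetically here for simplicity), and truncation/padding of both splits. Test words never seen in training, such as 'acting', map to the unknown index 0. One design difference: the sketch reserves `<pad>` explicitly, whereas `load_data_imdb` calls `d2l.Vocab` without `reserved_tokens`, so its `vocab['<pad>']` presumably resolves to the unknown-token index.

```python
from collections import Counter


def toy_pipeline(train_texts, test_texts, num_steps, min_freq=1):
    """Hypothetical sketch: tokenize, build a train-only vocab, pad both splits."""
    train_tokens = [text.split() for text in train_texts]
    test_tokens = [text.split() for text in test_texts]
    # The vocabulary is built from training tokens only.
    counter = Counter(tok for line in train_tokens for tok in line)
    idx_to_token = ['<unk>', '<pad>'] + sorted(
        tok for tok, freq in counter.items() if freq >= min_freq)
    token_to_idx = {tok: i for i, tok in enumerate(idx_to_token)}
    pad = token_to_idx['<pad>']

    def encode(tokens):
        # Unknown tokens map to index 0, then truncate and right-pad.
        ids = [token_to_idx.get(tok, 0) for tok in tokens][:num_steps]
        return ids + [pad] * (num_steps - len(ids))

    return ([encode(t) for t in train_tokens],
            [encode(t) for t in test_tokens],
            idx_to_token)


train_X, test_X, vocab = toy_pipeline(
    ['great film', 'dull film'], ['great acting'], num_steps=3)
print(vocab)
print(train_X, test_X)
```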

16.1.5. Summary

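Sentiment analysis studies people's sentiments in the text they produce. It is treated as a text classification problem that transforms a varying-length text sequence into a fixed-length text category.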

After preprocessing, we can load Stanford's large movie review dataset (the IMDb review dataset) into data iterators together with a vocabulary.

16.1.6. Exercises

Which hyperparameters in this section can we modify to speed up training sentiment analysis models?

Can you implement a function to load a dataset of Amazon reviews into data iterators and labels for sentiment analysis?

-

数据集

+关注

关注

4文章

1211浏览量

24890 -

pytorch

+关注

关注

2文章

808浏览量

13404

发布评论请先 登录

相关推荐

高阶API构建模型和数据集使用

在机智云上创建项目和数据集

Rough集和数据挖掘应用于案件综合分析

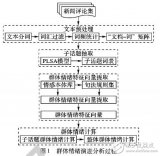

基于PLSA模型的群体情绪演进分析

利用Python和PyTorch处理面向对象的数据集

利用 Python 和 PyTorch 处理面向对象的数据集(2)) :创建数据集对象

PyTorch教程-16.1. 情绪分析和数据集

PyTorch教程-16.1. 情绪分析和数据集

评论