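As we have seen in Section 19.2, evaluating hyperparameter configurations is expensive, so we might have to wait hours or even days before random search returns a good hyperparameter configuration. In practice, we often have access to a pool of resources, such as multiple GPUs on the same machine or multiple machines with a single GPU each. This raises the question: how do we efficiently distribute random search?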

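In general, we distinguish between synchronous and asynchronous parallel hyperparameter optimization (see Fig. 19.3.1). In the synchronous setting, we wait for all concurrently running trials to finish before we start the next batch. Consider configuration spaces that contain hyperparameters such as the number of filters or the number of layers of a deep neural network. Hyperparameter configurations with more layers or filters will naturally take more time to finish, and all other trials in the same batch have to wait at synchronization points (grey area in Fig. 19.3.1) before we can continue the optimization process.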
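To make the cost of synchronization points concrete, the following toy simulation assigns made-up durations to eight trials (larger values standing in for configurations with more layers or filters) and runs them in synchronous batches. The durations and the helper synchronous_schedule are hypothetical illustrations, not part of Syne Tune.

```python
# Hypothetical trial durations in minutes; larger values stand in for
# configurations with more layers or filters.
durations = [3, 9, 2, 4, 8, 3, 5, 1]


def synchronous_schedule(durations, n_workers):
    """Run trials in batches of n_workers; every batch waits for its slowest trial.

    Returns (wall-clock time, idle worker-time). Idle time only counts workers
    that ran a trial in the batch and then waited at the synchronization point.
    """
    wallclock, idle = 0, 0
    for start in range(0, len(durations), n_workers):
        batch = durations[start:start + n_workers]
        batch_time = max(batch)  # the synchronization point
        wallclock += batch_time
        idle += sum(batch_time - d for d in batch)
    return wallclock, idle


print(synchronous_schedule(durations, n_workers=2))  # (26, 17)
```

With two workers, 17 of the 52 available worker-minutes are spent waiting, mostly because the two long trials (9 and 8 minutes) each hold up their batch.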

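In the asynchronous setting, we schedule a new trial as soon as resources become available. This exploits our resources optimally, since we avoid any synchronization overhead. For random search, each new hyperparameter configuration is chosen independently of all others, and in particular without exploiting observations from any prior evaluation. This means we can trivially parallelize random search asynchronously. This is not straightforward for more sophisticated methods that make decisions based on previous observations (see Section 19.5). While we need access to more resources than in the sequential setting, asynchronous random search exhibits a linear speedup, in that a certain performance is reached K times faster if K trials can be run in parallel.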

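Fig. 19.3.1 Distributing a hyperparameter optimization process either synchronously or asynchronously. Compared to the sequential setting, we can reduce the overall wall-clock time while keeping the total compute the same. Synchronous scheduling might lead to idle workers in the case of stragglers.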
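The asynchronous policy can be simulated in the same spirit: each new trial is started on whichever worker becomes free first, tracked with a min-heap of worker availability times. The durations are made up, and the helper asynchronous_schedule is a hypothetical illustration that assumes durations are known in advance, which only holds in a simulation.

```python
import heapq

# Hypothetical trial durations in minutes, started in this order.
durations = [3, 9, 2, 4, 8, 3, 5, 1]


def asynchronous_schedule(durations, n_workers):
    """Greedy asynchronous scheduling; returns the wall-clock time to finish all trials."""
    free_at = [0] * n_workers  # time at which each worker becomes available
    heapq.heapify(free_at)
    finish = 0
    for d in durations:
        start = heapq.heappop(free_at)  # earliest available worker
        end = start + d
        finish = max(finish, end)
        heapq.heappush(free_at, end)
    return finish


for k in (1, 2, 4):
    # Compare against the ideal linear speedup sum(durations) / k.
    print(k, asynchronous_schedule(durations, k), sum(durations) / k)
```

The wall-clock time drops from 35 to 18 to 10 minutes, close to the ideal 35/k. The remaining gap comes from the longest trials, which cannot be split across workers.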

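In this notebook, we will look at asynchronous random search, where trials are executed in multiple Python processes on the same machine. Distributed job scheduling and execution is difficult to implement from scratch. We will use Syne Tune (Salinas et al., 2022), which provides a simple interface for asynchronous HPO. Syne Tune is designed to run with different execution backends, and the interested reader is invited to study its simple APIs to learn more about distributed HPO.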

import logging
from d2l import torch as d2l
logging.basicConfig(level=logging.INFO)
from syne_tune import StoppingCriterion, Tuner
from syne_tune.backend.python_backend import PythonBackend
from syne_tune.config_space import loguniform, randint
from syne_tune.experiments import load_experiment
from syne_tune.optimizer.baselines import RandomSearch

INFO:root:SageMakerBackend is not imported since dependencies are missing. You can install them with pip install 'syne-tune[extra]'
AWS dependencies are not imported since dependencies are missing. You can install them with pip install 'syne-tune[aws]' or (for everything) pip install 'syne-tune[extra]'
AWS dependencies are not imported since dependencies are missing. You can install them with pip install 'syne-tune[aws]' or (for everything) pip install 'syne-tune[extra]'
INFO:root:Ray Tune schedulers and searchers are not imported since dependencies are missing. You can install them with pip install 'syne-tune[raytune]' or (for everything) pip install 'syne-tune[extra]'

19.3.1. Objective Function

First, we have to define a new objective function such that it now returns the performance back to Syne Tune via the report callback.

def hpo_objective_lenet_synetune(learning_rate, batch_size, max_epochs):
    from syne_tune import Reporter
    from d2l import torch as d2l
    model = d2l.LeNet(lr=learning_rate, num_classes=10)
    # max_epochs=1: fit() below trains a single epoch and sets up the trainer
    # state; all later epochs are run one at a time via fit_epoch()
    trainer = d2l.HPOTrainer(max_epochs=1, num_gpus=1)
    data = d2l.FashionMNIST(batch_size=batch_size)
    model.apply_init([next(iter(data.get_dataloader(True)))[0]], d2l.init_cnn)
    report = Reporter()
    for epoch in range(1, max_epochs + 1):
        if epoch == 1:
            # Initialize the state of Trainer
            trainer.fit(model=model, data=data)
        else:
            trainer.fit_epoch()
        validation_error = trainer.validation_error().cpu().detach().numpy()
        # Send the result of this epoch back to Syne Tune
        report(epoch=epoch, validation_error=float(validation_error))

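Note that the PythonBackend of Syne Tune requires dependencies to be imported inside the function definition, because each trial runs the function in a separate Python subprocess.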
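The reporting pattern itself does not depend on d2l or a GPU. The sketch below replaces training with a toy formula and replaces Syne Tune's Reporter with a hypothetical RecordingReporter that simply stores every call, so an objective can be checked locally before launching a tuning job. Unlike hpo_objective_lenet_synetune, the reporter is passed in as an argument here purely to make it testable.

```python
class RecordingReporter:
    """Hypothetical stand-in for syne_tune.Reporter: records every reported result."""

    def __init__(self):
        self.results = []

    def __call__(self, **kwargs):
        self.results.append(kwargs)


def toy_objective(learning_rate, max_epochs, report):
    """Hypothetical objective: reports a made-up validation error after every epoch."""
    for epoch in range(1, max_epochs + 1):
        # Toy "training": the error shrinks with epochs and is lowest near lr = 0.3.
        validation_error = abs(learning_rate - 0.3) + 1.0 / epoch
        report(epoch=epoch, validation_error=float(validation_error))


reporter = RecordingReporter()
toy_objective(learning_rate=0.3, max_epochs=3, report=reporter)
print([r["epoch"] for r in reporter.results])  # [1, 2, 3]
```

The keyword passed to report (here validation_error) is the name a scheduler would later be told to look for as its metric.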

19.3.2. Asynchronous Scheduler

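First, we define the number of workers that evaluate trials concurrently. We also need to specify how long we want to run random search, by defining an upper limit on the total wall-clock time.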

n_workers = 2  # Needs to be <= the number of available GPUs
max_wallclock_time = 12 * 60  # 12 minutes

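Next, we state which metric we want to optimize and whether we want to minimize or maximize this metric. Namely, metric needs to correspond to the argument name passed to the report callback.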

mode = "min"
metric = "validation_error"

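We use the configuration space from our previous example. In Syne Tune, this dictionary can also be used to pass constant attributes to the training script. We make use of this feature in order to pass max_epochs. Moreover, we specify the first configuration to be evaluated in initial_config.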

config_space = {
    "learning_rate": loguniform(1e-2, 1),
    "batch_size": randint(32, 256),
    "max_epochs": 10,
}
initial_config = {
    "learning_rate": 0.1,
    "batch_size": 128,
}
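For intuition about what random search draws from this space, here is a plain-Python sketch of sampling one configuration. It assumes that loguniform(1e-2, 1) samples uniformly in log space and that randint(32, 256) includes both bounds; the helper sample_config is hypothetical and is not how Syne Tune implements sampling internally.

```python
import math
import random


def sample_config(rng):
    """Draw one configuration from a space like config_space (hypothetical helper)."""
    # Log-uniform: uniform in log space, so [0.01, 0.1] is as likely as [0.1, 1].
    learning_rate = math.exp(rng.uniform(math.log(1e-2), math.log(1.0)))
    # Integer uniform; Python's randint includes both endpoints.
    batch_size = rng.randint(32, 256)
    # Constants are passed through unchanged to every trial.
    return {"learning_rate": learning_rate, "batch_size": batch_size, "max_epochs": 10}


rng = random.Random(0)
configs = [sample_config(rng) for _ in range(1000)]
frac_below_0_1 = sum(c["learning_rate"] < 0.1 for c in configs) / len(configs)
print(round(frac_below_0_1, 2))
```

Because the learning rate is sampled on a log scale, the printed fraction of learning rates below 0.1 should come out close to one half, even though 0.1 is near the lower end of the interval.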

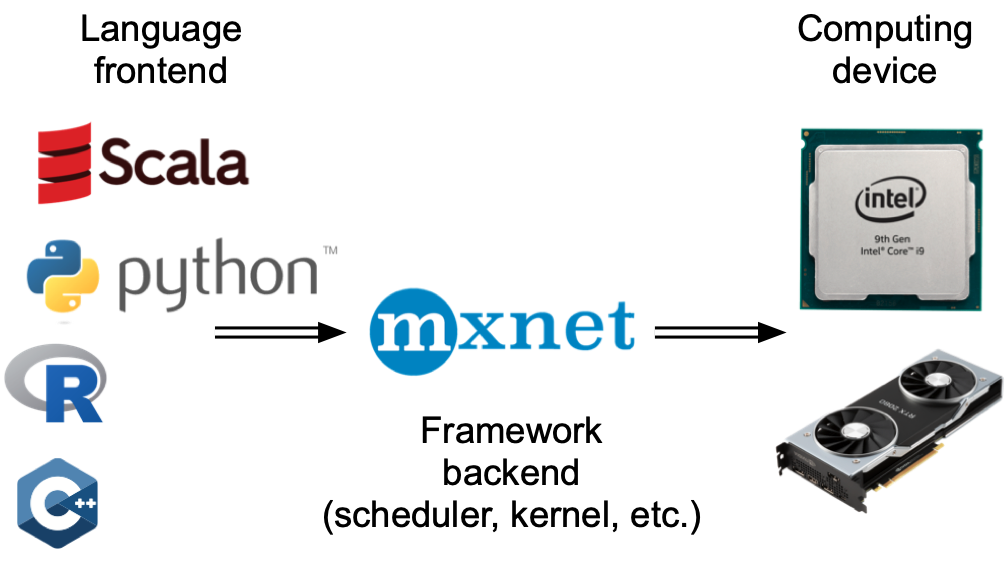

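Next, we need to specify the backend for job execution. Here we just consider distribution on a local machine, where parallel jobs are executed as subprocesses. However, for large-scale HPO, we could also run this on a cluster or cloud environment, where each trial consumes a full instance.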

trial_backend = PythonBackend(
    tune_function=hpo_objective_lenet_synetune,
    config_space=config_space,
)

We can now create the scheduler for asynchronous random search, which is similar in behavior to our BasicScheduler from Section 19.2.

scheduler = RandomSearch(
    config_space,
    metric=metric,
    mode=mode,
    points_to_evaluate=[initial_config],
)

INFO:syne_tune.optimizer.schedulers.fifo:max_resource_level = 10, as inferred from config_space
INFO:syne_tune.optimizer.schedulers.fifo:Master random_seed = 4033665588

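Syne Tune also features a Tuner, where the main experiment loop and bookkeeping are centralized, and interactions between scheduler and backend are mediated.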

stop_criterion = StoppingCriterion(max_wallclock_time=max_wallclock_time)
tuner = Tuner(
    trial_backend=trial_backend,
    scheduler=scheduler,
    stop_criterion=stop_criterion,
    n_workers=n_workers,
    print_update_interval=int(max_wallclock_time * 0.6),
)
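Conceptually, the loop a tuner runs for asynchronous random search is short: keep every worker busy, wait for whichever trial finishes first, record its result, and immediately start a new trial on the freed worker. The sketch below shows that loop with Python's concurrent.futures. It is not Syne Tune's implementation: it uses threads instead of subprocesses, a hypothetical toy_objective that sleeps instead of training, and a fixed number of trials instead of a wall-clock stopping criterion.

```python
import random
import time
from concurrent.futures import FIRST_COMPLETED, ThreadPoolExecutor, wait


def toy_objective(config):
    """Hypothetical stand-in for training: sleeps, then returns a fake validation error."""
    time.sleep(config["duration"])
    return abs(config["learning_rate"] - 0.3)


def async_random_search(n_workers, max_trials, seed=0):
    rng = random.Random(seed)

    def suggest():
        # Random search: each configuration ignores all previous results.
        return {"learning_rate": rng.uniform(0.01, 1.0),
                "duration": rng.uniform(0.01, 0.05)}

    results, n_started = [], 0
    with ThreadPoolExecutor(max_workers=n_workers) as pool:
        running = {}  # future -> configuration
        # Fill every worker slot once.
        for _ in range(min(n_workers, max_trials)):
            config = suggest()
            running[pool.submit(toy_objective, config)] = config
            n_started += 1
        while running:
            # Block until at least one trial finishes; there is no batch barrier.
            done, _ = wait(running, return_when=FIRST_COMPLETED)
            for future in done:
                config = running.pop(future)
                results.append((config, future.result()))
                # A worker just became free: schedule the next trial right away.
                if n_started < max_trials:
                    new_config = suggest()
                    running[pool.submit(toy_objective, new_config)] = new_config
                    n_started += 1
    return results


results = async_random_search(n_workers=2, max_trials=8)
best_config, best_error = min(results, key=lambda r: r[1])
print(len(results), round(best_error, 3))
```

Since suggest() never looks at results, the loop needs no coordination beyond "a worker is free", which is why random search parallelizes so easily.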

Let us run our distributed HPO experiment. According to our stopping criterion, it will run for about 12 minutes.

tuner.run()

INFO:syne_tune.tuner:results of trials will be saved on /home/d2l-worker/syne-tune/python-entrypoint-2023-02-10-04-56-21-691
INFO:root:Detected 8 GPUs
INFO:root:running subprocess with command: /home/d2l-worker/miniconda3/envs/d2l-en-release-0/bin/python /home/d2l-worker/miniconda3/envs/d2l-en-release-0/lib/python3.9/site-packages/syne_tune/backend/python_backend/python_entrypoint.py --learning_rate 0.1 --batch_size 128 --max_epochs 10 --tune_function_root /home/d2l-worker/syne-tune/python-entrypoint-2023-02-10-04-56-21-691/tune_function --tune_function_hash 53504c42ecb95363b73ac1f849a8a245 --st_checkpoint_dir /home/d2l-worker/syne-tune/python-entrypoint-2023-02-10-04-56-21-691/0/checkpoints
INFO:syne_tune.tuner:(trial 0) - scheduled config {'learning_rate': 0.1, 'batch_size': 128, 'max_epochs': 10}
INFO:root:running subprocess with command: /home/d2l-worker/miniconda3/envs/d2l-en-release-0/bin/python /home/d2l-worker/miniconda3/envs/d2l-en-release-0/lib/python3.9/site-packages/syne_tune/backend/python_backend/python_entrypoint.py --learning_rate 0.31642002803324326 --batch_size 52 --max_epochs 10 --tune_function_root /home/d2l-worker/syne-tune/python-entrypoint-2023-02-10-04-56-21-691/tune_function --tune_function_hash 53504c42ecb95363b73ac1f849a8a245 --st_checkpoint_dir /home/d2l-worker/syne-tune/python-entrypoint-2023-02-10-04-56-21-691/1/checkpoints
INFO:syne_tune.tuner:(trial 1) - scheduled config {'learning_rate': 0.31642002803324326, 'batch_size': 52, 'max_epochs': 10}
INFO:syne_tune.tuner:Trial trial_id 0 completed.
INFO:root:running subprocess with command: /home/d2l-worker/miniconda3/envs/d2l-en-release-0/bin/python /home/d2l-worker/miniconda3/envs/d2l-en-release-0/lib/python3.9/site-packages/syne_tune/backend/python_backend/python_entrypoint.py --learning_rate 0.045813161553582046 --batch_size 71 --max_epochs 10 --tune_function_root /home/d2l-worker/syne-tune/python-entrypoint-2023-02-10-04-56-21-691/tune_function --tune_function_hash 53504c42ecb95363b73ac1f849a8a245 --st_checkpoint_dir /home/d2l-worker/syne-tune/python-entrypoint-2023-02-10-04-56-21-691/2/checkpoints
INFO:syne_tune.tuner:(trial 2) - scheduled config {'learning_rate': 0.045813161553582046, 'batch_size': 71, 'max_epochs': 10}
INFO:syne_tune.tuner:Trial trial_id 1 completed.
INFO:root:running subprocess with command: /home/d2l-worker/miniconda3/envs/d2l-en-release-0/bin/python /home/d2l-worker/miniconda3/envs/d2l-en-release-0/lib/python3.9/site-packages/syne_tune/backend/python_backend/python_entrypoint.py --learning_rate 0.11375402103945391 --batch_size 244 --max_epochs 10 --tune_function_root /home/d2l-worker/syne-tune/python-entrypoint-2023-02-10-04-56-21-691/tune_function --tune_function_hash 53504c42ecb95363b73ac1f849a8a245 --st_checkpoint_dir /home/d2l-worker/syne-tune/python-entrypoint-2023-02-10-04-56-21-691/3/checkpoints
INFO:syne_tune.tuner:(trial 3) - scheduled config {'learning_rate': 0.11375402103945391, 'batch_size': 244, 'max_epochs': 10}
INFO:syne_tune.tuner:Trial trial_id 2 completed.
INFO:root:running subprocess with command: /home/d2l-worker/miniconda3/envs/d2l-en-release-0/bin/python /home/d2l-worker/miniconda3/envs/d2l-en-release-0/lib/python3.9/site-packages/syne_tune/backend/python_backend/python_entrypoint.py --learning_rate 0.5211657199736571 --batch_size 47 --max_epochs 10 --tune_function_root /home/d2l-worker/syne-tune/python-entrypoint-2023-02-10-04-56-21-691/tune_function --tune_function_hash 53504c42ecb95363b73ac1f849a8a245 --st_checkpoint_dir /home/d2l-worker/syne-tune/python-entrypoint-2023-02-10-04-56-21-691/4/checkpoints
INFO:syne_tune.tuner:(trial 4) - scheduled config {'learning_rate': 0.5211657199736571, 'batch_size': 47, 'max_epochs': 10}
INFO:syne_tune.tuner:Trial trial_id 3 completed.
INFO:root:running subprocess with command: /home/d2l-worker/miniconda3/envs/d2l-en-release-0/bin/python /home/d2l-worker/miniconda3/envs/d2l-en-release-0/lib/python3.9/site-packages/syne_tune/backend/python_backend/python_entrypoint.py --learning_rate 0.05259930532982774 --batch_size 181 --max_epochs 10 --tune_function_root /home/d2l-worker/syne-tune/python-entrypoint-2023-02-10-04-56-21-691/tune_function --tune_function_hash 53504c42ecb95363b73ac1f849a8a245 --st_checkpoint_dir /home/d2l-worker/syne-tune/python-entrypoint-2023-02-10-04-56-21-691/5/checkpoints
INFO:syne_tune.tuner:(trial 5) - scheduled config {'learning_rate': 0.05259930532982774, 'batch_size': 181, 'max_epochs': 10}
INFO:syne_tune.tuner:Trial trial_id 5 completed.
INFO:root:running subprocess with command: /home/d2l-worker/miniconda3/envs/d2l-en-release-0/bin/python /home/d2l-worker/miniconda3/envs/d2l-en-release-0/lib/python3.9/site-packages/syne_tune/backend/python_backend/python_entrypoint.py --learning_rate 0.09086002421630578 --batch_size 48 --max_epochs 10 --tune_function_root /home/d2l-worker/syne-tune/python-entrypoint-2023-02-10-04-56-21-691/tune_function --tune_function_hash 53504c42ecb95363b73ac1f849a8a245 --st_checkpoint_dir /home/d2l-worker/syne-tune/python-entrypoint-2023-02-10-04-56-21-691/6/checkpoints
INFO:syne_tune.tuner:(trial 6) - scheduled config {'learning_rate': 0.09086002421630578, 'batch_size': 48, 'max_epochs': 10}
INFO:syne_tune.tuner:tuning status (last metric is reported)
trial_id      status  iter  learning_rate  batch_size  max_epochs  epoch  validation_error  worker-time
       0   Completed    10       0.100000         128          10     10          0.258109   108.366785
       1   Completed    10       0.316420          52          10     10          0.146223   179.660365
       2   Completed    10       0.045813          71          10     10          0.311251   143.567631
       3   Completed    10       0.113754         244          10     10          0.336094    90.168444
       4  InProgress     8       0.521166          47          10      8          0.150257   156.696658
       5   Completed    10       0.052599         181          10     10          0.399893    91.044401
       6  InProgress     2       0.090860          48          10      2          0.453050    36.693606
2 trials running, 5 finished (5 until the end), 436.55s wallclock-time
INFO:syne_tune.tuner:Trial trial_id 4 completed.
INFO:root:running subprocess with command: /home/d2l-worker/miniconda3/envs/d2l-en-release-0/bin/python /home/d2l-worker/miniconda3/envs/d2l-en-release-0/lib/python3.9/site-packages/syne_tune/backend/python_backend/python_entrypoint.py --learning_rate 0.03542833641356924 --batch_size 94 --max_epochs 10 --tune_function_root /home/d2l-worker/syne-tune/python-entrypoint-2023-02-10-04-56-21-691/tune_function --tune_function_hash 53504c42ecb95363b73ac1f849a8a245 --st_checkpoint_dir /home/d2l-worker/syne-tune/python-entrypoint-2023-02-10-04-56-21-691/7/checkpoints
INFO:syne_tune.tuner:(trial 7) - scheduled config {'learning_rate': 0.03542833641356924, 'batch_size': 94, 'max_epochs': 10}
INFO:syne_tune.tuner:Trial trial_id 6 completed.
INFO:root:running subprocess with command: /home/d2l-worker/miniconda3/envs/d2l-en-release-0/bin/python /home/d2l-worker/miniconda3/envs/d2l-en-release-0/lib/python3.9/site-packages/syne_tune/backend/python_backend/python_entrypoint.py --learning_rate 0.5941192130206245 --batch_size 149 --max_epochs 10 --tune_function_root /home/d2l-worker/syne-tune/python-entrypoint-2023-02-10-04-56-21-691/tune_function --tune_function_hash 53504c42ecb95363b73ac1f849a8a245 --st_checkpoint_dir /home/d2l-worker/syne-tune/python-entrypoint-2023-02-10-04-56-21-691/8/checkpoints
INFO:syne_tune.tuner:(trial 8) - scheduled config {'learning_rate': 0.5941192130206245, 'batch_size': 149, 'max_epochs': 10}
INFO:syne_tune.tuner:Trial trial_id 7 completed.
INFO:root:running subprocess with command: /home/d2l-worker/miniconda3/envs/d2l-en-release-0/bin/python /home/d2l-worker/miniconda3/envs/d2l-en-release-0/lib/python3.9/site-packages/syne_tune/backend/python_backend/python_entrypoint.py --learning_rate 0.013696247675312455 --batch_size 135 --max_epochs 10 --tune_function_root /home/d2l-worker/syne-tune/python-entrypoint-2023-02-10-04-56-21-691/tune_function --tune_function_hash 53504c42ecb95363b73ac1f849a8a245 --st_checkpoint_dir /home/d2l-worker/syne-tune/python-entrypoint-2023-02-10-04-56-21-691/9/checkpoints
INFO:syne_tune.tuner:(trial 9) - scheduled config {'learning_rate': 0.013696247675312455, 'batch_size': 135, 'max_epochs': 10}
INFO:syne_tune.tuner:Trial trial_id 8 completed.
INFO:root:running subprocess with command: /home/d2l-worker/miniconda3/envs/d2l-en-release-0/bin/python /home/d2l-worker/miniconda3/envs/d2l-en-release-0/lib/python3.9/site-packages/syne_tune/backend/python_backend/python_entrypoint.py --learning_rate 0.11837221527625114 --batch_size 75 --max_epochs 10 --tune_function_root /home/d2l-worker/syne-tune/python-entrypoint-2023-02-10-04-56-21-691/tune_function --tune_function_hash 53504c42ecb95363b73ac1f849a8a245 --st_checkpoint_dir /home/d2l-worker/syne-tune/python-entrypoint-2023-02-10-04-56-21-691/10/checkpoints
INFO:syne_tune.tuner:(trial 10) - scheduled config {'learning_rate': 0.11837221527625114, 'batch_size': 75, 'max_epochs': 10}
INFO:syne_tune.tuner:Trial trial_id 9 completed.
INFO:root:running subprocess with command: /home/d2l-worker/miniconda3/envs/d2l-en-release-0/bin/python /home/d2l-worker/miniconda3/envs/d2l-en-release-0/lib/python3.9/site-packages/syne_tune/backend/python_backend/python_entrypoint.py --learning_rate 0.18877290342981604 --batch_size 187 --max_epochs 10 --tune_function_root /home/d2l-worker/syne-tune/python-entrypoint-2023-02-10-04-56-21-691/tune_function --tune_function_hash 53504c42ecb95363b73ac1f849a8a245 --st_checkpoint_dir /home/d2l-worker/syne-tune/python-entrypoint-2023-02-10-04-56-21-691/11/checkpoints
INFO:syne_tune.tuner:(trial 11) - scheduled config {'learning_rate': 0.18877290342981604, 'batch_size': 187, 'max_epochs': 10}
INFO:syne_tune.stopping_criterion:reaching max wallclock time (720), stopping there.
INFO:syne_tune.tuner:Stopping trials that may still be running.
INFO:syne_tune.tuner:Tuning finished, results of trials can be found on /home/d2l-worker/syne-tune/python-entrypoint-2023-02-10-04-56-21-691
--------------------
Resource summary (last result is reported):
trial_id      status  iter  learning_rate  batch_size  max_epochs  epoch  validation_error  worker-time
       0   Completed    10       0.100000         128          10   10.0          0.258109   108.366785
       1   Completed    10       0.316420          52          10   10.0          0.146223   179.660365
       2   Completed    10       0.045813          71          10   10.0          0.311251   143.567631
       3   Completed    10       0.113754         244          10   10.0          0.336094    90.168444
       4   Completed    10       0.521166          47          10   10.0          0.146092   190.111242
       5   Completed    10       0.052599         181          10   10.0          0.399893    91.044401
       6   Completed    10       0.090860          48          10   10.0          0.197369   172.148435
       7   Completed    10       0.035428          94          10   10.0          0.414369   112.588123
       8   Completed    10       0.594119         149          10   10.0          0.177609    99.182505
       9   Completed    10       0.013696         135          10   10.0          0.901235   107.753385
      10  InProgress     2       0.118372          75          10    2.0          0.465970    32.484881
      11  InProgress     0       0.188773         187          10      -                 -            -
2 trials running, 10 finished (10 until the end), 722.92s wallclock-time
validation_error: best 0.1377706527709961 for trial-id 4
--------------------

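The logs of all evaluated hyperparameter configurations are stored for further analysis. At any time during the tuning job, we can easily get the results obtained so far and plot the incumbent trajectory.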

d2l.set_figsize()
tuning_experiment = load_experiment(tuner.name)
tuning_experiment.plot()

WARNING:matplotlib.legend:No artists with labels found to put in legend. Note that artists whose label start with an underscore are ignored when legend() is called with no argument.
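The incumbent trajectory is the best objective value found so far, as a function of wall-clock time. A minimal sketch of computing it from raw results follows; the (time, error) pairs are made up for illustration and are not taken from the run above. Because mode is "min", the incumbent is a running minimum.

```python
from itertools import accumulate

# Hypothetical (wall-clock seconds, validation error) results, in order of arrival.
observations = [(60, 0.45), (110, 0.30), (180, 0.35), (240, 0.21), (300, 0.26), (370, 0.18)]

times = [t for t, _ in observations]
# Running minimum: the best error observed up to and including each time point.
incumbent = list(accumulate((err for _, err in observations), min))
print(list(zip(times, incumbent)))
```

For a metric that is maximized (mode="max"), one would accumulate with max instead.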

19.3.3. Visualize the Asynchronous Optimization Process

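Below we visualize how the learning curves of every trial (each color in the plot represents a trial) evolve during the asynchronous optimization process. At any point in time, there are as many trials running concurrently as we have workers. Once a trial finishes, we immediately start the next trial, without waiting for the other trials to finish. Asynchronous scheduling thus reduces the idle time of workers to a minimum.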

d2l.set_figsize([6, 2.5])
results = tuning_experiment.results
for trial_id in results.trial_id.unique():
    # Plot the learning curve of one trial against wall-clock time
    df = results[results["trial_id"] == trial_id]
    d2l.plt.plot(
        df["st_tuner_time"],
        df["validation_error"],
        marker="o"
    )
d2l.plt.xlabel("wall-clock time")
d2l.plt.ylabel("objective function")

Text(0, 0.5, 'objective function')
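The claim that at most n_workers trials run at any time, with workers rarely idle, can be checked from the start and end times of trials. The sketch below does this with a sweep over start and end events. The intervals are hypothetical (eight made-up trials greedily packed onto two workers), not read from tuning_experiment.results.

```python
# Hypothetical (start, end) wall-clock intervals of trials on 2 workers.
intervals = [(0, 3), (0, 9), (3, 5), (5, 9), (9, 17), (9, 12), (12, 17), (17, 18)]
n_workers = 2


def max_concurrency(intervals):
    """Return the largest number of trials running at the same time."""
    events = [(s, +1) for s, _ in intervals] + [(e, -1) for _, e in intervals]
    # Tuples sort by time, then by delta, so at equal times an ending trial (-1)
    # frees its worker before the next trial (+1) starts.
    events.sort()
    running = peak = 0
    for _, delta in events:
        running += delta
        peak = max(peak, running)
    return peak


busy = sum(e - s for s, e in intervals)
makespan = max(e for _, e in intervals) - min(s for s, _ in intervals)
utilization = busy / (n_workers * makespan)
print(max_concurrency(intervals), round(utilization, 3))  # 2 0.972
```

Here the peak concurrency equals the number of workers, and the workers are busy for 35 of the 36 available worker-time units.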

19.3.4. Summary

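We can greatly reduce the waiting time for random search by distributing trials across parallel resources. In general, we distinguish between synchronous scheduling and asynchronous scheduling. Synchronous scheduling means that we sample a new batch of hyperparameter configurations once the previous batch has finished. If we have stragglers (trials that take more time to finish than the others), our workers need to wait at synchronization points. Asynchronous scheduling evaluates a new hyperparameter configuration as soon as resources become available, and hence ensures that all workers are busy at any point in time. While random search is easy to distribute asynchronously and does not require any change to the actual algorithm, other methods require some additional modifications.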

19.3.5. Exercises

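Consider the model DropoutMLP implemented in Section 5.6 and used in Exercise 1 of Section 19.2.

Implement an objective function hpo_objective_dropoutmlp_synetune to be used with Syne Tune. Make sure that your function reports the validation error after every epoch.

Using the setup of Exercise 1 in Section 19.2, compare random search to Bayesian optimization. If you use SageMaker, feel free to use Syne Tune's benchmarking facilities in order to run experiments in parallel. Hint: Bayesian optimization is provided as syne_tune.optimizer.baselines.BayesianOptimization.

For this exercise, you need to run on an instance with at least 4 CPU cores. For one of the methods used above (random search, Bayesian optimization), run experiments with n_workers=1, n_workers=2, and n_workers=4, and compare the results (incumbent trajectories). At least for random search, you should observe linear scaling with respect to the number of workers. Hint: For robust results, you may have to average over several repetitions each.

Advanced. The goal of this exercise is to implement a new scheduler in Syne Tune.

Create a virtual environment containing both the d2lbook and syne-tune sources.

Implement the LocalSearcher from Exercise 2 in Section 19.2 as a new searcher in Syne Tune. Hint: Read this tutorial. Alternatively, you may follow this example.

Compare your new LocalSearcher with RandomSearch on the DropoutMLP benchmark.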
