Fast-moving battery technology poses a question for designers: whether to choose the latest technology for maximum performance or to sacrifice performance for a mature and more reliable technology. The advent of chemistry-independent battery chargers helps to resolve this issue.

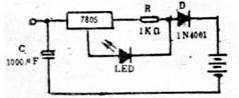

Figure 1. A simple chemistry-independent battery charger combines a microcontroller, IC2, with a battery-charging controller, IC1, that employs linear regulation.

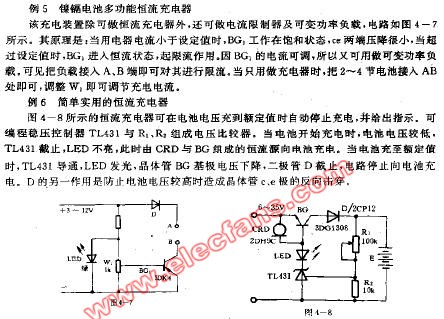

Figure 2. Substituting a switch-mode controller for the linear-regulator controller in Figure 1 produces a relatively efficient circuit whose lower operating temperature lets it operate as part of a portable system.

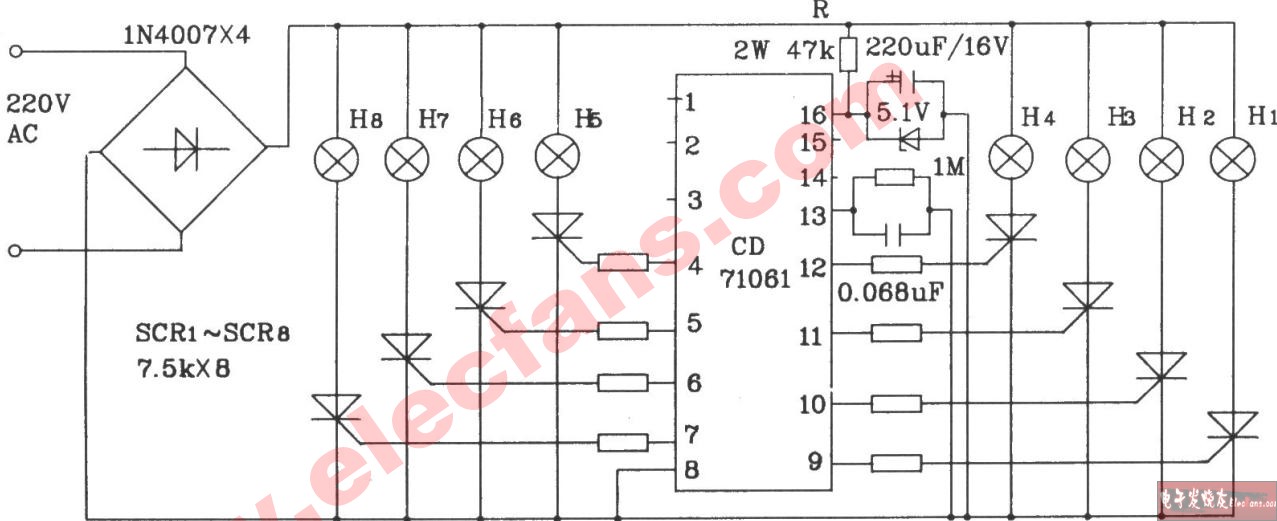

Figure 3. A chemistry-independent battery charger that conforms to the Smart Battery System specification includes a chemistry-independent charger-control IC and an SMBus interface.

A similar version of this article appeared in the May 7, 1998 issue of EDN.

Given the mix of battery types and charging requirements in modern systems, chemistry-independent chargers are welcome tools for those who use and maintain portable equipment. Such chargers detect the installed battery's type and accordingly adjust the charging procedure.

Chemistry-independent chargers have several other benefits. For example, they enable OEMs to keep pace with battery developments without costly hardware changes. They also allow users to upgrade a product's battery rather than buy new equipment. And for systems compatible with the Smart Battery System (SBS) specification, the standard interface that the specification defines for charger, battery, and host lets users choose any SBS-compatible battery.

Most chargers that can charge more than one battery type must be able to shift their output characteristic from voltage source to current source. They must also be able to monitor a battery's charging current and voltage and, in some cases, its temperature and charge time.

Consider Charging Requirements

The most common batteries in use today are nickel-cadmium (NiCd), nickel-metal-hydride (NiMH), lithium-ion (Li-ion), and lead acid. NiCd and NiMH types require charging with a constant-current source. To determine when the charge should terminate, the charger must detect a change in either battery voltage (dV/dt) or temperature (dT/dt). Li-ion and lead-acid batteries require charging with a voltage-limited current source, and the charger for those types must include a timer that terminates the charge after a specified time interval. Current-source accuracy in these applications is generally noncritical, but for Li-ion batteries the voltage-limit accuracy must be better than 1%.

The need to shift on command between voltage source and current source is problematic, because the requirements conflict: the charger's output impedance should be low for a voltage source but high for a current source, so the two modes have different stabilization requirements. Meeting both in a single circuit is difficult.

On the other hand, stability is often noncritical, because the charger's output voltage and current change slowly during a normal charging cycle. If, however, the charger's input source sees load changes that cause ripple or step changes in its output, as is likely with cheap wall-cube sources, then a compromise on loop stability can cause excessive ripple in the battery-charging voltage or current. This problem is acute for Li-ion batteries, which require tight tolerances on the applied voltage. If the charging voltage for a Li-ion battery is too low, the battery does not charge to its full capacity. If too high, the battery becomes permanently damaged, which is why Li-ion-battery manufacturers usually specify a charging-voltage accuracy better than 1%.
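The per-chemistry requirements in this section can be collected into a lookup table that a charger's firmware consults. The following is a minimal Python sketch; the class and field names are hypothetical, and the lead-acid voltage tolerance is left as `None` because the text does not specify one.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Dict, Optional, Tuple


class Mode(Enum):
    CONSTANT_CURRENT = "constant-current source"
    VOLTAGE_LIMITED_CURRENT = "voltage-limited current source (CC, then CV)"


class Termination(Enum):
    NEGATIVE_DV_DT = "dV/dt goes negative (temperature as backup)"
    ZERO_DV_DT = "dV/dt reaches zero (temperature as backup)"
    TIMER = "timer ends the charge after a specified interval"


@dataclass(frozen=True)
class ChargeProfile:
    mode: Mode
    termination: Termination
    # Required voltage-limit accuracy as a fraction; None where the text gives none.
    voltage_tolerance: Optional[float]


PROFILES: Dict[str, ChargeProfile] = {
    "NiCd": ChargeProfile(Mode.CONSTANT_CURRENT, Termination.NEGATIVE_DV_DT, None),
    "NiMH": ChargeProfile(Mode.CONSTANT_CURRENT, Termination.ZERO_DV_DT, None),
    "Li-ion": ChargeProfile(Mode.VOLTAGE_LIMITED_CURRENT, Termination.TIMER, 0.01),
    "lead-acid": ChargeProfile(Mode.VOLTAGE_LIMITED_CURRENT, Termination.TIMER, None),
}


def voltage_window(chemistry: str, v_nominal: float) -> Optional[Tuple[float, float]]:
    """Allowed (min, max) charging voltage, or None if no tolerance is specified."""
    tol = PROFILES[chemistry].voltage_tolerance
    if tol is None:
        return None
    return v_nominal * (1 - tol), v_nominal * (1 + tol)
```

For a single Li-ion cell, `voltage_window("Li-ion", 4.2)` gives a window of about 4.158V to 4.242V, which is why the charger's voltage loop must not let input ripple push the output around.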

Detect Voltage and Temperature

For NiCd and NiMH batteries, the preferred way to determine when to terminate the charge cycle is to monitor changes in the battery voltage. A NiCd battery's terminal voltage remains relatively constant during charging and then peaks and falls off when the battery is fully charged, so the charger should terminate a NiCd charge when dV/dt goes negative. NiMH batteries behave like NiCd types, but their voltage falls off more slowly, so the charger should terminate a NiMH charge when dV/dt reaches zero. As a backup end-of-charge test, the manufacturers of NiCd and NiMH batteries usually recommend monitoring the battery's temperature as well as its voltage.

Charging Li-ion and lead-acid batteries requires that the charger apply a constant current and then a constant voltage. The charger monitors the battery voltage to determine when this switchover should occur. A Li-ion or lead-acid battery charger must also monitor the battery voltage to minimize the time that the charger applies a regulated voltage to the battery, because a prolonged "float" interval can damage these batteries. Thus, a charger must sample the battery voltage for all four battery types: to detect the end of charge for NiCd and NiMH types, and to time the switch from current to voltage regulation for lead-acid and Li-ion types.
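The decisions described above can be sketched as one function that a charger calls after each voltage sample. This is a simplified illustration, not a production algorithm: it estimates dV/dt from only the last two samples, treats temperature as a fixed cutoff rather than a dT/dt test, and uses a hypothetical `flat_band` threshold for the NiMH "zero slope" test. Real chargers filter the samples and ignore the start of charge.

```python
from enum import Enum
from typing import Sequence


class Action(Enum):
    CONSTANT_CURRENT = "apply constant current"
    CONSTANT_VOLTAGE = "regulate voltage"
    TERMINATE = "end the charge"


def slope(samples: Sequence[float], dt_s: float) -> float:
    """dV/dt estimated from the two most recent voltage samples, in V/s."""
    return (samples[-1] - samples[-2]) / dt_s


def next_action(chemistry: str, samples: Sequence[float], *, dt_s: float,
                v_limit: float, temp_c: float, temp_max_c: float,
                cv_elapsed_s: float, cv_max_s: float,
                flat_band: float = 1e-5) -> Action:
    """Decide the charger's next step from the sampled battery voltage."""
    if chemistry in ("NiCd", "NiMH"):
        if temp_c >= temp_max_c:          # backup end-of-charge test
            return Action.TERMINATE
        if len(samples) >= 2:
            s = slope(samples, dt_s)
            if chemistry == "NiCd" and s < 0:
                return Action.TERMINATE   # voltage has peaked and is falling
            if chemistry == "NiMH" and s <= flat_band:
                return Action.TERMINATE   # voltage has stopped rising
        return Action.CONSTANT_CURRENT
    if chemistry in ("Li-ion", "lead-acid"):
        if samples[-1] < v_limit:
            return Action.CONSTANT_CURRENT  # still in the current-source phase
        # At the voltage limit: regulate voltage, but cap the float time.
        if cv_elapsed_s >= cv_max_s:
            return Action.TERMINATE
        return Action.CONSTANT_VOLTAGE
    raise ValueError(f"unknown chemistry: {chemistry}")
```

The same sampled voltage drives every branch, which mirrors the point that the charger must read battery voltage for all four chemistries.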

Create a Smart Charger

To control charging, all chemistry-independent battery chargers require some form of "intelligence" residing in the battery or the charger. A microcontroller, for example, enables a "smart charger" to determine the battery type and to modify its charging routine as necessary.

With a smart charger, the battery pack itself requires no intelligence; it needs only a way to tell the charger its chemistry and its number of cells. The pack can provide this information through a keyed connector on the battery pack or a stored code that the charger can read. Either way, the charging routines can reside in software, and supporting a new battery type requires only a software update. The charger can thus accommodate battery types that were unavailable when it was introduced.

Committing charging procedures to software also lets manufacturers extend the life of their products through software upgrades. A consumer can upgrade a battery pack, for example, simply by installing the software upgrade that comes with the new battery. Software also lets manufacturers change the battery type a product uses during production without changing the product's hardware.
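The software-defined approach described above can be sketched as a pack code that the charger decodes, plus a table of charging routines keyed by chemistry. The 8-bit code layout (chemistry ID in the upper four bits, cell count in the lower four) and all function names are hypothetical; the text says only that the pack reports its chemistry and cell count.

```python
from typing import Callable, Dict, Tuple

# HYPOTHETICAL pack-code layout: bits 7-4 = chemistry ID, bits 3-0 = cell count.
CHEMISTRY_IDS: Dict[int, str] = {0x1: "NiCd", 0x2: "NiMH", 0x3: "Li-ion", 0x4: "lead-acid"}

# Routines are looked up by chemistry, so supporting a new battery type means
# registering one more function (a firmware update) rather than new hardware.
ROUTINES: Dict[str, Callable[[int], str]] = {}


def routine(chemistry: str) -> Callable[[Callable[[int], str]], Callable[[int], str]]:
    """Decorator that registers a charging routine for one chemistry."""
    def register(fn: Callable[[int], str]) -> Callable[[int], str]:
        ROUTINES[chemistry] = fn
        return fn
    return register


@routine("Li-ion")
def charge_li_ion(cells: int) -> str:
    return f"constant current, then hold {4.2 * cells:.1f} V"


@routine("NiCd")
def charge_nicd(cells: int) -> str:
    return f"constant current into {cells} cells until dV/dt < 0"


def decode_pack_code(code: int) -> Tuple[str, int]:
    """Split the pack's code byte into (chemistry, number of cells)."""
    chem_id, cells = (code >> 4) & 0xF, code & 0xF
    if chem_id not in CHEMISTRY_IDS or cells == 0:
        raise ValueError(f"unrecognized pack code {code:#04x}")
    return CHEMISTRY_IDS[chem_id], cells


def plan_charge(code: int) -> str:
    """Pick the installed routine for the pack that reported `code`."""
    chemistry, cells = decode_pack_code(code)
    if chemistry not in ROUTINES:
        raise ValueError(f"no charging routine installed for {chemistry}")
    return ROUTINES[chemistry](cells)
```

With this layout, a two-cell Li-ion pack reporting `0x32` selects the Li-ion routine with an 8.4V limit. A NiMH pack (`0x2n`) is rejected until a NiMH routine is registered, which is the software-only upgrade the text describes.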

Charge-Control IC and μC Make a Smart Charger

You can construct a smart charger with a low-cost μC, such as the PIC16C73, and a chemistry-independent battery-charger controller, such as the MAX846 (Figure 1). In this case, the user has preset the charge controller (IC1) for charging Li-ion batteries. IC1 has an internal, 0.5%-accurate reference from which it generates internally preset regulation voltages (4.2V for one cell; 8.4V for two cells), and the controller drives an external pnp transistor to regulate the charging voltage and current.

The PIC16C73 μC, IC2, has two PWM outputs, CCP1 and CCP2. A filtered version of CCP2 drives IC1's VSET pin to control the voltage set point, and the CCP1 PWM output controls battery current by setting the voltage on ISET. The μC monitors battery current by using its internal A/D converter, through its AN1 pin, to measure the voltage at IC1's ISET pin. It monitors battery voltage by reading an internal A/D-converter channel driven by the R5/R6 voltage divider.

The μC operates at 4MHz, and, to achieve the required accuracy, its PWM output frequency is 25kHz. Each PWM output drives an RC filter followed by a unity-gain op-amp buffer. The μC is powered by a 3.3V low-dropout regulator that is derived from a reference (both are internal to IC1), so the μC's PWM output levels, and therefore the filtered control voltages, track any variation in that reference. This arrangement improves accuracy.
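The 4MHz clock and 25kHz PWM frequency determine how finely the μC can set each control voltage. This sketch computes the Timer2 period register (PR2) and the duty-cycle resolution from the PIC16 CCP PWM relation, period = (PR2 + 1) × 4 × Tosc × prescale, with a 10-bit duty value counted in Tosc × prescale units. It is an assumption-level illustration; check the relation against the PIC16C73 data sheet before relying on it.

```python
import math
from typing import Tuple


def pic_pwm_setup(f_osc_hz: float, f_pwm_hz: float, prescale: int = 1) -> Tuple[int, int, float]:
    """Return (PR2, duty-cycle steps, resolution in bits) for a PIC16 CCP PWM."""
    # PWM period = (PR2 + 1) * 4 * Tosc * prescale
    pr2 = round(f_osc_hz / (4 * f_pwm_hz * prescale)) - 1
    if not 0 <= pr2 <= 255:
        raise ValueError("PR2 out of range; choose another Timer2 prescale")
    # The duty value counts in Tosc * prescale units, so one period
    # holds 4 * (PR2 + 1) distinct duty-cycle settings.
    steps = 4 * (pr2 + 1)
    return pr2, steps, math.log2(steps)


pr2, steps, bits = pic_pwm_setup(4e6, 25e3)
step_mv = 3300 / steps  # filtered-output change per duty step for a 3.3 V swing
```

With these numbers PR2 is 39, giving 160 duty steps (about 7.3 bits), so each step moves the filtered 3.3V output by about 20.6mV. Halving the PWM frequency would double the number of steps, at the cost of more ripple through the same RC filter.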

The voltage at VSET (Pin 6 of IC1), which connects to the internal 1.65V reference through a 20-kilohm resistor, determines the charger's voltage limit. VSET receives the filtered PWM signal from the μC's CCP2 output, whose voltage equals 3.3V multiplied by CCP2's duty cycle, D2:

$$V_{CCP2} = 3.3\,\mathrm{V} \times D_2$$

The charger's voltage limit is

VLIMIT is adjustable over a range VADJ, where

The value of R1 in Figure 1 (825 kilohms) makes this adjustment range approximately 4.7%.
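The expressions for VLIMIT and VADJ are missing from this copy of the article. The sketch below is one model consistent with the surrounding text, not the MAX846's documented transfer function. It assumes the buffered CCP2 voltage drives VSET through R1 while the internal 20-kilohm resistor ties VSET to 1.65V, and that the voltage limit scales in proportion to VSET/1.65V. Under those assumptions it reproduces the quoted 4.7% range for R1 = 825 kilohms.

```python
R_INT = 20e3    # internal resistor from VSET to the 1.65 V reference (from the text)
V_REF = 1.65    # IC1's internal reference, volts


def v_set(d2: float, r1: float) -> float:
    """VSET with the buffered CCP2 voltage (3.3 V x D2) applied through R1 (assumed)."""
    v_pwm = 3.3 * d2
    # Node voltage of the R1 / R_INT divider between v_pwm and V_REF.
    return V_REF + (v_pwm - V_REF) * R_INT / (R_INT + r1)


def v_limit(d2: float, v_nom: float, r1: float) -> float:
    """ASSUMED: the voltage limit scales with VSET / 1.65 V around v_nom."""
    return v_nom * v_set(d2, r1) / V_REF


def adjust_range(r1: float) -> float:
    """Full-scale adjustment (D2 from 0 to 1) as a fraction of the nominal limit."""
    return v_limit(1.0, 1.0, r1) - v_limit(0.0, 1.0, r1)
```

Here `adjust_range(825e3)` equals 40/845, about 0.047, so in this model the limit spans roughly ±2.4% around 4.2V or 8.4V, with the nominal value at D2 = 0.5.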

By setting the state of CELL2 (Pin 10 of IC1), the user sets the nominal voltage limit at 4.2V or 8.4V, making the charger compatible with a single- or two-cell Li-ion battery. A small adjustment range lets the charger accommodate the manufacturer-recommended limits. Limiting VADJ to approximately 10% of VLIMIT ensures 1% accuracy for the float voltage even with a 1% resistor for R1, because a 1% error in R1 changes the adjustment by only about 1% of VADJ, or roughly 0.1% of VLIMIT.

This charger can also handle NiCd and NiMH batteries, because they require no float voltage. The maximum charging voltage these types require is typically 1.75V per cell. Thus, the charger of Figure 1 can handle a NiCd or NiMH battery of as many as four cells: four cells need 7V, within the 8.4V two-cell limit, whereas five cells would need 8.75V.

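The current sourced by IC1's ISET pin controls the charging current. This current equals 1μA for every millivolt across the current-sense resistor, R2. Terminating ISET with a resistor converts this current to a voltage, and regulating that voltage to 1.65V regulates the charging current. The 20-kilohm values for R3 and R4 set the ISET source impedance at 10 kilohms and set the no-load voltage at half the filtered CCP1 output, (3.3V × D1)/2, where D1 is the CCP1 duty cycle. The charging current is as follows:

$$I_{CHG} = \frac{1.65\,\mathrm{V} - 1.65\,\mathrm{V} \times D_1}{10\,\mathrm{k\Omega}} \times \frac{1\,\mathrm{k\Omega}}{R_2} = \frac{0.165\,\mathrm{V} \times (1 - D_1)}{R_2}$$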

This relationship sets the charging current to zero when the CCP1 duty cycle is 1 (that is, when the output stays high continuously), and it gives the maximum charging current when the duty cycle is zero. Thus, the maximum current is 0.165V/R2 = 825mA, which corresponds to R2 = 0.2 ohm.
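The ISET loop described in the text can be checked numerically. This sketch assumes ISET sources 1mA per volt across R2 (1μA per mV), sees a 10-kilohm source impedance, and is servoed to 1.65V. The no-load voltage of (3.3V × D1)/2 is an assumption, namely what a 20-kilohm/20-kilohm divider from the filtered CCP1 output to ground would produce, and R2 = 0.2 ohm is inferred from the stated 825mA maximum.

```python
R_TH = 10e3    # ISET termination impedance: R3 || R4 = 20k || 20k
V_REG = 1.65   # IC1 servos the ISET pin voltage to this value
GM = 1e-3      # ISET sources 1 uA per mV across R2, i.e. 1 mA/V
R2 = 0.2       # ohms; inferred from 0.165 V / 825 mA


def charge_current(d1: float, r2: float = R2) -> float:
    """Charging current (A) for CCP1 duty cycle d1, under the stated model."""
    if not 0.0 <= d1 <= 1.0:
        raise ValueError("duty cycle must be in [0, 1]")
    v_nl = 1.65 * d1                 # ASSUMED no-load voltage: (3.3 V x D1) / 2
    i_set = (V_REG - v_nl) / R_TH    # current the ISET pin must source
    return i_set / (GM * r2)         # invert I_SET = GM * I_CHG * R2


def duty_for_current(i_chg: float, r2: float = R2) -> float:
    """CCP1 duty cycle that requests i_chg amperes."""
    d1 = 1.0 - i_chg * r2 / 0.165
    if not 0.0 <= d1 <= 1.0:
        raise ValueError("requested current is outside 0 to 0.165 V / R2")
    return d1
```

In this model, `charge_current(0.0)` is 0.825A, `charge_current(1.0)` is zero, and a 50% duty cycle requests half the maximum. Firmware would typically use `duty_for_current` to go from a target current to a CCP1 setting.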

Deal with Temperature Effects

Though fine as a standalone charger, the circuit in Figure 1 may be unsuitable for portable equipment because of the temperature rise associated with power dissipation in the pnp transistor. This dissipation equals the charging current times the difference between the input voltage and the battery voltage. You can minimize this temperature rise by using a switching power supply (Figure 2), whose greater efficiency allows a step-down from input voltage to battery voltage with less power dissipation and therefore at a lower temperature. As in Figure 1, a PIC16C73 μC controls the chemistry-independent charger (MAX1648). The μC's A/D-converter input, AN0, monitors battery voltage via the resistor divider R4/R5, and the μC's PWM outputs set limits for the charging current and voltage.
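To see why the pass transistor runs hot, the dissipation formula above can be compared with the loss of a switching stage. The sketch uses Figure 1's 825mA maximum current; the 12V input, 7V battery, and 90% switcher efficiency are hypothetical values chosen for illustration, not figures from the article.

```python
def linear_dissipation_w(i_chg_a: float, v_in: float, v_batt: float) -> float:
    """Power in the pnp pass transistor: I_CHG x (V_IN - V_BATT)."""
    if v_in < v_batt:
        raise ValueError("input voltage must exceed battery voltage")
    return i_chg_a * (v_in - v_batt)


def switcher_loss_w(i_chg_a: float, v_batt: float, efficiency: float) -> float:
    """Loss of a step-down converter delivering V_BATT x I_CHG at the given efficiency."""
    if not 0.0 < efficiency <= 1.0:
        raise ValueError("efficiency must be in (0, 1]")
    p_out = i_chg_a * v_batt
    return p_out * (1.0 / efficiency - 1.0)


linear = linear_dissipation_w(0.825, 12.0, 7.0)    # hypothetical 12 V input, 7 V battery
switching = switcher_loss_w(0.825, 7.0, 0.90)      # hypothetical 90% efficiency
```

Under these assumptions the linear stage dissipates about 4.1W, against roughly 0.64W for the switcher, and the linear figure grows directly with the input-to-battery headroom.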

To achieve the 1% accuracy that Li-ion batteries require, the system includes a 0.2%, 4.096V external reference, IC3. This reference sets the charging levels for IC1 and the PWM-output voltage levels through three SPDT analog switches, IC2. To avoid excessive loading of the reference voltage, which could affect PWM-output accuracy, the μC receives power from the 5V VL regulator internal to IC1 instead of from the reference. As in Figure 1, the μC runs at 4MHz, and the PWM outputs run at 25kHz.

A lowpass filter (R1/C1) develops the DC voltage that controls charging voltage via IC1's SETV input. A similar filter, which develops a DC voltage for control of the charging current at the SETI input, also includes a 1-to-4 voltage divider (R2/R3) that establishes the required level of one-fourth the reference voltage. Resistors with 5% accuracy are adequate for achieving the 10%-accuracy charging-current requirement.
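The claim that 5% resistors meet the 10% charging-current requirement can be checked with a worst-case corner analysis of the divider. This sketch assumes R2 is the upper leg with three times R3's value, so the output is one-fourth of the input; the 30-kilohm and 10-kilohm values are hypothetical, since only the ratio matters.

```python
from itertools import product
from typing import Tuple


def divider_ratio(r_top: float, r_bottom: float) -> float:
    """Output/input ratio of a two-resistor divider."""
    return r_bottom / (r_top + r_bottom)


def worst_case_error(r_top_nom: float, r_bottom_nom: float, tol: float) -> Tuple[float, float]:
    """(min, max) fractional ratio error with each resistor at +/- tol.

    The ratio is monotonic in each resistor, so the extremes lie at the corners.
    """
    nominal = divider_ratio(r_top_nom, r_bottom_nom)
    errors = []
    for s_top, s_bottom in product((-1, 1), repeat=2):
        ratio = divider_ratio(r_top_nom * (1 + s_top * tol),
                              r_bottom_nom * (1 + s_bottom * tol))
        errors.append(ratio / nominal - 1.0)
    return min(errors), max(errors)


low, high = worst_case_error(30e3, 10e3, 0.05)   # hypothetical R2 = 30k, R3 = 10k
```

The corners come out at about -7.3% and +7.7%, inside the 10% budget, which leaves a few percent for other error sources.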

The limit for maximum charging voltage is four times the reference voltage, or 16.384V. The maximum allowed charging current depends on the 0.1-ohm current-sense resistor and the current-sense threshold internal to IC1 (0.185V): 0.185V/0.1 ohm = 1.85A. The voltage at THM (Pin 9) controls charger operation; that voltage depends on the position of the top switch in IC2, which the μC's RA1 output controls. Connecting THM to the thermistor causes IC1 to shut down when the battery temperature is too high or too low, and connecting THM to ground shuts off the charger.
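The two hardware limits above follow directly from the reference, the sense resistor, and the sense threshold. This sketch computes them and clips a requested setpoint to them. The mapping from setpoint to PWM duty cycle assumes each limit is proportional to its filtered PWM voltage and reaches its maximum at 100% duty cycle; that linear relation is an assumption for illustration, not a statement from the article.

```python
from typing import Tuple

V_REF = 4.096      # IC3: 0.2%-accurate external reference, volts
R_SENSE = 0.1      # current-sense resistor, ohms
V_CS = 0.185       # IC1's internal current-sense threshold, volts

V_CHARGE_MAX = 4 * V_REF        # 16.384 V
I_CHARGE_MAX = V_CS / R_SENSE   # 1.85 A


def clamp_request(v_req: float, i_req: float) -> Tuple[float, float]:
    """Clip a requested (voltage, current) to what the Figure 2 hardware allows."""
    return (min(max(v_req, 0.0), V_CHARGE_MAX),
            min(max(i_req, 0.0), I_CHARGE_MAX))


def duty_cycles(v_req: float, i_req: float) -> Tuple[float, float]:
    """PWM duty cycles for SETV and SETI.

    ASSUMPTION: each setpoint is proportional to its filtered PWM voltage.
    """
    v, i = clamp_request(v_req, i_req)
    return v / V_CHARGE_MAX, i / I_CHARGE_MAX
```

Clamping in firmware keeps a corrupted or out-of-range request from commanding the output beyond what the hardware is designed to deliver.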

Smart Batteries

In an SBS, the information necessary to charge the battery resides in the battery pack itself, which implements the proper charging sequence by controlling the charger. The charger and the host system therefore need not know either the battery's type or its state of charge. A smart charger is unnecessary, but the battery pack must be intelligent: it "knows" the charging algorithm it requires. The pack "talks" to the charger through the SMBus, an extension of the I2C bus that provides communication within the system. This configuration applies to batteries and equipment compatible with the SBS specification.

The chemistry-independent battery-charger IC (MAX1647) in Figure 3 is a controller for smart-battery chargers, and it has an SMBus interface compatible with the smart-battery specification. In stepping down from the input voltage to the required voltage or current, the IC drives two external MOSFETs, the switching transistor and the synchronous rectifier, from its DH and DL outputs, respectively. The switch-mode controller is more efficient than a linear type, and the synchronous rectifier is more efficient than a diode rectifier at lower battery voltages. These efficiency gains minimize the circuit's operating temperature, making it well suited to portable equipment.
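In an SBS, the pack's requests reach the charger as SMBus Write Word transactions. The sketch below only builds the byte sequences. It assumes the Smart Battery Charger specification's 7-bit charger address 0x09 and its ChargingCurrent() (0x14, in mA) and ChargingVoltage() (0x15, in mV) command codes, which should be checked against the specification revision in use. It omits the optional packet-error-checking byte and does not model how the MAX1647 responds.

```python
from typing import List

CHARGER_ADDR_7BIT = 0x09      # Smart Battery Charger SMBus address (assumed per spec)
CMD_CHARGING_CURRENT = 0x14   # ChargingCurrent(), value in mA
CMD_CHARGING_VOLTAGE = 0x15   # ChargingVoltage(), value in mV


def write_word_frame(addr_7bit: int, command: int, value: int) -> List[int]:
    """Bytes of an SMBus Write Word (no PEC), excluding START/STOP and ACK bits."""
    if not 0 <= value <= 0xFFFF:
        raise ValueError("word value out of range")
    # Address byte with R/W = 0 (write), then command, then data low byte first.
    return [(addr_7bit << 1) | 0, command, value & 0xFF, (value >> 8) & 0xFF]


def charging_request(current_ma: int, voltage_mv: int) -> List[List[int]]:
    """The two writes a pack would send to request a charge current and voltage."""
    return [
        write_word_frame(CHARGER_ADDR_7BIT, CMD_CHARGING_CURRENT, current_ma),
        write_word_frame(CHARGER_ADDR_7BIT, CMD_CHARGING_VOLTAGE, voltage_mv),
    ]
```

For example, a two-cell Li-ion pack asking for 1000mA at 8400mV produces the frames `[0x12, 0x14, 0xE8, 0x03]` and `[0x12, 0x15, 0xD0, 0x20]`. The charger never needs to know the chemistry; it simply regulates to the numbers it receives.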

To achieve the necessary accuracy, a linear current source generates charging currents of 1mA to 31mA. When the switch-mode current source turns on to provide higher charging currents, this linear source remains on to ensure monotonicity. Q1 minimizes the battery-charger IC's power dissipation by dropping most of the IOUT voltage.
